Abstract

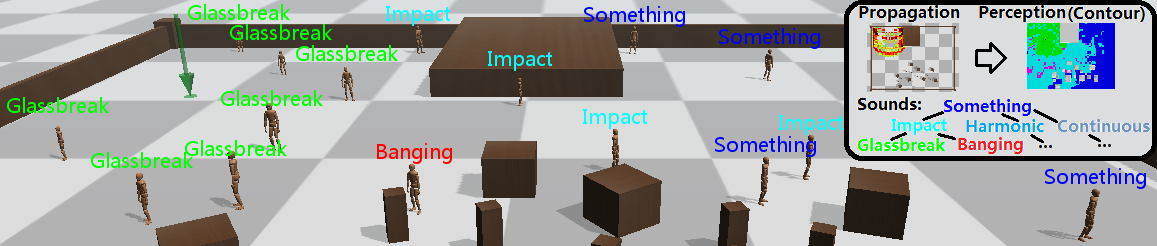

The perception of sensory information and its impact on behavior is a fundamental component of being human. While visual perception is routinely considered for navigation, collision avoidance, and behavior selection, the acoustic domain remains relatively unexplored. Recent work in acoustics focuses on synthesizing sound in 3D environments rather than on how a virtual agent perceives acoustic signals, even though such perception is a useful and realistic adjunct to any behavior selection mechanism. Previous approaches to signal propagation and statistical models of sound recognition have been too computationally expensive to integrate into real-time autonomous agent simulations. In this paper, we present SPREAD, a novel agent-based sound perception model that uses a discretized sound packet representation with acoustic features including amplitude, pitch range, and duration. SPREAD simulates how these features are propagated, attenuated, spread, and degraded as packets traverse the virtual environment. We also consider how packets at the same location combine and interfere with each other. Agents perceive and classify sounds from their locally received set of sound packets using a hierarchical clustering scheme, so each agent has an individualized hearing and understanding of its surroundings. Using this model, we demonstrate several simulations in which sound perception greatly enriches agent control and simulation outcomes.
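The abstract names the packet features and processing stages (propagation, attenuation, degradation, combination) but not the update rules. The Python sketch below shows one way a discretized packet model could work on a grid. Everything in it is a hypothetical simplification and not SPREAD's actual equations: the `SoundPacket` class, a fixed per-cell `decay` factor for attenuation, a pitch-range `blur` standing in for degradation, a `min_amp` audibility floor, and the rule that packets with overlapping pitch ranges sum their amplitudes. It also ignores walls and reflection, and it leaves out the hierarchical clustering classifier.

```python
from collections import deque
from dataclasses import dataclass


@dataclass(frozen=True)
class SoundPacket:
    """Hypothetical discretized packet carrying the features named in the abstract."""
    amplitude: float   # arbitrary linear units
    pitch_low: float   # lower edge of the pitch range (Hz)
    pitch_high: float  # upper edge of the pitch range (Hz)
    duration: float    # seconds


def attenuate(p: SoundPacket, decay: float, blur: float) -> SoundPacket:
    """One grid step: scale amplitude down and widen (degrade) the pitch range.

    `decay` and `blur` are assumed per-cell constants, not values from SPREAD.
    """
    return SoundPacket(p.amplitude * decay,
                       max(0.0, p.pitch_low - blur),
                       p.pitch_high + blur,
                       p.duration)


def propagate(width, height, source, packet, decay=0.8, blur=5.0, min_amp=0.05):
    """Breadth-first spread of one packet over an obstacle-free 4-connected grid.

    Returns {cell: packet}. With uniform decay, BFS reaches each cell first along
    a shortest path, so the stored packet is the strongest arrival. Spreading
    stops once the attenuated amplitude would fall below the hypothetical
    audibility floor `min_amp`.
    """
    received = {source: packet}
    frontier = deque([source])
    while frontier:
        x, y = frontier.popleft()
        nxt = attenuate(received[(x, y)], decay, blur)
        if nxt.amplitude < min_amp:
            continue  # too quiet to travel further
        for dx, dy in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            cell = (x + dx, y + dy)
            if 0 <= cell[0] < width and 0 <= cell[1] < height and cell not in received:
                received[cell] = nxt
                frontier.append(cell)
    return received


def combine(packets):
    """Merge packets that meet at one cell.

    Crude stand-in for interference: packets whose pitch ranges overlap are
    fused (amplitudes add, ranges union, longest duration kept); packets with
    disjoint pitch ranges stay separate.
    """
    merged = []
    for p in sorted(packets, key=lambda q: q.pitch_low):
        if merged and p.pitch_low <= merged[-1].pitch_high:
            m = merged[-1]
            merged[-1] = SoundPacket(m.amplitude + p.amplitude,
                                     m.pitch_low,
                                     max(m.pitch_high, p.pitch_high),
                                     max(m.duration, p.duration))
        else:
            merged.append(p)
    return merged


if __name__ == "__main__":
    # Two hypothetical sources on a 10x10 grid and one listening agent.
    whistle = SoundPacket(1.0, 900.0, 1100.0, 0.5)
    engine = SoundPacket(2.0, 80.0, 200.0, 3.0)
    field_a = propagate(10, 10, (0, 0), whistle)
    field_b = propagate(10, 10, (9, 9), engine)
    listener = (3, 4)
    heard = [f[listener] for f in (field_a, field_b) if listener in f]
    for p in combine(heard):
        print(p)
```

Each agent would then classify only the packets present at its own cell, which is what gives every agent an individualized view of the soundscape. A real implementation would replace the fixed `decay` and `blur` with physically motivated attenuation and would account for occluders, which this grid sketch does not.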

Related Articles

SPREAD : Sound Propagation and Perception for Autonomous Agents in Dynamic Environments

Pengfei Huang, Mubbasir Kapadia and Norman I. Badler. ACM SIGGRAPH/EUROGRAPHICS Symposium on Computer Animation, SCA 2013 (To Appear)
