Abstract

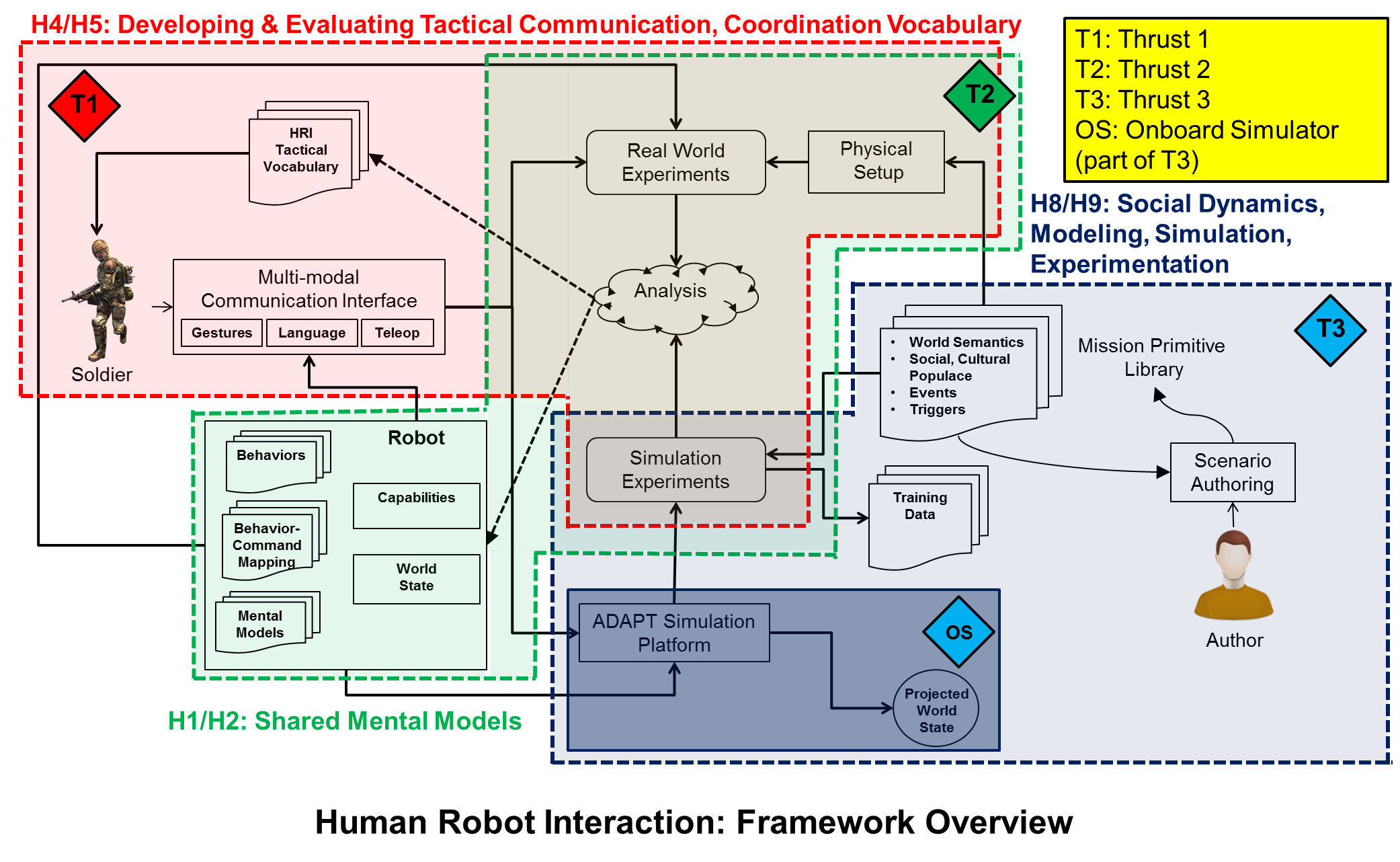

The RCTA is a large, multi-year, multi-partner project to build effective, efficient, intelligent and collaborative human-robot teams. Our team plays two roles in this effort:

(1) Multi-Modal Communication. This project examines ways in which multi-modal input can be delivered to and interpreted by a robot. Environmental conditions can degrade, corrupt, or render unusable various channels of communication, and thus the signals they carry. For instance, in a hostile environment it may be difficult to hear a spoken instruction for any number of reasons, and it is sometimes more practical to use hand signals than the spoken word. Multi-modal channels of interest may include speech, non-speech verbal utterances, arm gestures, body pose and motion, and head and eye movements. Our research will focus on the semantics and pragmatics of multi-modal signals in order to communicate task information; give commands to start, stop, and otherwise guide or control the tasks of other entities; reduce ambiguity within signals; and mitigate the effects of interfering signals. In addition, multi-modal communication may be explicit or implicit: explicit communication is the purposeful conveyance of information with a defined meaning through multiple modalities (e.g., audio, visual, tactile), while implicit communication is the inadvertent conveyance of information about emotional and contextual state that affects interpretation, thoughts, and behaviors.

The task objective is to develop a multi-modal tactical communication protocol for human-robot communication and to enable the preprocessing of raw tactical and mission-relevant communication data to inform Perception and Intelligence (e.g., world model, ACT-R). This capability will also directly support mission-relevant shared mental models (SMM) and contribute to all communication aspects of the capstone vision.

(2) Dynamics of operating within a social and cultural environment. This task concerns the parameters of microscopic (i.e., individual) behaviors in a cultural context (e.g., a marketplace), where social interactions between members of the same and different cultural units become a significant (but not the only) factor in understanding what may be happening in a situation and what human-robot teams need to investigate (or ignore). Likewise, human-robot teams must be aware of the cultural meaning of their own actions in a foreign context.

The objective of this task is to provide a real-time interactive simulation of (for example) a Middle Eastern populace exhibiting indigenous cultural factors and behaviors while interacting with a human-robot soldier team. The deliverables of this task will provide a readily authorable, interactive virtual and visual test bed in which soldiers and robots can experience and interact with a Middle Eastern populace, facilitating experimentation and collaboration in a visually, socially, and culturally relevant setting. The results of this subtask will enable HRI to study behaviors and predictive models of HRT on realistic missions set in a virtual marketplace characteristic of the Middle East. Authoring and recording variations in the simulation will provide valuable synthetic training data for Perception research. Finally, an interactive populace will provide a valuable test bed in which to exercise and prototype robot and team cognition and tactical capabilities. Long-term goals include using planning methods to generate simulations with global narrative constraints and unanticipated events (user input), and introducing parameters to control culture and character psychology at the animation layer.

Team Members

Mubbasir Kapadia (Post-doc, Team Lead)

Funda Durupinar (Post-doc)

Alexander Shoulson (PhD)

Pengfei Huang (PhD)

Cory Boatright (PhD)

Himanshu Masandh (Masters, CGGT)

Lauren Frazier (Masters, CIS)

Corey Novich (Undergrad, DMD)

Zia Zhu (Undergrad, DMD)

Nathan Marshak (Undergrad, CIS)

Justin Cockburn (Undergrad, CIS)

Yonas Solomon (Undergrad, CIS)

Related Projects