Exam 2 study guide

The one-hour study guide for exam 2

Latest update: Fri May 6 12:14:08 EDT 2016

Disclaimer: This study guide attempts to touch upon the most important topics that may be covered on the exam but does not claim to necessarily cover everything that one needs to know for the exam. Finally, don't take the one hour time window in the title literally.

Transport Layer

The network layer (layer 3 of the OSI stack) is responsible for machine-to-machine communication. The transport layer, one layer higher (layer 4), provides logical communication channels between applications. An application can create an arbitrary number of these channels, each of which has another endpoint on some process running on some host. Writing data onto this channel delivers it to the application that is reading data on the other end of this channel. The transport layer is responsible for implementing this abstraction. Routers in the network are unaware of this concept since they only provide network layer (machine-to-machine) services.

There are multiple transport protocols available on top of IP, including TCP, UDP, and SCTP. TCP and UDP are by far the most popular of these. Two responsibilities of the transport layer are multiplexing and demultiplexing communication channels on the network and, in some cases, implementing reliable data transfer.

Incidentally, a packet at the transport layer is called a segment; it is called a datagram at the network layer and a frame at the datalink layer. We send Ethernet frames, which contain datagrams that are routed by routers. These datagrams, in turn, contain segments that the transport layer of the operating system’s network stack processes.

Transport layer multiplexing and demultiplexing

Multiplexing and demultiplexing are the software mechanisms in place to combine data from multiple logical communication channels on a machine into a single stream of packets on the network and then separate a stream of incoming datagrams into the appropriate communication channels. This is important since communication on multiple sockets shares the same network connection. We can have multiple distinct streams at the transport layer that appear as a single stream of data to the network layer.

Multiplexing is the process of taking data from multiple communication channels (sockets) and sending it out of the machine as a stream of datagrams. Demultiplexing is the opposite process: separating the incoming stream of datagrams into the individual messages for the individual sockets to which each segment is targeted.

The key to IP transport layer multiplexing and demultiplexing is the use of port numbers. Each transport layer segment contains source and destination port numbers. A port number is a 16-bit number that has a unique association to a socket (a communication endpoint) on each host. Naming a socket, also known as binding, is the process of associating a socket with a specific port number and address. The address is the local host’s IP address, of course. In the case where a host has several network interfaces, it will have that many IP addresses and it is possible to make the socket available on only one of these interfaces. More commonly, though, a special address, INADDR_ANY, is used to associate a socket with all available network interfaces. Port numbers are usually specified explicitly for server programs since clients will need to know where to contact them. For example, an SMTP mail server will typically listen for client connections on TCP port 25. A client, on the other hand, will generally not care what port it uses and specifying port 0 is a request for the operating system to pick any available unused port number.

UDP multiplexing and demultiplexing

UDP is an extremely lightweight transport layer protocol on top of IP. Unlike TCP, it does not offer reliable message delivery and it does not guarantee that messages will be received in the order that they were sent.

An incoming frame (e.g., ethernet packet) contains a protocol identifier that identifies the payload (the data part of the frame) as IP data. When that payload is passed to the IP layer, a field in the header of the IP datagram identifies the higher layer protocol as UDP. The UDP layer reads the destination port field in the UDP header and delivers the segment to the socket that is associated with that port number. The kernel maintains a hash table of socket structures that is indexed by a key that is created from the UDP destination port. With UDP, any segments addressed to a specific port number will be delivered to the socket that is identified with that port. We will see that this is different from TCP, which takes performs full demultiplexing based on the source and destination.

While UDP does not have the reliability and in-order delivery advantages of TCP (or, as we shall see, rate adjustment to deal with congestion), there are several reasons that make it attractive for certain applications:

Segments are sent immediately. When a user writes data to a UDP socket, it immediately goes down the layers of the network stack and is transmitted onto the network. TCP may wait for an acknowledgement or for sufficient data in its transmit buffer instead of transmitting immediately.

Message boundaries are preserved. TCP treats a communication stream as a sequence of bytes. The number of writes to a socket does not necessarily correspond to the number of messages that will be received at the other end.

No connection setup overhead. With UDP, the first segment that is sent on the network can contain application data. With TCP, we first need to establish a connection with a three-way handshake, which requires an overhead of sending a segment and receiving an acknowledgement before we can send a segment with data.

UDP is stateless. The kernel has to keep track of sockets, of course, but does there is no need to keep track of sequence numbers, buffer for out-of-order data, acknowledgements, etc. This uses less kernel memory and makes error recovery and load balancing easier: requests can be redirected to other hosts spontaneously.

Smaller headers. UDP has an eight byte header compared to TCP’s 20-byte header. This leads to smaller packets on the network.

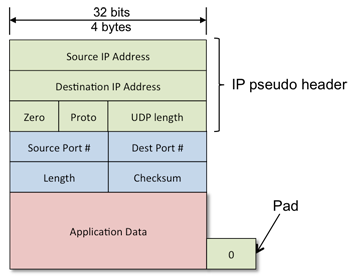

UDP header and checksum

The UDP header (Figure 1) is eight bytes long. It contains the source and destination ports, segment length and a checksum. The checksum is a simple error-detecting code that allows the UDP layer to check for segment corruption. If the received segment contains an error, it is dropped and not delivered to the socket. The checksum is computed over the UDP header, application data, and a pseudo IP header. The pseudo IP header contains a subset of fields from the IP header (source address, destination address, transport protocol ID, and UDP segment length). It is included in the checksum computation to ensure that the UDP layer will not get any misrouted segments (this is a safeguard but the IP header has its own checksum, which is computed in the same way).

The checksum is a 16-bit value. If the data being summed does not contain an even number of bytes (i.e., it does not have an integral multiple of 16-bit values), it is padded with a zero byte. The same ones’ complement algorithm is used to compute checksums for IP headers, UDP headers, and TCP headers. The value of the checksum field is set to zero during the computation. To compute the checksum, all 16-bit chunks of data are added together. Each time the addition of two numbers results in an overflow, a one is added to the result. Finally, the bits of the final result are inverted.

To validate the checksum, the receiver performs the same arithmetic, generating a checksum for the segment and pseudo IP header. Since the segment checksum is included in the header for this computation, the result for an error-free packet will be all ones (0xffff). This computation reliably detects single bit errors in the segment.

TCP multiplexing and demultiplexing

UDP offers only limited demultiplexing. Segments from multiple sockets (sources) that are directed to the same host address and port number are received by the socket on that host that is associated with that port number.

With TCP, a connected socket is associated is associated with four values:

- the sender’s source address

- recipient’s (destination) address

- source port

- destination port

Recall that with TCP sockets, a server first creates a socket whose sole purpose is listening for and accepting incoming connections. It does so with the listen system call. This socket is said to be in the LISTEN state. It will never be used for data transfer. Its only purpose is to accept incoming connections.

When an incoming TCP connection request arrives at the host, the kernel searches for a socket in the LISTEN state where the packet’s destination address and port match those of the socket (the address can be “any” - a wildcard). The kernel then creates a new socket. The remote address and port are copied from the TCP segment header onto the new socket structure. Once the connection is set up, this new socket is in the ESTABLISHED state, indicating to the kernel that it has a connection to another socket. Any incoming TCP data segment will go to the socket that is associated with the source and destination addresses and ports in the segment header.

Principles of reliable data transfer

Given that the underlying IP network does not guarantee packet delivery, if a transport layer protocol wants to provide reliable data delivery, it has to implement it via software. We will first look at evolving reliable data transfer (RDT) software in general before turning our attention to how it is implemented in TCP specifically.

If the underlying networking layer was indeed reliable, there would, of course, be no need for RDT. A sender would send a segment and a receiver would receive it and immediately deliver it to the application.

Stop-and-wait protocol

Let us now assume that all segments are received (this will not be a valid assumption in the real world of IP) but that some of them might have corrupted data. In this case, we may need to request retransmission of a segment because it arrived with errors. Automatic Repeat Request (ARQ) refers to a family of protocols that acknowledge received packets and request retransmission for bad packets.

An acknowledgement (ACK), also known as a positive acknowledgement, is a receiver feedback message that confirms the successful receipt of a message. A negative acknowledgement (NAK) is a feedback message that tells the sender that a message was not successfully received.

A simple protocol for providing RDT over a channel that always delivers segments but may introduce errors into them is to transmit one segment and wait for an ACK or NAK. A receiver sends an ACK if the segment was received without errors. Upon receipt of the ACK, the sender can transmit the next segment. A receiver sends a NAK if the segment was received with errors. Upon receipt of a NAK, the sender retransmits the same segment and again waits for an ACK or NAK. This form of ARQ protocol, where a segment will not be sent until the previously sent segment has been acknowledged, is called a stop-and-wait protocol.

The protocol we just outlined fails in that it recognizes that data can be corrupted in transit but does not take that possible corruption into account for ACK/NAK messages. We can modify the protocol by adding a checksum for ACK/NAK segments and, upon receipt, detect if those segments are corrupted. If corrupted, we will treat the message as a NAK and retransmit the segment. This can result in the receiver getting duplicate packets. If a receiver gets a duplicate packet, it will need to ignore the data but still send an ACK in return.

Now we need to distinguish new data from a retransmission. A sequence number allows us to do that. In the case of a stop-and-wait protocol, a one-bit sequence number suffices since we only need to distinguish between the current packet we’re waiting for and the retransmission of a correctly-received previous packet. This stop-and-wait protocol using a single-bit sequence number is called an alternating bit protocol.

Removing NAKs

We just saw two cases where a recipient gets a packet that it does not want: receipt of a duplicate packet (in which case it sends an ACK) and receipt of a corrupted packet (in which case it sends a NAK and awaits retransmission). We can remove the need for a NAK by using an ACK and adding a sequence number to it. The ACK will acknowledge the last packet that was correctly received. If the sender receives an ACK for a different number than the packet it most recently sent, it will treat that as a NAK and retransmit the packet.

RDT over a lossy channel

So far, we only considered the case where packet data might be corrupted but the packets were always delivered to their destination. Now we need to account for the fact that packets may be lost. This can be due to overflow of a queue at a router or to data corruption in the packet header that prevents the packet from being routed to its destination.

We place the burden of detecting a lost packet on the sender. The sender will not get an acknowledgement from a receiver in the case that a packet was never delivered or in the case that it was delivered but the acknowledgement message was lost. To detect this lack of acknowledgement, the sender will use a countdown timer. The timer is initialized when the packet is sent. If it times out before an acknowledgement is received, the sender retransmits the packet and reinitializes the timer. The timer should be set to some value that is longer than the average round-trip time so that the timeout will indicate a likely loss. Setting the timer to a too-short value will result in excess duplicate packets. Although the protocol can deal with them, we’d like to avoid an excessive amount of unnecessary retransmissions.

Pipelining

A stop-and-wait protocol will not transmit a packet until the previous packet has been successfully sent and acknowledged. Having to wait a round-trip delay before sending the next packet yields horrible network utilization, often far less than 1%. Recall that network utilization is the ratio of the actual traffic on the network to the traffic that the network can support.

A way to improve network utilization dramatically is to send successive packets without first waiting for acknowledgements of earlier packets. This technique is called pipelining and we will look at two approaches to pipelining: Go-Back-N and Selective Repeat. In order to send multiple packets without first waiting for an acknowledgement from each one, we will need to increase the range of sequence numbers so that we can identify packets and match an acknowledgement to a specific transmitted packet. We also need to save packets on the transmitter until they have been acknowledged by the receiver in case we need to re-send them. If the receiver gets out-of-sequence packets, it cannot deliver them to the application and may need to consider storing them in a receive buffer or else requesting those same packets from the sender again in the future.

Go-Back-N (GBN) Protocol

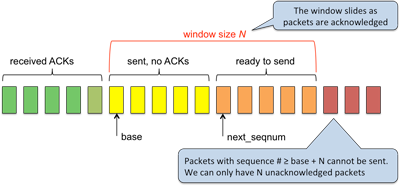

The Go-Back-N protocol allows a sender to send multiple packets without waiting for an acknowledgement. Each successive packet has a monotonically increasing sequence number. A window size defines the maximum number of packets that could be transmitted before waiting for acknowledgements. The base of the window is the earliest packet that has been sent but not yet acknowledged. When an acknowledgement is received for a sequence number that corresponds to that packet, it can be discarded (the sender will never need to retransmit it) and the window advances, or slides to the next unacknowledged packet and the new packet that entered the window can now be transmitted. This is why Go-Back-N is called a sliding window protocol.

The sender sends all packets that fall within the current window and starts a timer. The receiver expects to receive packets in the correct sequence number order but it may not get that. Whenever a packet is received correctly and is in the proper sequence, that packet is acknowledged with its sequence number and delivered to the application. The expected sequence number is incremented and the receiver waits for the next packet.

If the receiver gets a packet that has a different sequence number, it discards the packet and sends back a duplicate acknowledgement (that is, the acknowledgement for the previous sequence number it received). An acknowledgement number n indicates that the receiver has correctly received all packets up to and including packet n. This form of acknowledgement is called a cumulative acknowledgement. The receiver only needs to keep track of the next sequence number it needs and only stores one packet at a time.

When the sender receives an acknowledgement n, it advances the base of its window to n. Packets less than or equal to n can be discarded. Note that there is no harm in losing acknowledgements less than n; this acknowledgement indicates that all prior packets were received as well. If the acknowledgement number corresponds to the last packet that was sent, the sender has all outstanding acknowledgements and can stop the timer. Otherwise, the timer is restarted to wait for additional acknowledgments. If the timer expires, that means that some all transmitted packets have not been acknowledged; one or more packets have been lost (or the final acknowledgement has been lost). Upon timer expiration, the sender sends all packets that are in the current window.

Selective Repeat (SR) Protocol

With the Go-Back-N protocol, many packets can be in the pipeline: sent but not yet acknowledged. A single error in one of these packets will result in a timeout at the sender and hence a retransmission of all packets in the sender’s window. This can result in a lot of unnecessary retransmissions since the receiver will be getting packets that it has previously received correctly but discarded.

Selective Repeat (SR). Selective repeat, like Go-Back-N, is a sliding window protocol but allows the receiver to store and acknowledge out-of-order packets so that they do not need to be retransmitted.

Instead of cumulative acknowledgements, the receiver sends an acknowledgement for the specific packet that was received. The sender’s window slides when the earliest packet in the window is acknowledged and always starts at the first unacknowledged packet. The window itself may contain a mix of acknowledged and unacknowledged packets.

The receiver must also maintain a window since it may receive packets out of sequence and needs to buffer them. Whenever a packet is received in sequence, it can be delivered to the application. The receive window slides to the slot for the first non-received packet.

Every transmitted packet has a separate timer associated with it. If an acknowledgement is not received successfully within that time, that specific packet is retransmitted. The receiver will acknowledge the packet if it fits in the window or if it is sequenced before the window. This latter case means that the packet is a duplicate of a packet that was already received and delivered to the application. If the packet is beyond the receiver’s window, it has no room to accept the packet and will ignore it.

Transport Layer: TCP

TCP, the Transmission Control Protocol, is the dominant transport protocol on the Internet. Where UDP was a thin layer over IP that provided us with multiplexing and a limited demultiplexing service (the source host was not factored into the demultiplexing - that’s up the the application to process), TCP provides applications with a reliable, bidirectional communication channel. In addition, TCP attempts to be a good network citizen and manage the flow control of data to ensure that the receiver’s buffer does not overflow and to avoid network congestion.

TCP is a connection-oriented protocol. This does not mean that a virtual circuit is established in the network. Indeed, routers are not aware of TCP any more than they are of UDP or other transport layer protocols. TCP is a form of protocol design called end-to-end control, where only the endpoints are responsible for the integrity of the connection. Routers do not guarantee not to drop packets, ensure the are sequenced correctly, or correct errors. The “connection” is managed in software at both end systems. Because the hosts need to keep track of the state of the communication channel, TCP has an initial connection setup step that comprises a three-way handshake where parameters such as port numbers, sequence numbers, and buffers are established. Once the connection is established, all messages are acknowledged and retransmitted if lost. When the session is complete, TCP enters a teardown phase to ensure both sides are informed that the connection is no longer needed and that they can free up resources that were used for the connection.

TCP’s communication is full duplex. This means that if a process A established a TCP connection to process B then process B can use the same connection to send data to process A.

TCP Segments

TCP sends segments between the sender and receiver. A segment is simply the transport layer term for a packet. The sending process writes data to a socket. This data is copied to the operating system kernel and ends up in TCP’s send buffer, a pool of memory devoted to that specific connection. TCP reads chunks of data from this send buffer, creates TCP segments, and sends them down to the IP layer for transmission onto the network (the IP layer will, in turn, send the IP datagram to the data link layer, which will actually get it to the network). When an IP datagram arrives at the destination machine, a protocol field in the IP header identifies the message as a TCP message and IP forwards it up to the TCP driver. TCP then examines the source address, source port, destination address, and destination port for find the appropriate socket for this segment. The data is placed in that socket’s receive buffer, the counterpart to the send buffer that is a pool of memory used to hold received data that did not yet make it up to the application.

Note that, unlike in UDP, TCP views the data coming from the application as a stream of bytes rather than individual messages that need to be sent out. There is no assurance that writing, say, 20 bytes to a socket will result in 20 bytes of data being transmitted in a TCP segment. If there was other data in the send buffer that was ready to transmit, it could be combined with this new data. Similarly, the 20 bytes may not be transmitted immediately but combined with additional data that is written onto that socket.

Maximum Segment Size

When the TCP driver reads bytes from data in the send buffer to create outgoing segments, it makes sure that the number of bytes is grabs is less than the maximum segment size (MSS) to make sure it does not try to send a segment larger than the underlying network can transmit. Data link layers have a Maximum Transmission Unit (MTU), the largest payload that they can carry. For a TCP segment to fit into a data link frame, the IP header, TCP header, and application data must be no larger than the MTU. Ethernet supports an MTU of 1500 bytes (with support for an MTU of 9,000 bytes for jumbo frames in gigabit ethernet) while 802.11 (Wi-Fi) supports a 7981-byte MTU. Since IP and TCP headers are each 20 bytes long, the MSS is typically the MTU minus 40 bytes. Hence, a common MSS on an ethernet network is 1460 bytes (1500–40).

Path MTU Discovery

It is easy enough for the TCP layer to find out the MTU of the local link layer, subtract 40, and compute a value for the MSS. While this is fine for communication within the LAN, this does not ensure that some link that the packet traverses to the destination will not have a smaller MTU, resulting in the need to fragment the packet (which is undesirable). The path MTU is the minimum MTU of all the hops along the path the destination. Unfortunately, there is no foolproof way of determining this value. IP networks are required to support an MTU of 576 bytes (512 bytes of data plus up to 64 bytes for headers). However, most network links can support larger MTUs (for example, and MTU of 1500 bytes works on practically all routers in the Internet but support is not guaranteed).

Path MTU Discovery (RFC 1181 and RFC 1191) defines a method for a host to discover the path MTU and hence set its MSS to the maximum possible value.

It works by initially assuming that the path MTU is the MTU of the first hop (this is local to the host and easy to find). All initial datagrams are sent to the destination with the “don’t fragment” (DF) bit set in the IP header. If a router needs to route the datagram to a link with a smaller MTU, the router will discard the datagram and send back an ICMP[1] datagram containing an ICMP Destination Unreachable message with a code indicating fragmentation needed. The MTU of the outbound link is placed in the ICMP message. Upon getting this response, the sending host reduces its MTU to the returned value and tries again. Since routes may change periodically, the Path MTU process is repeated periodically (every 10 minutes on Linux and Windows systems by default).

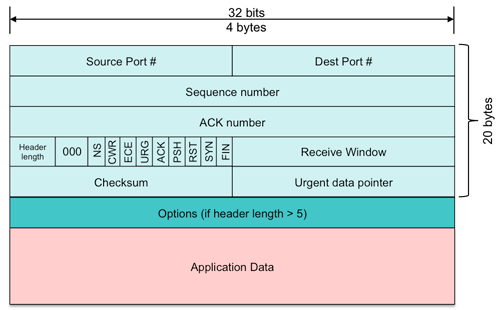

TCP structure

A TCP segment comprises at least 20 bytes of a TCP header followed by a variable number of bytes of application data. The TCP header is prefixed by an IP header that, in turn, is encapsulated in a link layer header.

Some of the key parts of the TCP header are:

- Source port number

- Identifies the port number of the sender’s socket.

- Destination port number

- Identifies the port number of the receiver’s socket.

- Checksum

- Like UDP, this is a 16-bit 1s complement sum of the TCP header, application data, and IP pseudo header. The IP pseudo header is a subset of the fields in the IP header: source address, destination address, protocol ID, and the length of the TCP header and data. All the data is added in 16-bit chunks. Any overflow carries result in a 1 added to the result. The final result is complemented (bits inverted). When the receiver performs the same operation and includes the checksum field, the result for an uncorrupted packet is all 1s (0xffff).

- Sequence number

- Every byte that is transmitted is counted starting from some initial sequence number. The 32-bit sequence number of the TCP segment is the number of the first byte in the data. The sequence number is an essential part of TCP’s reliable data transfer service.

- Acknowledgement (ACK) number

- A receiver sends back a 32-bit acknowledgement number that is the sequence number of the next byte that it expects to receive. This too is an essential part of TCP’s reliable data transfer service.

- Receive window

- The receiver tells the sender the maximum number of bytes that it is capable of receiving. This 16-bit value takes priority over the MSS (maximum segment size).

- Header length

- The header length indicates the number of 32-bit words in the TCP header. Note that the IP header contains the total datagram length, which can be used to determine how much data is in the message. The basic TCP header is 20 bytes and the minimum value of the header length is hence 5 (20 bytes = 5 32-bit words). TCP supports optional data in its header and this may make the header larger.

- TCP Options

- If the header length is greater than 5, that means there are more than 20 bytes in the header and TCP options are present. TCP options are located at the end of the normal 20-byte header. While approximately 32 different options are defined, some are obsolete and many are never used. The most commonly used options include:

- Maximum Segment Size: defines the maximum segment size that will be used during a connection between two hosts.

- Window Scaling: extends the number of bits for the receive window to allow the TCP window size to be specified as a 30-bit number instead of a 16-bit number.

- Selective Acknowledgements: allow the receiver to inform the sender if it received any out-of-order segments.

- Timestamps: an extension to allow more accurate mechanism to measure segment delivery time, including retransmissions.

- Flags

- A number of 1-bit flags are present in the header. These are:

ACK: informs the recipient of the segment that the acknowledgement field contains a valid sequence number.

RST, SYN, FIN: These are used to set up and tear down the TCP connection. The SYN (“Synchronize”) flag is used in the handshake used to set up a connection. The FIN (“Finish”) flag is used to close a connection. The RST (“Reset”) flag indicates that a segment was received for a closed or nonexistent socket.

PSH (“Push”): Tells the receiver to pass the received data to the application layer immediately. This flag is not used in practice.

URG (“Urgent”): Tells the receiver that the application data contains a region of “urgent” data, possibly along with “non-urgent” data. The 16-bit urgent data pointer is an index to the last byte of this data. As with PSH, the concept of urgent data is not used.

NS (“Nonce Sum”), CWR (“Congestion Window Reduced”), and ECE (“Explicit Congestion Expected”) are all part of an Explicit Congestion Notification protocol, which is an extension to IP. Not all routers support this and we will not cover this extension.

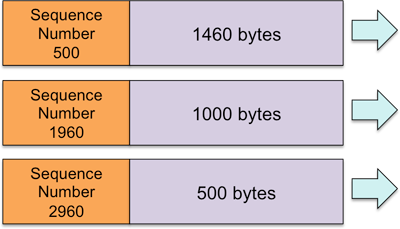

TCP Sequence Numbers

TCP views application data as a sequence of bytes and each byte in the sequence is assigned a sequence number. The sequence number in each TCP segment is the sequence number of the first byte in that segment. For example, if the current sequence number is 500 and we transmit a segment containing 1460 bytes, the segment will contain a sequence number of 500. If the following segment contains 1000 bytes, the sequence number of the segment will be 1960, which is 500 (the last sequence number) plus 1460 (the number of bytes that was sent with the last segment). Sequence numbers are 32-bit values and do not have to start with 0. The value may wrap around the 32-bit boundary, so all sequencing is done modulo 232 (mod 4,294,967,296).

TCP Acknowledgement numbers

Received TCP segments are acknowledged by the receiver. TCP uses pipelining and permits several segments to be sent at once prior to waiting for an acknowledgement (more on this later).

An acknowledgement number is present in a received segment and is a 32-bit number that indicates the sequence number of the next byte that the remote host is expecting to receive next. For example, in the above example we sent 1460 bytes with a sequence number of 500. Upon receipt of this segment, the return segment will contain an acknowledgement number of 1960, the sequence number of the next byte that the receiver needs. The message it just received contained bytes numbered 500 through 1959. When the receiver receives the next segment, 1000 bytes with a sequence number of 1960, it will return an acknowledgement number of 2960.

It would be a waste of network resources to send back a TCP segment containing nothing but an acknowledgement number. While this is inevitable in some cases, if the receiver happens to have data to transmit back to the sender, the acknowledgement number is simply set in the TCP header of the transmitted segment, completely avoiding the need to send a separate acknowledgement. Using an outgoing data segment to transmit an acknowledgement is known as a piggybacked acknowledgement.

TCP uses cumulative acknowledgements. The acknowledgement number that a receiver sends inside a TCP header is always the sequence number that the receiver wants to see next. Going back to the earlier example, if a receiver receives 1460 bytes with a sequence number of 500, it sends back an acknowledgement for the next byte it wants: 1960. Suppose that the next segment it receives contains 500 bytes with the sequence number 2960. This means that the desired segment was either lost or will be arriving out of sequence. Upon receiving the segment with sequence number 2960, the receiver generates an acknowledgement (all segments get acknowledged) but the acknowledgement number is the number of the earliest sequence it does not yet have: 1960. Hence, the sender will get back duplicate acknowledgements for 1960.

To avoid sending too many data-free acknowledgement segments, a receiver is allowed to wait up to 500 ms (half a second) before sending an acknowledgement. If another segment comes in during that interval, a cumulative acknowledgement must be sent. For a steady stream of messages, therefore, cumulative ACKs need to be sent for every other packet. However, any out-of-order segments must be acknowledged upon receipt.

A receiver does not have to store segments that were received out of sequence but it is more efficient to do so and practically every implementation of the TCP protocol does this. Continuing our example, suppose that the receiver does get the segment containing sequence number 1960 after receiving the segment withe the sequence number 2960; the segment really did arrive out of order and was not dropped. Now the receiver can fill in its hole in the receive buffer. It just got bytes 1960…2959 and it already had bytes 2960…3459 from the earlier receipt of the segment with sequence number 2060. The acknowledgement it sends now will be the cumulative acknowledgement – the sequence number of the next byte it needs: 3460. The sender will see acknowledgements of 1960, 1960, and 3460. Had the transmitted segments arrived in order, the acknowledgements would have been 1960, 2960, and 3460.

TCP Connection setup and teardown

Connection setup

TCP employs a three-way handshake to set up a connection: a process of SYN, SYN-ACK, and ACK. The client initiates the process; it creates a random initial sequence number (client_isn) and sends it to the server in a TCP segment with the SYN flag set. The server receives this and allocates send and receiver buffers as well as variables. This set of data, containing all the information about a connection, is called the transmission control block (TCB). The server then creates a SYN-ACK segment that acknowledges the received sequence number and contains a random sequence number from the receiver. This segment also has the SYN bit set. Upon receiving this, the client acknowledges the server’s sequence number by sending the final ACK segment to the server and allocates its own TCP buffers and variables for the connection.

SYN flooding

Kernel memory is finite and the operating system will not allocate an unlimited amount of memory for managing TCP connections. A denial-of-service attack called SYN flooding sends a large number of SYN segments to a machine but usually uses an unreachable return address to never complete the handshake to set up a legitimate connection. The recipient normally allocates memory for each SYN segment that it receives, expecting each to become a legitimate connection. Eventually, kernel memory is exhausted and the operating system will not allow any more incoming TCP connection requests, including, of course, legitimate ones. The operating system will continue to refuse incoming connections until those incomplete ones time out. The connection setup timeout is an administrator-configured value and can range from half a minute to several minutes.

Several approaches have been proposed to deal with SYN flooding. One of these is SYN cookies. The key realization is that the kernel allocates memory (the TCB) before the connection is fully set up. With the technique of SYN cookies, no state is saved (no memory allocated) upon the receipt of a connection request. Instead, any needed information is encoded into the initial sequence number. Since that sequence number (+1) will be sent back in the final ACK from the client, the server will be able to validate that the ACK, and hence the requesting client, is legitimate. The initial sequence number that the server creates is a hash of the source and destination IP addresses, ports, and some secret value known only to the server. A client will not be able to spoof this value since it does not know the secret but a server can easily validate it from the acknowledgement number in the ACK message from a legitimate client.

MSS announcement

TCP provides an option during connection setup to tell the other side its maximum segment size (MSS); that is, the largest size segment that it is willing to accept. If both machines are on the same LAN, the MSS is likely to be the MTU of the network interface minus the size of the protocol headers (20 bytes for IP and 20 more bytes for TCP), although it can differ even here if, for example, one device supports jumbo (9000-byte) Ethernet packets and the other does not. The Internet requirement is that all IP routers support an MSS of at least 536 bytes.

Invalid messages

If a host receives a TCP segment where the port numbers or source address to not match any connection (e.g., the socket is closed or there is no listener on that address), it will send back a reset segment, a TCP segment with the RST flag set. In the case of UDP, an attempt to send a message to a port that does not have any process listening on it will result in the generation of an ICMP message back to the sender.

Connection teardown

Either the sender or the receiver can decide to terminate a TCP connection. Termination involves telling the other side that you are finished sending data, getting an acknowledgement, and then freeing allocated resources for that connection.

This is a two step process between the two hosts (let’s call them A and B). The the host that initiates the teardown, host A, sends a finished message: a TCP segment with the FIN flag set. It then enters the FIN_WAIT_1 state and waits for an acknowledgement from host B. This FIN message is a promise that host A will not send any more data on the connection. Host B sends the acknowledgement and enters the CLOSE_WAIT state. It may still have data to send, however. Upon receiving the acknowledgement, host A enters the FIN_WAIT_2 state and waits for host B to terminate its side of the connection. Host B does the same thing that host A did: once it has no more data to send, it sends a TCP segment with the FIN flag set and enters the LAST_ACK state, which means it is waiting for the final acknowledgement from host A. When host A receives the FIN message, it knows that it will receive no more messages from host B. It sends the final ACK to host B and enters the TIME_WAIT state, which is a timeout period to ensure that host B receives the final ACK and there are no stray packets for that connection in the network.

Timeouts

Since TCP does not know about the congestion or performance of the underlying network and the network does not provide any guarantees for packet delivery, TCP relies on a time limit to determine if any transmitted segments were lost. If an acknowledgement is not received within a specific time limit then the sender may assume that the segment was lost. Since IP provides no guarantees on the timeliness of delivery, this retransmission timeout (RTO) can only be a best guess. We need a value that is long enough to avoid excessive timeouts and retransmissions but is short enough to avoid excessive waits.

TCP keeps track of the average round-trip time of segments. Since these may fluctuate over time, TCP uses an exponentially weighted moving average (EWMA), which places approximately 10–20% of the weight on the most recent RTT measurement. This average of the RTT is called the smoothed round trip time, or SRTT.

The second thing that TCP keeps track of is how much the recently measured round-trip time deviated from the SRTT. This too is is an EWMA function, placing approximately 25% of the weight on the most recent deviation. The average delay is called the round-trip time variation, or RTTVAR. It is an estimate of how much the RTT typically deviates from the average RTT and is an approximation to the standard deviation, which would be slower to compute.

The retransmission timeout (RTO) value is set to a function of the SRTT and RTTVAR:

timeout interval = SRTT + 4 * RTTVAR

However, whenever a timeout occurs, this value is doubled. The timeout value is doubled for each timed-out retransmission up to a value of 64 seconds. This doubling of the timeout is called an exponential backoff. Whenever an acknowledgement is received without a timeout, the timeout interval is reset to its base value.

TCP Reliable Data Transfer

When an application writes data to a socket, the data is copied over to the send buffer for that socket’s TCP connection. Some time later (the time is not specified in the standards), TCP will grab data sitting in the send buffer and create and transmit one or more TCP segments, each with the appropriate sequence number. The first of these segments will cause the RTO timer to be set. Whenever any segment containing an acknowledgement from the receiver arrives, the timer is reset. If the acknowledgement number is greater than the sequence number of the base of the send buffer, the sender can remove all bytes prior to that of the acknowledgement number from the send buffer since it knows that the receiver has them. If a timeout occurs, TCP will retransmit only one segment, the non-acknowledged segment with the smallest sequence number, double the timeout interval, and restart the timer.

Let us consider a few cases to illustrate how this works.

Lost ACK

If an acknowledgement is lost and the sender times out (meaning that there were no additional segments sent), the sender will retransmit that segment. The receiver, upon getting it, will see that it does not need that sequence number (it is a duplicate segment) and will discard the segment but will send an acknowledgement back to the sender.

Another case is when the sender sent two segments but the acknowledgement to the first one was lost. Because acknowledgements are cumulative and the sender gets the second, cumulative acknowledgement, it knows that the sender has received all the bytes and a retransmission is not necessary.

Delayed ACKs

If the network round-trip time suddenly became much longer than expected, acknowledgements to a sequence of segments might arrive after a timeout. When the timeout occurs, the sender will retransmit only the earliest unacknowledged segment and restart the timer. Suppose now that acknowledgements for the previously transmitted segments arrive. TCP will process them and adjust the base of its send buffer if those bytes are no longer needed. When the receiver gets the duplicate packet, it will discard it but send an acknowledgement. Some time later, the sender will receive this acknowledgement but see that it is a duplicate and hence discard it.

TCP Fast Retransmit

As we have seen, TCP uses pipelining and can send multiple segments before waiting for acknowledgements. If a receiver detects a missing sequence number, it means one of two things: a segment was lost (either discarded due to queue overflows or due to data corruption) or that a segment is delivered out of sequence. TCP does not use negative acknowledgements but sends an acknowledgement for every received out-of-sequence segment with the sequence number of the next byte it needs. In the case where a segment has indeed been lost, every segment after that will be acknowledged with the same sequence number. Hence, the receiver will see a stream of duplicate acknowledgements.

If TCP receives three segments with duplicate acknowledgement numbers, it assumes a segment was lost (the assumption is that it is unlikely that one segment was routed or delayed such that three others arrived first). Instead of waiting for an RTO to occur, TCP will transmit that missing segment (the one with the sequence number of the ACK). This technique is called a fast retransmit because the protocol retransmits the segment without waiting for the RTO to occur.

Selective Acknowledgements

TCP behaves in a similar manner, but not quite the same, as the Go-Back-N protocol. The sender only keeps track of the earliest sequence number that has been transmitted but not acknowledged. A distinction is that, in the case of a timeout, Go-Back-N will retransmit all segments in its window while TCP will only retransmit the lowest (earliest) segment.

With this behavior, having the receiver store out-of-order segments is optional. The receiver may store them. If it does, and the receipt of a segment fills in a hole (missing segment), then TCP will send back a cumulative ACK, ensuring that the sender will not have to retransmit those segments. However, the sender does not know if this will happen and has to hold on to all unacknowledged segments in its send buffer just in case any or all of those segments have to be resent.

An optional enhancement to the TCP protocol uses the options field in the header to allow the receiver to send over a list of {start byte, end byte} values to identify the specific range of bytes that have been received. These are called Selective Acknowledgements and make the protocol behave like a Selective Repeat protocol. The acknowledgement number in the TCP header is unchanged but the list of received byte ranges allows the sender to remove those enumerated segments from its send buffer since it knows that they will never have to be retransmitted.

Receiver-regulated flow control

It would be pointless to keep sending data to the receiver if the receiver’s application isn’t keeping up with reading it. In addition to slowing down transmission due to congestion (which we will look at next), TCP allows the receiver to tell the sender to tell it how much space it has in the receive window (the free space in the receive buffer). It does this by placing the size of the receive window into the receive window field in the TCP header in each segment that is sent to the sender.

One problem that can arise is if the receive window size is zero, the sender would stop transmitting and not get feedback from the receiver once the window size became bigger when the application consumed some data. The feedback is absent because the receiver already sent acknowledgements for all received segments. To remedy this, the sender uses probing: if the receive window is zero, the sender will periodically send a message with one byte of data. The receiver does not have to accept this data if the window size is truly zero (the sender will retransmit) but this gives the receiver a chance to send an acknowledgement segment with a non-zero receive window once there is room in the buffer.

TCP Congestion Control

TCP tries to be a good network citizen and decrease the rate at which a sender sends traffic based on its perception of congestion in the network. This is in addition to TCP’s flow control where a recipient can tell a sender to send less data by giving it a smaller window.

TCP uses a sliding window approach to flow control. The way that TCP controls the rate at which data is sent on a network is by adjusting the size of its sending window. Recall that a window size regulates the number of bytes that can be sent before waiting for acknowledgements from the recipient. We have seen that a window size of one MSS gives us a stop-and-wait protocol where we can send out only one segment before waiting for an acknowledgement for the receipt of that segment. In general, the transmission rate is the window size divided by the round-trip time. TCP’s use of the sliding window algorithm makes the protocol self-clocking: packet transmission is timed by the receipt of acknowledgements; each acknowledgement slides the window.

A TCP connection receives a maximum size that the receiver can accept in the receive window field of the TCP header (abbreviated as rwnd). In addition to that, the transmitter will dynamically adjust its transmit window size based on its guess of network congestion. This window size is called the congestion window, abbreviated as cwnd. At any point in time, the maximum window size will be the smaller of these two windows: rwnd and cwnd.

IP provides no guarantees on the accuracy or timeliness of end-to-end packet delivery through the network, nor does it provide any data on the levels of traffic in the network or queue sizes at routers. TCP, therefore, relies on making assumptions on congestion based on observed behavior. If an RTO occurs or three duplicate acknowledgements are received, the protocol assumes a segment was lost. Segment loss often implies congestion, so TCP will decrease its transmission rate by reducing the size of its congestion window, cwnd. On the other hand, if TCP gets acknowledgements for sent packets then there is no packet loss and therefore no congestion. In this case, TCP will increase its transmission rate by increasing the size of its cwnd. This continuous approach of increasing the transmission rate until packet loss occurs and then decreasing it is called bandwidth probing. The connection tries to see if it can transmit faster, backing down when problems arise, and then trying again.

AIMD

TCP’s congestion control is called Additive Increase / Multiplicative Decrease (AIMD). While a connection is experiencing no packet loss, it will increase its congestion window by one MSS every round-trip time (RTT), hence increasing its transmission rate. This is an additive increase (linear increase). If cwnd was 15 segments and all 15 segments were sent and acknowledged, the window is then increased to 16 segments.

In practice, we don’t wait for all the segments in the window to be sent out before increasing the windows size. We increase the window size (cwnd) fractionally for each arriving ACK. The number of segments that fit into a window is cwnd/MSS. After that many segments have been delivered, we want to increase cwnd by one segment (MSS bytes). That means for each ACK (each received segment), we will increase cwnd by this fractional amount: MSS divided by segments_per_cwnd, or MSS / cwnd/MSS. The assumption is that we always have data to transmit so every segment will be of size MSS.

If TCP feels that it has congestion (because of lost segments), the congestion window is halved. This is a multiplicative decrease. AIMD is a necessary condition for TCP congestion control to be stable in the system as a whole and ensure that some connections do not end up monopolizing the network link.

States of TCP congestion control

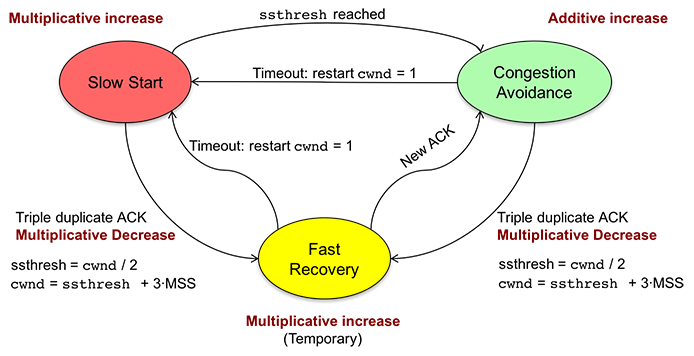

TCP operates in one of three states: Slow Start, Congestion Avoidance, and Fast Recovery.

If we start with a congestion window of one MSS and increase it linearly, it can take a long time before we reach an effective transmission rate. TCP Slow Start prevents this slow ramp at startup by increasing the cwnd size exponentially. The congestion window starts at one MSS and increases by one MSS with each received ACK, causing it to double every RTT. Slow Start starts off slowly but speeds up quickly. It continues to increase until cwnd reaches a threshold level, called ssthresh (slow start threshold). Then the protocol switches to Congestion Avoidance. Initially, ssthresh is effectively not set (set to a maximum value), so the rate of transmission continues to increase exponentially until a transmission times out waiting for an ACK. At this time, the protocol sets the threshold, ssthresh, to one half of the window size that resulted in the RTO and restarts the Slow Start process. This second time, it will ramp up to the threshold and then switch to the Congestion Avoidance state (unless packet loss occurs).

Congestion Avoidance is the normal state for TCP. The state is entered after Slow Start reaches its threshold, which is one half the last window size that experienced an RTO due to packet loss. Congestion avoidance continues to increase the window size (cwnd) but does so linearly: one MSS per RTT. This continues until one of two events occur. If an RTO occurs, then ssthresh is set to half of the cwnd (half of the current window size when the packet loss occurred), cwnd is set to one MSS, and the protocol moves to the Slow Start state. If three duplicate ACKs are received then instead of going all the way back to cwnd=1 and a Slow Start, the protocol switches to Fast Recovery.

Fast Recovery is entered only when three duplicate ACKs are received. The receipt of three or more duplicate ACKs is a sign that we lost a segment but data is still flowing between the sender and receiver since each of those ACKs was generated when a segment was received. They are duplicates because one needed segment was not received. Fast Recovery assumes that cwnd is the estimated system capacity. Instead of reducing data flow abruptly by going into Slow Start, ssthresh and the congestion window are cut to half of their current size (this is a multiplicative decrease). Fast Recovery loops, picking up every duplicate acknowledgement it receives and increases cwnd by 1 MSS each time it does so. This includes the three duplicates that caused TCP to enter this state. Once a non-duplicate acknowledgement is received, cwnd is set back to the threshold, ssthresh, and the state is switched to Congestion Avoidance (additive increase). Should a retransmission timeout occur, the same thing happens as anywhere else that an RTO occurs: ssthresh is set to half the window size, cwnd is set to one MSS, and TCP switches to the Slow Start state.

Summary of TCP congestion control

TCP congestion control is always operating in one of three states.

Whenever the congestion window, cwnd, is below the slow start threshold, ssthresh, the protocol is in the Slow Start state and the window increases exponentially.

Once cwnd reaches ssthresh, TCP enters the Congestion Avoidance state and grows linearly.

Whenever three duplicate ACKs are received, ssthresh and cwnd are halved and all duplicate ACKs are picked up before going back to the Congestion Avoidance state. Should an RTO occur in any state, ssthresh is set to cwnd/2, cwnd is set to one MSS, and TCP switches to the Slow Start state.

Network Layer

We looked at the transport layer (layer 3), which allowed applications to communicate with other applications over logical communication channels. The transport layer sits on top of the network layer (layer 4). The network layer provides host-to-host communication and is responsible for routing packets (called datagrams at the network layer) from a source host to a destination host.

A route is the path that a packet takes through the network. Routing is the process of moving the packet along the route. Routing algorithms figure out the route that the packet will take. A router is a host that forwards packets from an incoming link to a specific outgoing link as determined by the route. Forwarding is the process that a router uses to transfer a packet from an incoming link to the specific outgoing link. A router consults a forwarding table (also known as a routing table) that uses information in the packet headers to determine the outgoing link. The forwarding table is configured by routing algorithms.

Ideally, we might expect a variety of guarantees from the network. These include:

- Guaranteed (lossless) datagram delivery

- Time limits on datagram delivery

- In-order datagram delivery

- Guaranteed constant end-to-end bandwidth or the ability to offer a specific minimum bandwidth

- The ability to specify maximum jitter. Jitter is the variation in the latency of packet delivery.

- Security services, such as authenticating the source and destination as well as encrypting contents through the network.

The Internet Protocol, IP, gives us none of these. It provides best effort packet delivery but makes no guarantees on the reliability of delivery, bounds on delay, jitter, or packet order. Other network technologies, such as ATM (Asynchronous Transfer Mode), provide some of these capabilities. ATM is a virtual circuit (VC) network that provides logical connections at the network layer. All routers in the path are involved in setting up and maintaining the connection. For example, ATM’s CBR (Constant Bit Rate) service allows the connection to request a specific constant bandwidth and specify constraints on jitter and packet loss. The network will also guarantee in-order delivery.

A datagram network, such as IP, provides connectionless service at the network layer and relies on the transport layer to provide connection-oriented service. Only end hosts are involved in providing transport-layer service; the network layer itself is oblivious.

Virtual Circuit Networks

Before examining datagram networks, we’ll take a quick look at virtual circuit networks. Unlike datagram networks, virtual circuit networks require a connection setup phase where an end-to-end route is established and each router along the path agrees to participating in the path and commits necessary resources (e.g., buffers for queues) to ensure it can deliver the desired level of service being requested.

A host that initiates a connection request for a virtual circuit (a communication channel) identifies the virtual circuit with a number. As the path for a virtual circuit is set up, each router enters the input port/output port mapping for that path in its forwarding table and designates a virtual circuit number for the outgoing link (easier than allocating a virtual circuit number that may need to be unique globally). Unlike datagram routers, virtual circuit routers need to maintain connection state information. For communication, each packet only needs to contain a virtual circuit number. There is no need to specify the source or destination addresses since each forwarding table can look up the incoming interface and virtual circuit number, find the outgoing interface and change the virtual circuit number for the next link.

Datagram networks

With routers on datagram networks, there is no a priori setup of the route from source to destination. Indeed, the route may change during a communication session. Each datagram must be identified with the destination address of the endpoint. A router uses this destination address to forward the packet to the next network link. A forwarding table on a router allows it to determine the outgoing interface for a given datagram. IP addresses are 32 bits long (for IPv4; IPv6 addresses are 128 bits long). That gives 232 (or 2128 possible addresses. It is not feasible to have a table of over four billion entries. Instead, a forwarding table is based based on matching a prefix of a number of most significant (leftmost) bits in the address. The fewer bits in the prefix, the more addresses are matched for that prefix. Since the forwarding table may have a mix of longer and shorter prefixes, it uses a longest prefix matching rule so that longer, more specific, prefixes are tested prior to shorter, more general, prefixes.

Router Architecture

An IP router comprises two part: a control plane and a data plane. The control plane is responsible for the high-level software of the router. It runs a routing processor that implements the user interface, runs routing protocols, populates forwarding tables, implements the ICMP protocol, and controls queue behavior.

The data plane is responsible for packet forwarding. Its purpose is to move packets from the input port to the output port on a router as quickly as possible. Because of the need to move as manay as tens of millions of packets per second per port, the data plane is generally implemented in hardware. Note that on a router, a port refers to the input and output interfaces and has nothing to do with the use of the term port at the transport layer. A line card is the hardware that is responsible for implementing the input and output ports for a specific interface (e.g., an Ethernet interface). Because the router operates at layer 3 (the network layer), the data plane must process layers 1, 2, and 3 of the protocol stack:

- At layer 1, it has to retime and regenerate the signal at the output port.

- At layer 2, it has to create the new datalink headers and checksums for transmission onto the selected output port

- At layer 3, it has to extract the destination address, search the forwarding table to determine the output port, decrement a time-to-live count, regenerate a checksum, and forward the datagram to the output port.

The input port of a router implements the link-layer protocol to accept incoming packets (frames) on the physical interface of the line card. It decapsulates (extracts the data encapsulated in the frame) the layer 3 datagram to get the IP packet, validates the protocol version number, and updates the packet’s time-to-live (TTL) field. If the TTL field reaches 0, the packet is dropped and a message is sent to the routing processor in the control plane to send back an error packet. The input port then performs a lookup in the forwarding table (using a longest prefix match) to determine the required output port to which the packet needs to be delivered.

The output port of a router accepts outbound datagrams and encapsulates them with the appropriate link-layer headers (e.g., Ethernet). Like the input port, it implements the link-layer protocol to transmit these outgoing packets (frames) on the physical interface of the line card.

A packet is delivered from the input port to the output port via the router’s switch fabric. This is a general term for the architecture that allows the movement of packets between the line cards. This packet delivery may need to be delayed if the switch fabric cannot currently accept the packet or if another input port is currently moving data to that same output port. In that case, the packet will need to wait in a queue at the input port.

A router will have queues at both input and output ports. The output port maintains a queue of packets received from the switch fabric and transmits them using the link-layer protocol for the outbound interface.

Queues, of course, are finite in size and have the risk of overflowing and therefore causing packet loss. If the queue at the output port is full, there is no room for the packet and it will have to be dropped or some other packet in that queue will have to be deleted. The simplest algorithm is first come, first served (FCFS) queuing. A more sophisticated one may place a priority on the source, destination, protocol, or even a service level that may be embedded in the packet. Active Queue Management (AQM) refers to the algorithm in place to make the decision of which packet gets sent next and which packet gets dropped if the queue is full.

At the input port, packets may be queued if they cannot be forwarded to the output port quickly enough. Queuing is susceptible to head-of-the-line blocking. If a packet cannot be immediately forwarded to an output port (typically because that port or switching fabric is in use by another line card), not only is the packet delayed but all the packets queued behind it are blocked.

There are several router architectures and the choice of design largely depends on cost and the performance needs of packet forwarding. Every one of these architectures is in use.

- Conventional shared memory

- A conventional shared memory design is not different from that of a PC with each input/output pair of ports functioning as an input/output device. An incoming packet at a port generates a system interrupt. The operating system copies the packet from the transceiver to the system’s memory. The processor runs a network stack whose network layer searches the routing table to determine where the packet needs to be forwarded. The packet then travels back down the stack onto the desired output port. The limitation of this design is that only one memory operation can take place at a time. Moreover, the single CPU and system bus can become bottlenecks.

- Shared memory with distributed CPUs

- To alleviate the CPU bottleneck, this design incorporates a CPU on each line card. This gives each line card the intelligence to process the three layers of the stack, and determine the destination port of the packet without having to make use of the central CPU, shared bus, or main (shared) memory. The central CPU is responsible for the control plane: providing the administrative interface and creating the forwarding table. It pushes a copy of the forwarding table onto each line card. The shared memory and shared bus are used to allow the processor on one line card to copy the packet to another line card. The fact that the bus is shared and only one memory operation can take place at a time in shared memory can still result in a bottleneck for moving packets between line cards.

- Shared bus, no shared memory

- To alleviate having to use shared memory as an intermediate buffer in copying packets, this design allows one line card to send a packet directly to the memory of another line card using a common shared bus. The shared bus is now the performance bottleneck.

- Switched (crossbar) data path

- The final variation of architectures removes the shared bus and replaces it with a crossbar switch. This is a considerably more expensive option but allows one line card interface to switch directly to another line card interface without affecting the ability of other line cards to communicate.

Network Layer: Internet Protocol

The Internet Protocol (IP) has three components:

The IP protocol itself, which deals with addressing hosts, formatting datagrams, fragmenting and reassembling datagrams, and forwarding datagrams through routers.

Routing protocols, which determine network connectivity and how forwarding tables are configured at routers

The Internet Control Message Protocol (ICMP), which is a network-layer protocol for error and status reporting.

The IP datagram

The IP datagram comprises a 20-byte header, a variable-size options field after the header, and the payload, which will typically be the TCP or UDP segment. It contains a 32-bit source IP address, which identifies the sender, and a 32-bit destination IP address, which identifies the recipient.

A time-to-live (TTL) field is a counter that is designed to keep packets from circulating indefinitely in the network case forwarding tables accidentally create cycles. An IP datagram is typically initialized with a TTL of 60 or 64 and the TTL is decremented by one each time it enters a router. If the TTL reaches zero, the router will discard the packet.

A protocol field in the datagram identifies the higher-layer protocol that is contained within the data. Common values are 6 to identify the data as a TCP segment and 17 to identify the data as a UDP segment.

A header checksum field contains a 16-bit header checksum. This is calculated with the same formula as UDP and TCP checksums. Only the IP header is checksummed. A router has to recompute the checksum since the TTL field (and possibly the options field) will change with each network hop.

Fragmentation and reassembly

If a router needs to forward a packet to a link that has a smaller MTU (maximum transmission unit) than the incoming link, it is possible that the IP packet may be too large to be transmitted as a single frame (packet) on the outgoing link. To handle this situation, IP supports fragmentation. If a packet is bigger than the MTU of the outgoing link, a router can split the datagram into two or more fragments. Each fragment is a separate IP datagram with its own IP header. When the fragments reach their ultimate destination, the receiving host must reassemble them into a complete packet before passing them to the transport layer.

Fragmentation is controlled by two data fields and two one-bit flags in the IP header. A don’t fragment (DF) bit tells a router that fragmentation is not permitted on a datagram. This may result in the inability to route the datagram. If a router makes the decision to fragment the datagram, the datagram is split into two or more fragments. Each transmitted IP datagram contains an identification number in the identification field of the IP header. This is set when the original datagram is created and is typically an incrementing counter for each successive datagram. When a datagram is fragmented, the IP header of each datagram holding a fragment contains the same ID number. This tells the receiver that those datagrams are part of the same original datagram. Each fragment also contains a 13-bit fragment offset. This is a number that is multiplied by eight to indicate where the data in this fragment belongs in the reassembled datagram. The first datagram contains an offset of zero. Each datagram fragment except for the last one has a more fragments (MF) bit set to one. The last fragment will have MF=0 and the fragment offset along with the IP length field will indicate the length of the final reassembled datagram.

IP addressing

Our discussion focuses on IP version 4, which is the most widely deployed version of IP. IPv4 addresses are 32-bits long. Every interface on an IP network must have a unique IP address. If a host has two interfaces (e.g., Ethernet and 802.11 links), it will have one IP address for each link. If a router has 128 ports, it will have 128 IP addresses.

We earlier discussed that it would be impractical for addresses to be randomly assigned as each router would have to have to be able to look up an individual address in a forwarding table of over four billion addresses. Moreover, routing algorithms would need to manage information about the route of every single address on the Internet. Instead, groups of adjacent addresses are assigned to an organization. Rutgers, for example, has been assigned all addresses with the top 16 bits of 128.6. A router would need to know where to forward anything that starts with 128.6 rather than maintain a table of all the 216 (65,536) possible addresses that may start with 128.6. This ability to use one prefix to refer to a route that may span multiple sub-networks or hosts is called route aggregation.

A subnet (also called a subnetwork or a network) is a group of adjacent IP addresses that share a common prefix and are assigned to an organization. A subnet makes up a logical network that is connected to a router. For example, routers on the Internet needs to know how to route an address starting 128.6 to Rutgers. Subnets are expressed in CIDR (Classless Inter-Domain Routing) notation, whose format is a 32-bit IP address that comprises the identifying bits of the subnetwork followed by a slash an the number of bits that identify the subnetwork. For example, 128.6.0.0/16 means that the top (leftmost) 16 bits of the address 128.6.0.0 identify the subnetwork. The subnetwork logically divides an IP address into a network part (the bits that make up the subnet) and the host part (the bits that identify the host within the subnet).

A subnet mask (also called a netmask) is a bit mask that contains ones in the positions of the network bits of the address. For Rutgers, this means the top 16 bits will be one, resulting in a subnet mask of 255.255.0.0. A subnet mask is used to strip the host bits from the address to match prefixes in a forwarding table.

Subnetworks are hierarchical. An Internet service provider (ISP) will often be assigned large blocks of IP addresses by a Regional Internet Registry (RIR). Routers between ISPs will need to know which block of addresses is handled by which ISP. A specific ISP will allocate smaller blocks of IP addresses to organizations or lower-tiered ISPs. This is not relevant information outside of the ISP since outside routers only need to know how to reach one of the ISP’s routers. Routers within the ISP need to route to the organizations that were allocated those addresses. This process can continue iteratively. Within Rutgers, for example, are multiple networks that use blocks within the 128.6.0.0/16 allocation. For instance, the host aramis.rutgers.edu has an address of 128.6.4.2 and a netmask of 0xffffff00. This indicates that it is in a subnetwork that is defined by the prefix 128.6.4.0/24.

IP supports several special addresses: bit patterns that cannot be used as generic host addresses. An address of 255.255.255.255 represents a limited broadcast address. This is a broadcast address for the host’s network. Datagrams directed to this address will be delivered to all hosts on the directly-connected network but routers will not forward them to other networks (they are limited to the same local area network as the sender). An address with only the host bits set to one (e.g., 128.6.255.255) represents a directed broadcast address. Datagrams directed to this address will be routed to the specified subnet (if the router permits it) and delivered to all hosts on that subnet (they are directed to a specific subnet). Routers may be configured to forward these datagrams to ensure that they are delivered to subnets outside the directly-connected local area network.

Host configuration

A regular host on the Internet needs to know a few key parameters:

- Its IP address, so it can identify itself in the source address field of an IP header.

- Its subnet mask. Using the subnetmask along with the IP address, it can identify its own subnet and hence identify which addresses are on the local subnet and which ones need to be directed to a router.

- Its gateway. This is a router on the LAN and the default address for non-local addresses that are not in a host’s local routing table. A gateway is a simple router that routes datagrams between the LAN and another network.

- One or more domain name servers. It needs to know the address of at least one name server so that it can look up Internet domain names and find corresponding addresses.

These four parameters can be configured manually. Alternatively, the Dynamic Host Configuration Protocol (DHCP) can be used to do this automatically.

DHCP is a protocol to allow a client to get an IP address for itself as well as essential network configuration parameters. The challenge with developing such a protocol is that it has to work before the client has a valid address on the network. Hence, a conventional request-response protocol with source and destination addresses will not work. A requirement for DHCP is that the DHCP server has to be running on the same local area network as the host. If not, a DHCP Relay Agent must run that serves as a proxy and forwards requests and responses to the remote DHCP server.

DHCP uses limited broadcast messages (255.255.255.255). A client is allowed to send a limited broadcast and is capable of receiving one even if does not have an address assigned. DHCP works in four steps, with an acronym of D-O-R-A to describe them.

Discover. The client sends a limited broadcast DHCP Discover UDP message to port 67. This contains a random transaction identifier.

Offer. The server listens to broadcasts coming in on port 67. It gets the Discover message and responds back by sending a limited broadcast DHCP Offer UDP message to port 68. The response contains the following parameters:

- Matching transaction identifier

- Proposed IP address

- Subnet mask

- Lease time

Request. The client picks up the server’s Offer message. It compares the transaction identifier to ensure that the offer is not directed to another client. If there have been multiple DHCP servers and it received multiple offers, it selects the one it wants to accept and ignores the others. The client responds with a Request message that contains a copy of the parameters in the Offer.

ACK. The server associates the offered parameters with the host and sends back a DHCP ACK message acknowledging the association. The client can now configure its network with those parameters.

DHCP can be used in several scenarios:

Automatic allocation. DHCP can be used to assign a permanent IP address to a host.

Dynamic allocation. DHCP can be used to lease an address to a host. The host may use the address for a specified period of time. This allows the reuse of an address after it is no longer needed by the host. A Wi-Fi hotspot is a common example of this use of DHCP.

Manual allocation. An administrator can configure the DHCP server to assign a specific address in response to a DHCP Discover message. This is done by associating the host’s link layer address (e.g., Ethernet MAC address) with a specific IP address.

Network Address Translation (NAT)

In order to move datagrams between hosts on the Internet, each host interface needs to have a globally unique IP address. If this is not the case, routers will not be able to route the packet to that interface. The need for this, of course, creates a huge need for IP addresses. An organization with 10,000 hosts would need 10,000 IP addresses.

Network Address Translation (NAT) addresses this problem by allowing an organization to create a private IP address space within the organization while presenting one, or a small set of IP addresses to the outside Internet. As a packet flows through a NAT-enabled router, the router uses a NAT Translation Table to map a source {private-address, port1} to a {public-address, port2}. When packets flow back to the router from the outside, the router uses the NAT Translation Table to perform the inverse mapping of {public-address, port2} to {private-address, port1}.

To enable NAT, the gateway router has to look at, and possibly modify, the transport layer header since since a source port number may need to be changed to one that is not used by any other internal-external mapping at the router.

The private address space within the organization must contain a range of addresses that are not used by any hosts on the public Internet. Otherwise, there would be ambiguity as to which host is being addressed and where it is located. Hence private addresses are non-routable on the Internet and can only be used in internal networks. RFC 1918 defines three address blocks that can be used for these addresses.

Hosts in a NAT environment cannot accept incoming packets unless a host/port mapping has been established by an outgoing packet. As such, NAT is not particularly useful for servers but is incredibly useful for client machines.

ICMP