Access Control

Thinking about Security

Paul Krzyzanowski

October 13, 2024

Introduction

A critical function of an operating system is protection: controlling who is permitted to access various system resources and what they are allowed to do. Protection is the mechanism that enforces security policies.

An operating system is responsible for giving programs access to the resources they need when they run. Different users will have different access privileges to files, devices, and other system resources, and the operating system must enforce these access privileges. Users should not be able to read or modify data if a policy prohibits them from doing so. Processor time and system memory are also finite resources that need to be allocated across all processes in a fair manner that conforms to established policies.

Access control deals with the mechanisms and policies that define how users (subjects) can interact with system resources (objects), such as files, directories, devices, and system processes. The goal of access control is to ensure that only authorized users are allowed to perform specific actions on the system’s resources, based on policies.

Protection is a broader term that covers all mechanisms for defending a system against unauthorized access or mistakes. This includes access control and also other security measures such as encryption, authentication, data integrity checks, and safeguarding against threats like viruses and malware.

To enforce security policies and protect resources, we need to know who they apply to. This requires identifying, authenticating, and authorizing the user. Authentication is the process of getting and validating a user’s identity. For example, users might identify themselves with a user name and then authenticate themselves with a password. After that, the system can authorize the user’s access to a desired resource. Authorization determines whether an authenticated user is permitted access to a resource and is based on the security policy. A policy is the definition of what is or is not permissible in the organization. A protection mechanism enforces security policies.

Authentication associates a user name with a user ID, a unique numeric identifier. The operating system associates each running process with a user ID. Not all user IDs correspond to humans; some are assigned to system services, each of which may have its own ID (e.g., a web server or database). A process runs with the authority of the user that executed it (we’ll see that there can be exceptions to this, but they have to be explicit): the user ID is part of the context of the process. The operating system’s protection mechanism enables different users to have different levels of access to resources, the ability to run specific programs, and, in some cases, the ability to run certain programs with privileges other than their own.

At the operating system level, protection encompasses several components:

- User accounts: Users need to identify themselves and be authenticated so the system can be confident of who they are and apply the appropriate policies to their requested actions.

- User privileges: Each process, whether run by the system or by a user, runs with privileges based on its user ID. These privileges define its access rights: what resources the process can and cannot access. These resources are usually files, but they can also be devices or communication interfaces[1].

- Scheduling: The operating system is responsible for scheduling processes and may give the programs run by certain users priority over those of others. The scheduler allocates CPU time across all processes that are ready to run and tries to do so fairly. Some processes may also need to run with a higher priority to service certain system events or real-time tasks effectively. No process should be able to monopolize the processor, since that would starve other processes.

- Quotas: Some operating systems also enforce quotas, which limit how much file system space, memory, or CPU time a process is allowed to use. These limits must also be enforced.

The evolving need for access control

The very earliest computers did not have a need for access control. They were single-user systems that ran one program at a time (batch processing). The program and data were physically brought over to the computer, usually in the form of punched cards and magnetic or punched paper tape. System administrators were responsible for mounting the proper tapes, loading the cards, and running the requested programs.

Once disks came onto the scene, computers had access to files that were always available: a system administrator no longer had to retrieve and attach the media for a user’s program. Now, privacy (confidentiality) and integrity became concerns. You did not want somebody else reading or modifying your files unless you gave them explicit permission. In short, you wanted to protect yourself from curious users, malicious users, and accidental mistakes.

Later, interactive timesharing systems came onto the scene, and we had an environment where multiple processes from multiple users could run concurrently. The illusion of concurrency was created by rapidly switching the processor’s state between different programs: each would run for a few milliseconds before another process got to run. This environment made access to files easier and allowed multiple processes to access the same file simultaneously. Malicious software, for example, could keep a file open and monitor its changes, or make changes of its own. Access control was necessary: we needed operating system mechanisms to enforce defined policies on who could access what.

When PCs took over the world, access control took a back seat for a while. We were back to single-user systems: you were the sole user and administrator of your PC, and every file was yours. However, we soon realized that software had become less trustworthy. You now had to worry about whether that game you installed would modify your system files or upload your private files to a remote server. Moreover, you still had to worry about accidents and misbehaving programs. It turned out that access control was still important. Now we live in a world of PCs, mobile devices, IoT[2] devices, and remote servers (cloud computing), where we again share computers and storage. We also still have traditional timesharing systems, such as university computers and corporate servers. Program isolation and access control are still crucial.

Access control

Access control is about ensuring that authorized users can do what they are permitted to do … and no more than that. In the real world, we enforce access control with keys, badges, guards, and defined rules (policies). In the computer world, we provide access control through a combination of hardware, operating systems, and policies.

We also have environments where software provides services via a network. In these environments, software that receives network requests often has to implement its own policies to decide whether to carry out those requests. This includes web servers, databases, application servers, and other multi-access software. This environment is challenging from a security point of view because we no longer have centralized mechanisms or policies: it is up to each implementation to build its own.

Access control and the operating system

In its most basic sense, an operating system controls access to system resources. These resources include the processor, memory, files and devices, and the network. Most critically, the operating system needs to protect itself from applications. If it fails to do so, an application may, accidentally or maliciously, destroy the integrity of the operating system. A simple programming error could freeze the entire system or interfere with other programs. This kind of thing happened frequently in early versions of Microsoft DOS and Windows. The operating system and the underlying hardware are fundamental to the trusted computing base.

Applications must also be protected from each other so that one application cannot read another’s memory (enforce confidentiality) or modify its code or data (enforce integrity).

We also need to ensure that the operating system remains in control of the environment. A process should not be able to control the computer and keep the operating system or other processes from running.

Hardware timer

To make sure the operating system can always regain control, it relies on a programmable hardware timer. Traditionally, the operating system would request a periodic stream of interrupts, such as 100 per second. In an effort to be more power efficient, some operating systems now control the interrupts dynamically by setting the timer to go off at a specific time in the future. In either case, when the timer goes off, it generates a hardware interrupt that forces the processor to switch execution to a specific location in the kernel. This ensures that the operating system can always regain control of the processor and a process cannot prevent the operating system from doing so.

Applications cannot disable this timer. No matter what a process is doing, at some point in the near future, execution will jump to this interrupt handler that the operating system configured. This periodic interrupt is the basis of preemptive multitasking. Some operating systems in the past, such as the earliest versions of Microsoft Windows (before Windows 95), implemented cooperative multitasking, where a process had to relinquish control explicitly.

Process scheduler

The timer forces a periodic change of control to the operating system kernel. This allows the kernel to examine processes in execution and make scheduling decisions. Specifically, the operating system decides whether the currently running process(es) used their fair share of the CPU or whether it is time to give another process the chance to run for a while.

An important part of the scheduling algorithm is to avoid starvation, the phenomenon when certain processes never get a chance to run. If it were possible for a process to keep others from running, that would be an availability attack.

The scheduler attempts to prioritize threads based on some concept of “fairness”. This priority could be based on the user, a user-defined priority, the interactivity level of the process, real-time deadlines, or the process' past use of the processor. Often, it is a combination of these factors. A “fair” scheduler will provide graceful degradation of performance as more processes are run, ensure all get a chance to run, and not allow one process to adversely affect the execution of others.
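A minimal sketch of how a periodic timer enables fair sharing: the toy round-robin scheduler below gives each ready process at most one time slice (the quantum) before the simulated timer preempts it and moves it to the back of the ready queue. The process names, CPU demands, and quantum are made up, and real schedulers weigh many more factors (priority, interactivity, deadlines).

```python
from collections import deque

def round_robin(bursts, quantum):
    """Simulate timer-driven round-robin scheduling.

    bursts: dict mapping a (hypothetical) process name to the CPU time it needs.
    quantum: ticks a process may run before the simulated timer preempts it.
    Returns every slice that ran and each process's finish time.
    """
    ready = deque(bursts.items())  # ready queue in arrival order
    clock = 0
    timeline = []                  # (process, start, end) for each slice
    finished = {}
    while ready:
        name, remaining = ready.popleft()
        run = min(quantum, remaining)          # timer fires after `quantum` ticks
        timeline.append((name, clock, clock + run))
        clock += run
        remaining -= run
        if remaining > 0:
            ready.append((name, remaining))    # preempted: back of the queue
        else:
            finished[name] = clock
    return timeline, finished

timeline, finished = round_robin({"A": 5, "B": 2, "C": 3}, quantum=2)
print([name for name, _, _ in timeline])  # ['A', 'B', 'C', 'A', 'C', 'A']
print(finished)                           # {'B': 4, 'C': 9, 'A': 10}
```

A needs the most CPU time, yet it cannot keep B and C from running: every ready process gets the processor once per pass through the queue.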

Memory management unit

Most modern processors[3] have a memory management unit (MMU) as part of their architecture. This enables the kernel to provide each process with virtual memory: the illusion that the process has all the memory to itself. The MMU translates each memory reference into a physical address in real memory.

Because each process has its own address space (defined by a per-process page table), one process cannot access another process’ memory. With this mechanism, the operating system can provide complete memory isolation between processes. The virtual memory address space is divided into equal-size chunks, called pages. Each page table entry indicates the location of the page in physical memory as well as its access rights. The operating system can define access rights for each page, such as read-only, read-write, or execute. A page table entry may also be unmapped, which indicates there is no physical memory mapped to that location.

The operating system configures one page table per process, filling in entries in the table to point to the page frames in physical memory that were allocated to hold each page. Prior to running a process, the operating system’s scheduler sets the MMU to use that process’ page table. In Intel architectures, this is done by setting the cr3 register to the base address of the page table. So long as the operating system doesn’t configure the page tables of two different processes to point to the same page frames, one process will be unable to access the memory of another.
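The isolation argument above can be made concrete with a toy model (not how hardware walks multi-level page tables): each process gets its own dictionary standing in for a page table, and every access is translated and permission-checked. The page size, frame numbers, and rights strings are assumptions chosen for illustration.

```python
PAGE_SIZE = 4096  # 4 KiB pages: common, but an assumption here

class PageFault(Exception):
    """Raised when a simulated access hits an unmapped page or lacks permission."""

def translate(page_table, vaddr, access):
    """Translate a virtual address using one process's (toy) page table.

    page_table maps virtual page number -> (physical frame number, rights),
    where rights is a string such as "r-x"; access is "r", "w", or "x".
    """
    if access not in ("r", "w", "x"):
        raise ValueError("access must be 'r', 'w', or 'x'")
    vpn, offset = divmod(vaddr, PAGE_SIZE)
    entry = page_table.get(vpn)
    if entry is None:
        raise PageFault(f"page {vpn} is unmapped")
    frame, rights = entry
    if access not in rights:
        raise PageFault(f"{access!r} access not allowed on page {vpn}")
    return frame * PAGE_SIZE + offset

# Two hypothetical processes whose tables never point at the same frame.
proc_a = {0: (7, "r-x"), 1: (3, "rw-")}   # page 0: code, page 1: data
proc_b = {0: (9, "r-x"), 1: (4, "rw-")}

print(hex(translate(proc_a, 0x1010, "w")))  # 0x3010
print(hex(translate(proc_b, 0x1010, "w")))  # 0x4010: same virtual address, different frame
try:
    translate(proc_a, 0x0004, "w")          # write to a read-only code page
except PageFault as err:
    print("fault:", err)
```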

Kernel mode

Clearly, there are special operations that the operating system needs to perform. For instance, a process should not be able to change its memory map simply by creating a new page table and setting the processor’s cr3 register to point to this new page table. That would allow it access to any part of physical memory, including that used by the operating system and other processes.

Processors guard against this by supporting a special mode of execution called kernel mode (also called privileged, system, or supervisor mode).

This mode is called kernel mode because it is active when the operating system kernel is run. When the processor runs in kernel mode, it can access regions of memory that may have been restricted, modify the page tables and the processor’s page table register, set timers, define interrupt vectors (e.g., direct where CPU execution should go if a timer goes off or an Ethernet packet is received), and even halt the processor.

Normal processes, even those with elevated privileges, should not be allowed to execute these privileged instructions, since doing so would allow them to subvert the integrity of the kernel or other processes. They run in user mode. A process can switch from user mode to kernel mode execution in one of three ways:

- A trap instruction, also called a software interrupt[4]. This is the technique used to execute system calls, and processors often provide a syscall instruction that takes care of passing parameters. A trap instruction acts like a subroutine call: it pushes the current address onto the stack, switches the processor to kernel mode, and jumps to the address stored at the entry for that interrupt number in an interrupt vector table. The interrupt vector table is stored in protected memory and initialized at boot time so that all the interrupt vectors jump to locations in the kernel. When the kernel is done processing the interrupt, it issues a return-from-interrupt instruction that pops the address from the stack, switches the processor back to user mode, and continues execution in the user program.

- A program violation, such as accessing an unmapped area of memory, attempting to execute code from a memory location that does not have execute permission, or attempting to execute a privileged instruction. These violations are handled the same way as a trap instruction.

- A hardware interrupt, such as a timer expiration, a power button press, or the receipt of a network packet. These interrupts also map to entries in the interrupt vector table.
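The three entry paths share one mechanism, which the toy model below sketches: whatever the cause, the CPU saves where it was, switches to kernel mode, and runs whichever handler the kernel placed in the vector table; a privileged operation attempted in user mode becomes a fault that takes the same path. The vector numbers and handler names are illustrative assumptions, not any real processor's layout.

```python
SYSCALL, PROTECTION_FAULT, TIMER = 0x80, 13, 32  # illustrative vector numbers

class ToyCPU:
    """Toy model of entering the kernel through an interrupt vector table."""

    def __init__(self, vector_table):
        self.mode = "user"
        self.pc = 0x400000                 # pretend address in the user program
        self.saved = []                    # stands in for the saved return state
        self.vectors = dict(vector_table)  # set up by the kernel "at boot"

    def interrupt(self, vector):
        """Trap, program violation, or hardware interrupt: one path into the kernel."""
        self.saved.append((self.pc, self.mode))  # remember where to resume
        self.mode = "kernel"                     # switch privilege level...
        result = self.vectors[vector](self)      # ...and run only the kernel's handler
        self.pc, self.mode = self.saved.pop()    # return from interrupt
        return result

    def load_page_table_base(self, base):
        """A privileged operation: in user mode it faults instead of executing."""
        if self.mode != "kernel":
            return self.interrupt(PROTECTION_FAULT)
        self.page_table_base = base
        return "page table base updated"

def on_syscall(cpu):
    return f"system call handled in {cpu.mode} mode"

def on_fault(cpu):
    return "protection fault: privileged operation attempted in user mode"

def on_timer(cpu):
    return "timer: scheduler may switch processes"

cpu = ToyCPU({SYSCALL: on_syscall, PROTECTION_FAULT: on_fault, TIMER: on_timer})
print(cpu.interrupt(SYSCALL))            # system call handled in kernel mode
print(cpu.load_page_table_base(0x1000))  # the fault handler's message
print(cpu.mode)                          # user
```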

Rings of protection

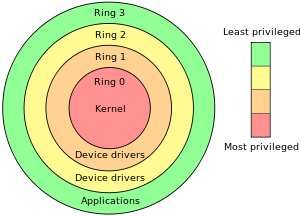

from https://en.wikipedia.org/wiki/Protection_ring

Today’s operating systems support these two modes of operation: user and kernel. Kernel mode has all the privileges while user mode has restricted privileges. However, there is no reason that only two modes of privilege need to exist.

When Multics, the predecessor of Unix, was designed, it had a ring structure of six[5] different privilege levels. Each ring was protected from higher-numbered rings, and the only way code running in a higher-numbered ring could access code in a lower-numbered ring was by making a special call to cross rings. These calls, with well-defined entry points, are called call gates. They behaved similarly to software interrupts: you can only call specific locations rather than jump anywhere in another ring. Only the lowest-level, most-secure functions ran in ring 0. The rest of the kernel ran in higher rings, and user processes ran in even higher rings.

The idea behind multiple rings was that the entire operating system should not run in the most privileged ring, ring zero. Intel adopted this idea of rings in its architecture: its last 16-bit processor and all of its 32- and 64-bit processors support four rings of protection.

Critical parts of the kernel would run in ring 0. Device drivers are still part of the kernel but should never need to do things like reconfigure the page table or modify the scheduler. They would run in rings one and two. User programs would run in the least privileged ring, three.

However, all modern operating systems only use two rings: ring 0 for the kernel and ring 3 for user applications. It’s also not desirable to design a system to use more rings since that will make it difficult to port the operating system to non-Intel architectures, such as ARM.

The introduction of hypervisors for virtual machines, which allow multiple operating systems to run concurrently, makes a good case for a level of protection even more privileged than the operating system kernel. Moving operating system kernels to a higher-numbered ring would have been disruptive. Instead, a new level, more privileged than ring zero, was created. It is informally called ring -1 and is used for running the hypervisor, the virtual machine monitor. Kernels still run in ring 0 and user programs run in ring three. Rings one and two are not used in today’s systems.

Subjects and objects

In order to determine who gets to do what, the first thing that the operating system needs is the user’s identity. Typically, the login program establishes that by getting the user’s credentials, which usually comprise a login name to identify the user and a password to authenticate the user. The system then associates a unique user ID number with that user and grants access to resources based on that ID.

When we look at access control, we talk in terms of subjects and objects.

A subject is the thing that needs to access the resources, or objects. Often, the subject is the user. However, the subject can also be a logical entity. For example, you might run the postfix mail server and create a user ID of postfix for that server even if it does not correspond to a human user. Having this distinct ID will enable you to configure access rights for the postfix server that are distinct from other users. You will also likely do this for certain other servers, such as a web server.

Processes run with the identity and authority of some user identifier. This identifies the subject or principal (or security principal). The terms are often used interchangeably, but there are slight differences. A subject is any entity that requests access to an object, often a user. A principal is a unique identity for a user. The subject resolves to a principal when you log in; your user ID is the principal. A subject might have multiple identities and be associated with a set of principals. Principals don’t need to be humans. The identity of a program or process may be a principal. We will not worry about the distinction in our discussions and will casually talk about users or subjects; just keep in mind that we really refer to the user ID that the operating system assigned to that subject.

An object is the resource that the subject may access. The resource is often a file but may also be a device, communication link, or even another subject. In most modern operating systems, devices are treated with the same abstraction as files. In POSIX[6] systems, the file system namespace contains names and permissions for devices; an attribute tells the system it’s not a data file.

Access control defines allowable operations of subjects on objects: it defines what different subjects are allowed to do or defines what can be done to different objects.

As we will soon see, most operating systems define what can be done with different objects, meaning that permissions are associated with each object.

Modeling protection: the access control matrix

A process should be allowed to access only objects that it is authorized to access. For example, your process might not be allowed to modify system files. It may have access to certain files on a disk but it cannot read the raw disk data since that would allow it to access all content in the file system.

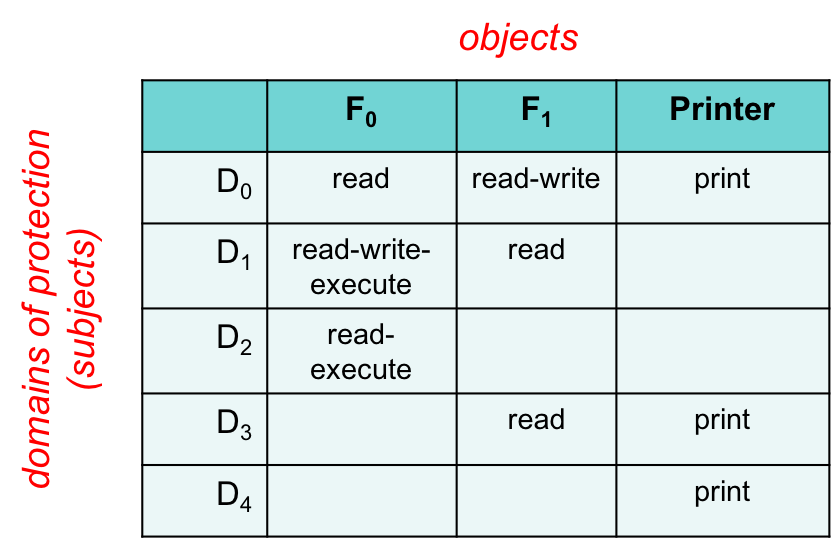

Each process operates in a protection domain. This protection domain is part of the context of the process – the data that the operating system tracks about the state of the process. A protection domain defines the objects the process may access and how it can access them.

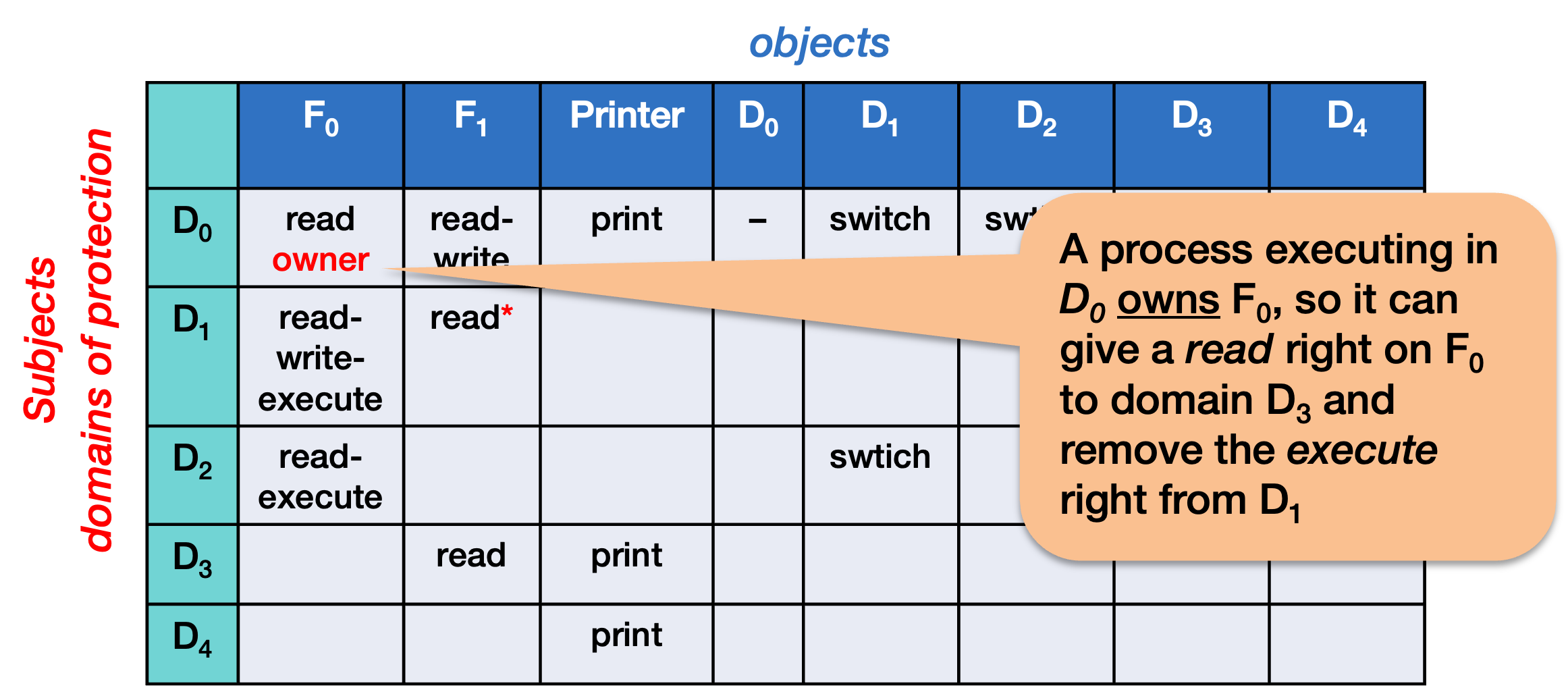

A way we can manage this information is with an access control matrix. In this matrix, each row represents a domain, which is a subject or a group of subjects. The columns represent all the objects that a subject may try to access. The cell at the intersection of each object and subject contains the access rights that subject has on that object. For example, a user in domain D2 has read-execute access to file F0.

An access control matrix is usually the primary abstraction that’s used when we discuss protection in computer security.
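As a minimal sketch, the matrix can be modeled as a nested dictionary indexed by domain and then object, with each cell holding a set of rights; a missing cell means no access. Only D2's read-execute right on F0 comes from the example above; the other entries are made up.

```python
# Access control matrix: rows are domains, columns are objects, cells are sets of rights.
matrix = {
    "D0": {"F0": {"read"}, "F1": {"read", "write"}},   # hypothetical entries
    "D1": {"F2": {"read", "write", "execute"}},        # hypothetical entries
    "D2": {"F0": {"read", "execute"}},                 # from the example in the text
}

def allowed(matrix, domain, obj, right):
    """Return True if `domain` holds `right` on `obj`; a missing cell grants nothing."""
    return right in matrix.get(domain, {}).get(obj, set())

print(allowed(matrix, "D2", "F0", "execute"))  # True
print(allowed(matrix, "D2", "F0", "write"))    # False
print(allowed(matrix, "D3", "F0", "read"))     # False: unknown domain
```

Storing only non-empty cells keeps this sketch small, but it does not change the underlying problem of a matrix that grows with every new user and file.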

Additional rights

In practice, we will need some controls beyond basic access rights to perform operations on objects.

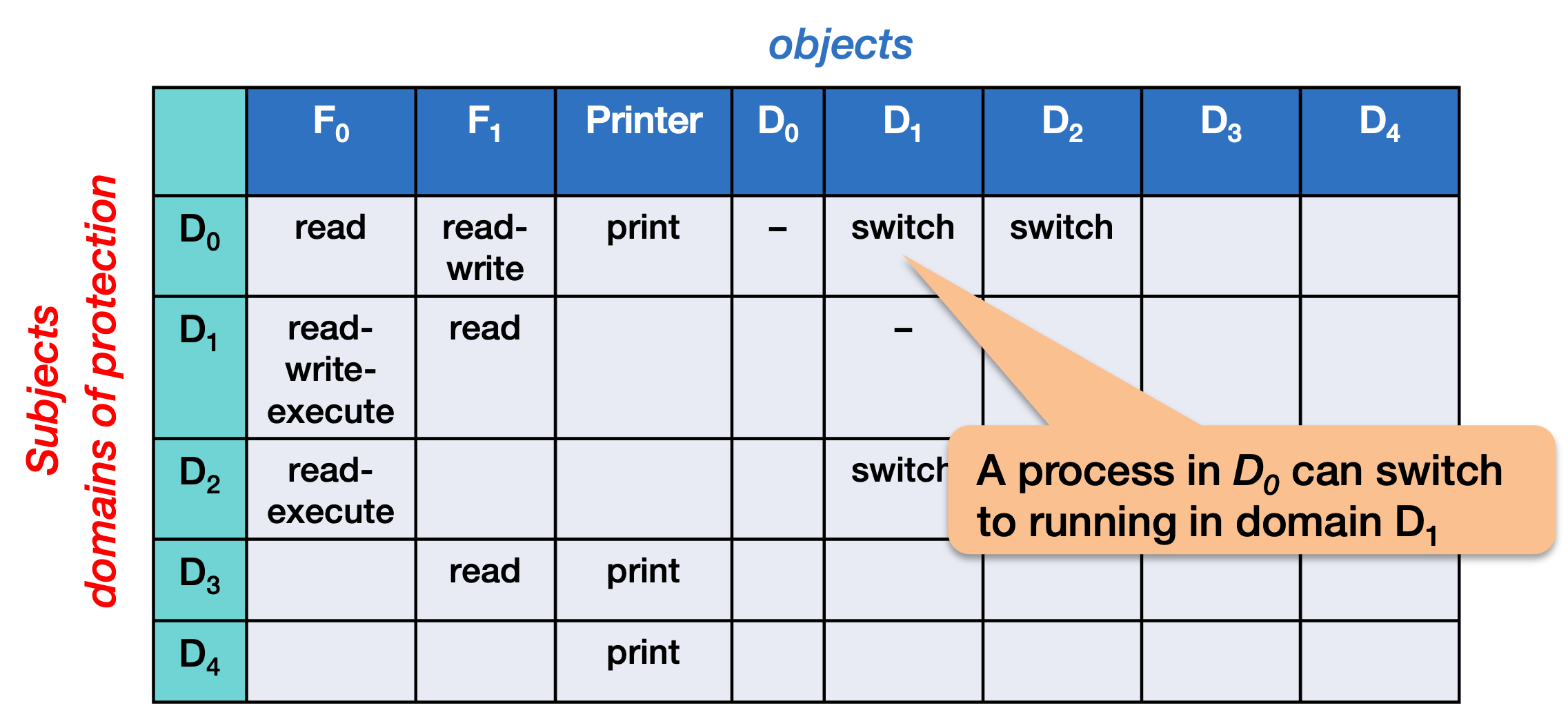

Domain transfers

A domain transfer allows a process to run with the access rights of a different domain. It makes switching from one domain to another a configurable policy.

One reason that a right such as this is important is that it makes it easy to implement a login process. The login process can get the user’s credentials, authenticate the user, and then execute the shell (or other startup program) within that user’s domain.

In this example, a process in domain D0 can switch itself to running in domain D1.

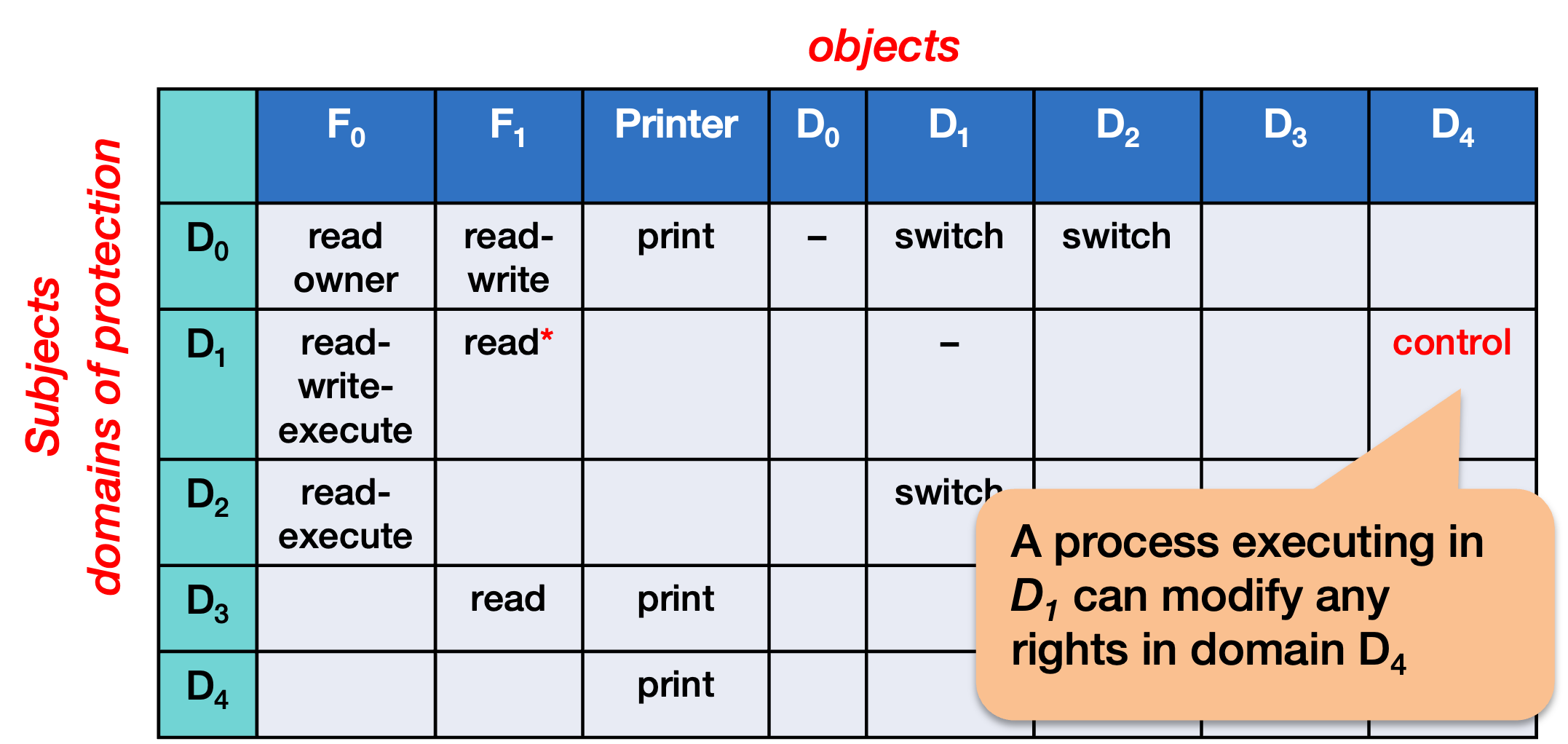

Note that we expanded our access control matrix to include the list of domains as objects as well as subjects. With this expanded matrix, one domain can get permission to do something to another domain.

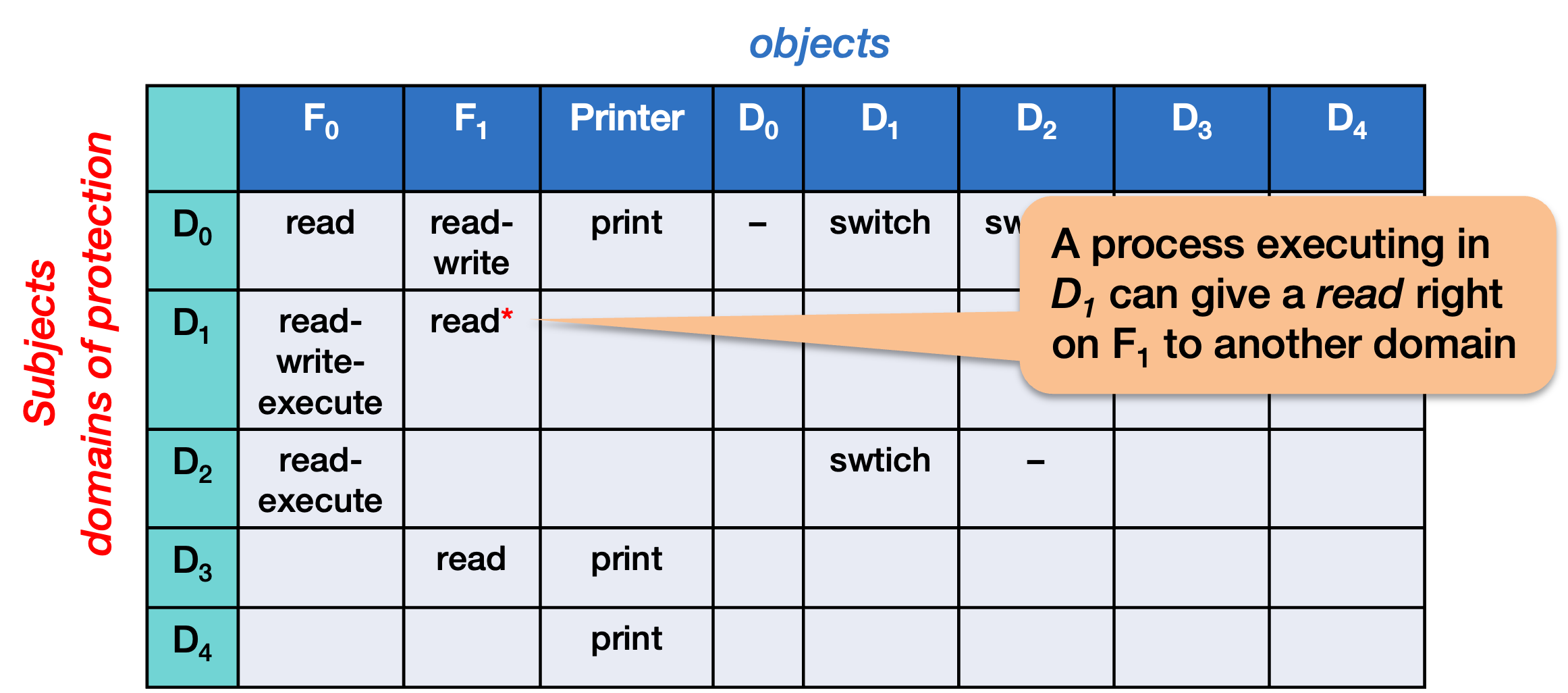
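Treating domains as columns lets an ordinary matrix lookup govern domain transfers. In this sketch, a hypothetical "switch" right in cell (D0, D1) lets a process in D0 move into D1, matching the example above; the reverse move is refused because D1 holds no such right on D0. The file entries are made up.

```python
matrix = {
    "D0": {"F0": {"read"}, "D1": {"switch"}},   # D0 may transfer into D1
    "D1": {"F1": {"read", "write"}},            # D1 has no switch right on D0
}

class Process:
    def __init__(self, domain):
        self.domain = domain  # the protection domain is part of the process context

def switch_domain(matrix, proc, target):
    """Move `proc` into domain `target` only if its current domain holds "switch" on it."""
    if "switch" not in matrix.get(proc.domain, {}).get(target, set()):
        raise PermissionError(f"{proc.domain} may not switch to {target}")
    proc.domain = target

p = Process("D0")
switch_domain(matrix, p, "D1")   # allowed: p now runs with D1's rights
print(p.domain)                  # D1
try:
    switch_domain(matrix, p, "D0")
except PermissionError as err:
    print(err)                   # D1 may not switch to D0
```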

Copy rights

A delegation of access permission, also called a copy right, allows a user to grant and revoke specific permissions to other domains. For example, a process executing in domain D1 can be allowed to give a read right on file F1 to any other domain.

This creates a mechanism where a user might not own a file, but can still be granted the permission of giving other people access to that file.

Object Owner

An object owner is an attribute that identifies a specific domain as owning a specific object. The owner has the power to control how the object is used by itself and others. It can add or remove any rights for that object for any domain.

When you identify a subject as the owner of an object, it will be allowed to set or delete access rights on that object for any other domain (that is, any other row).

In this example, a process executing in D0 owns the file F0, so it can grant a read right on F0 to domain D3 and remove the execute right from D1.

Domain control

We can also enable controls across a row. If the access right a[i, j] contains a control right (Figure 6) then a process executing in domain i can change access rights of any objects for domain j. This domain control permission allows a process running in one domain to be able to change any access rights for another domain. If you have domain control for a specific domain, it means that you have the power to change the permissions for all the objects for that domain.

In this example, a process executing in D1 can modify permissions for accessing objects by domain D4.

Note that these features are generally not available in any one system but are, in theory, possible and the access control matrix provides a framework to express and visualize these operations.
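The three rights that let one domain edit the matrix can be sketched with a single permission check. The sketch marks a copyable right with a trailing asterisk (read*), a notation borrowed from common textbook presentations rather than from the text above, and stores owner and control as ordinary rights in the matrix. It replays the examples above: D1 copies its read right on F1, D0 uses its ownership of F0, and D1 uses its control right over D4. All other entries are made up.

```python
matrix = {
    "D0": {"F0": {"owner", "read", "write"}},
    "D1": {"F0": {"execute"}, "F1": {"read*"}, "D4": {"control"}},
    "D3": {},
    "D4": {"F2": {"write"}},
}

def may_change(matrix, actor, target, obj, right):
    """Can domain `actor` add or remove `right` on `obj` for domain `target`?"""
    actor_row = matrix.get(actor, {})
    on_obj = actor_row.get(obj, set())
    return (
        right + "*" in on_obj                          # copy right for this right
        or "owner" in on_obj                           # actor owns the object
        or "control" in actor_row.get(target, set())   # actor controls target's row
    )

def set_right(matrix, actor, target, obj, right, present=True):
    """Grant (present=True) or revoke (present=False) a right after checking may_change."""
    if not may_change(matrix, actor, target, obj, right):
        raise PermissionError(f"{actor} may not change {right!r} on {obj} for {target}")
    cell = matrix.setdefault(target, {}).setdefault(obj, set())
    if present:
        cell.add(right)
    else:
        cell.discard(right)

set_right(matrix, "D1", "D2", "F1", "read")                    # copy: D1 holds read* on F1
set_right(matrix, "D0", "D3", "F0", "read")                    # owner: D0 owns F0
set_right(matrix, "D0", "D1", "F0", "execute", present=False)  # owner revokes D1's execute
set_right(matrix, "D1", "D4", "F2", "write", present=False)    # control: D1 controls D4
```

The copied right is plain read, not read*, so D2 cannot pass it on again; whether delegated rights should themselves be delegable is a policy decision.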

Implementing an access control matrix

The access control matrix is already starting to get messy: we modeled domains as objects and had to combine basic object access permissions with additional rights that apply to domains and object ownership.

Even with these enhancements, an access control matrix may not do everything we need. For example, if I run a program, it runs with the authority of my domain (my user ID) and can access only the files that I am allowed to access. Sometimes, however, we might want a program to have extra privileges. For example, a program might need to read some configuration files that I shouldn’t have access to. The program’s author trusts the program, but not the user, to read and possibly modify this data. As another example, consider a database program that must manipulate its files; we don’t want users to be able to access those files directly. Or a user might need to add data to a print queue that they don’t own and shouldn’t be able to manipulate.

Adding domains as objects is not enough to accomplish this. We don’t want the program to run under a new domain and forget who the original user was. For instance, a program that adds data to a print queue should be able to ensure that it reads only the user’s own files as input. A database needs to manipulate its objects with the authority of the user, even if it has extra privileges to modify the underlying files that implement it.

To fully model what we want in this case, we will need a 3-D access control matrix where we specify subjects, objects, and programs. Now we can grant special access to files only when the user is running a certain program. Otherwise, the user cannot access that object. Conversely, one might want to run a program that is not trustworthy and should be granted fewer access permissions.

One solution to get this specific behavior is to give an executable file a temporary domain transfer. When run, the application will assume the privileges of another domain but still remember who the original user is. We will see that this is solved in POSIX systems through the setuid (set user ID) attribute.
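The idea of a temporary domain transfer that still remembers the original user can be sketched by giving each process two identities: the real user who ran it and the effective user whose authority it carries. This mirrors the real/effective user ID split that POSIX uses for setuid, but the program, user, and file names below (and the permission tables) are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Program:
    name: str
    owner: str
    setuid: bool = False

@dataclass
class Credentials:
    real_user: str       # who actually ran the program
    effective_user: str  # whose authority the program currently carries

def run(invoker, program):
    """A setuid program runs with its owner's authority but remembers the invoker."""
    effective = program.owner if program.setuid else invoker
    return Credentials(real_user=invoker, effective_user=effective)

# Hypothetical permission tables for the print-queue example in the text.
readers = {"alice_report.txt": {"alice"}, "bob_notes.txt": {"bob"}}
queue_writers = {"spooler"}

def submit(creds, filename):
    # The real user decides which input files may be printed...
    if creds.real_user not in readers.get(filename, set()):
        raise PermissionError(f"{creds.real_user} may not read {filename}")
    # ...while the effective user is what allows writing to the protected queue.
    if creds.effective_user not in queue_writers:
        raise PermissionError(f"{creds.effective_user} may not write to the print queue")
    return f"queued {filename} for {creds.real_user}"

print_tool = Program("print_tool", owner="spooler", setuid=True)
creds = run("alice", print_tool)
print(submit(creds, "alice_report.txt"))   # queued alice_report.txt for alice
```

Without the setuid flag, alice could not write to the queue at all; with it but without the remembered real user, she could print bob's notes.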

Access control lists

Even when a basic access matrix satisfies most of our needs, implementing it becomes unwieldy. It would be a huge table with dynamically changing rows (each time users or groups are added or removed) and columns (whenever files or devices are added or deleted). Even on personal computers, this table can easily contain several billion entries, making it impractical to store and access efficiently.

The access control matrix can easily become far too large to store in memory, yet we want lookups to be efficient. We’d like to avoid extra block reads from the disk just to get access permission information for a user on a file. In practice, storing the full access control matrix directly is not feasible.

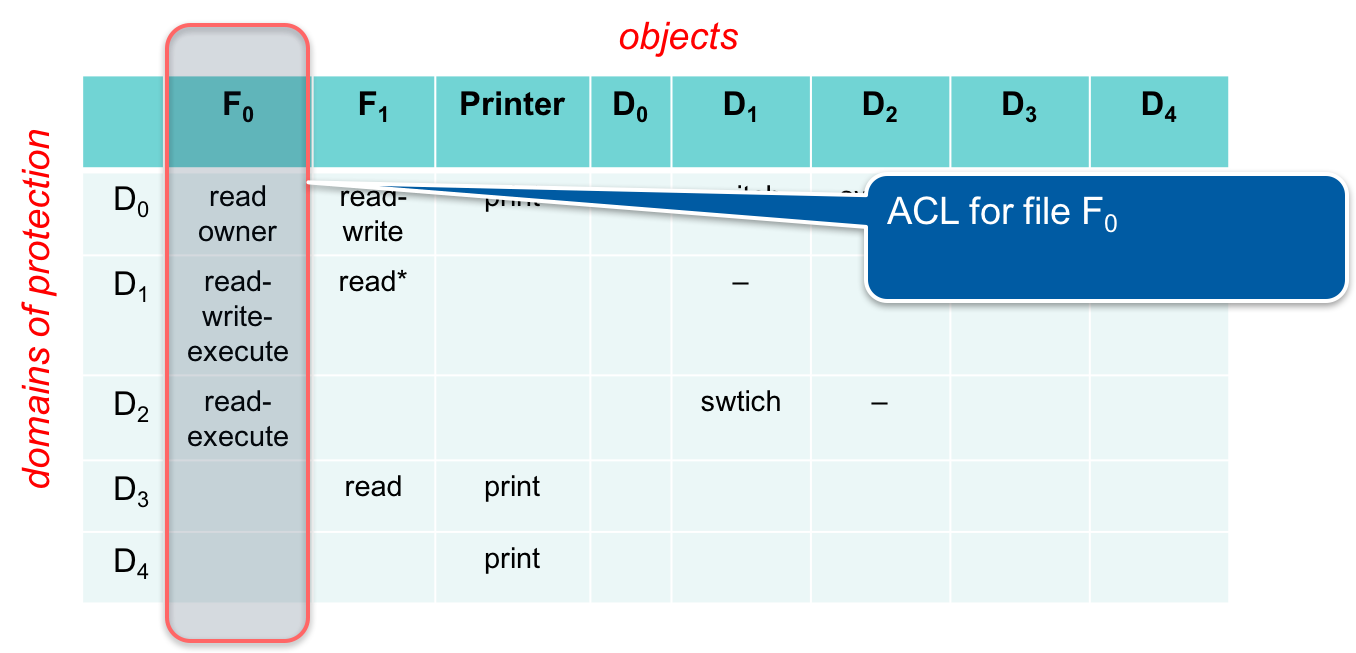

Instead, we can break apart the access control matrix by columns. Each column represents an object, and we can store the permissions of each subject with each object.

When the operating system accesses the object, it also accesses the list of access permissions for that object. Adding new permissions is done on an object-by-object basis.

When we open file F0, we can at that time get the access control list for that file, telling us what each subject is allowed to do to that file. This is called an access control list (ACL). All current operating systems use access control lists.

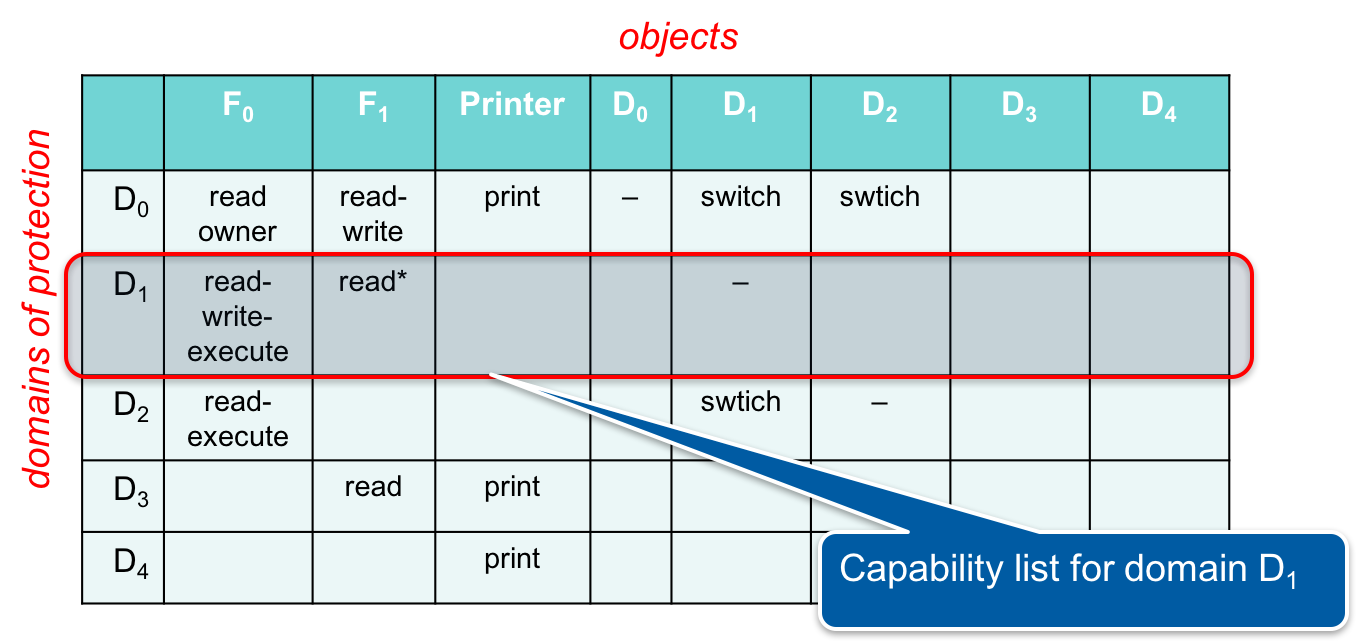
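Slicing the matrix by columns is easy to sketch: each object ends up with its own list of (domain, rights) entries, and an access check consults only that one list. The matrix contents are hypothetical (apart from D2's read-execute right on F0), and a real file system would store the list with the file's metadata rather than in a Python dictionary.

```python
matrix = {
    "D0": {"F0": {"read", "write"}, "F1": set()},
    "D1": {"F1": {"read"}},
    "D2": {"F0": {"read", "execute"}},
}

def acls_from_matrix(matrix):
    """Split a domain -> object -> rights matrix into one ACL per object (its column)."""
    acls = {}
    for domain, row in matrix.items():
        for obj, rights in row.items():
            if rights:  # empty cells are simply not stored
                acls.setdefault(obj, []).append((domain, set(rights)))
    return acls

def check_acl(acls, subject, obj, right):
    """Consult only this object's ACL; subjects not listed get no access."""
    for entry_subject, rights in acls.get(obj, []):
        if entry_subject == subject:
            return right in rights
    return False

acls = acls_from_matrix(matrix)
print([domain for domain, _ in acls["F0"]])   # ['D0', 'D2']
print(check_acl(acls, "D2", "F0", "execute")) # True
```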

Capability lists

An access control list associates a column with each object. That is, each object stores a list of access permissions for all the domains (subjects). Another way of breaking up the access control matrix is by rows. We can associate a row of the table with each domain (subject). This is called a capability list. A capability is the set of operations the subject can perform on a specific object. Each subject now has a complete list of capabilities.

Before the operating system performs a request on an object, it checks the subject’s capability list to see whether the requested access is allowed for that object. A process, of course, cannot freely modify its capability list unless it has a control attribute for all objects in the domain or is the owner of a specific object.

Capability lists have the advantage that, because they are associated with a subject, the system does not have to read any additional data to check access rights each time an object is accessed, as it has to do to read an access control list. It is also very easy to delegate rights from one user to another: simply copy the capability. If a user must be deleted, that is also easy to handle: simply delete that user’s capability list; there is no need to go through the access control list of every file in the file system.
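Splitting the matrix by rows instead can be sketched as follows: each domain keeps its own capability list, so an access check never touches the object, delegation is a copy, and removing a user drops one entry. The last helper shows what this layout costs when access to a single object must change: every subject's list has to be visited. Domain and object names are hypothetical, and a real capability system would also control who is allowed to delegate.

```python
matrix = {
    "D0": {"F0": {"read", "write"}},
    "D1": {"F1": {"read"}},
    "D2": {"F0": {"read", "execute"}, "F1": {"read", "write"}},
}

def capabilities_from_matrix(matrix):
    """Split the matrix by rows: each domain keeps its own capability list."""
    return {d: {obj: set(r) for obj, r in row.items() if r} for d, row in matrix.items()}

def check_cap(caps, subject, obj, right):
    """Consult only the subject's own list; no per-object metadata is read."""
    return right in caps.get(subject, {}).get(obj, set())

def delegate(caps, giver, receiver, obj):
    """Delegation: copy the giver's capability for `obj` to the receiver."""
    caps.setdefault(receiver, {})[obj] = set(caps[giver][obj])

def revoke_object(caps, obj):
    """Removing all access to one object requires scanning every capability list."""
    scanned = 0
    for row in caps.values():
        row.pop(obj, None)
        scanned += 1
    return scanned

caps = capabilities_from_matrix(matrix)
delegate(caps, "D1", "D0", "F1")   # D0 now also holds read on F1
del caps["D1"]                     # deleting a user: drop that user's list
print(revoke_object(caps, "F0"))   # 2: every remaining list had to be visited
```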

In practical use, the disadvantages of capability lists in operating systems outweigh their advantages. Changing a file’s access permissions on a global level is incredibly difficult: you have to go through every user’s capability list and modify it. There is no easy way to find all users with access to a resource short of checking every user’s capability list. Capability lists have generally failed to attain mainstream use. However, they were deployed in several operating systems, including:

- Cambridge CAP system: This is one of the earliest and most significant examples of a capability-based computer system, developed in the 1970s at the University of Cambridge. All resources are controlled through capabilities, which are tokens or references that encapsulate both the identity of the object (e.g., memory segments, files, devices) and the allowed operations (e.g., read, write, execute) on that object.

- IBM AS/400: The AS/400 operating system (now known as IBM i on IBM Power Systems) is not a pure capability system but is fully object-based: everything (files, programs, devices, even users) is treated as an object. A capability-like mechanism called authority lists provides a way to define what actions a user or process can perform on an object. Each user or process has a list of authorities (similar to a capability list) that defines their access rights to various system objects.

- KeyKOS and its successors, EROS and CapROS: These are capability-based operating systems where every object in the system can only be accessed through capabilities. Each process or subject has a capability list that defines what resources (files, memory, devices, etc.) it can access and what operations it can perform on them.

- seL4 Microkernel: This is a secure microkernel where access control is entirely based on capabilities. Every resource (like memory or device access) is managed through capabilities, making seL4 highly secure and suitable for critical applications like embedded systems in defense or aerospace.

- Google Fuchsia OS: Fuchsia, an experimental OS developed by Google, uses a microkernel architecture called Zircon. While not purely a capability-based OS, Fuchsia’s resource management system is built around capability-like structures for isolating processes and managing access to system resources.

The principle of capabilities is also used in Android’s Binder IPC: the Binder inter-process communication (IPC) mechanism uses capability-based security. Each application process has a list of capabilities that govern what system services or resources it can access. For example, an app might request a capability to use the camera or access the network.

Microsoft incorporated a form of capabilities into Windows (since Windows 2000). The primary access control mechanism on Windows is Access Control Lists (referred to as Discretionary Access Control Lists, or DACL). However, systems need to be able to support centralized account management and authentication via Active Directory servers. Windows uses security tokens to represent the privileges and rights of a user or process. A security token in Windows can be seen as somewhat analogous to a capability in that it defines what a user or process can do in the system. When a process is created, the security token associated with it specifies what resources it can access and what operations it can perform. However, unlike capabilities, these tokens are not passed between processes in the same decentralized way that capabilities are in pure capability-based systems.

Although capabilities haven’t become a part of major operating systems for managing access control to resources, the concept of a capability list is useful with networked services. You often connect to servers that do not know you and where you do not have an account. In such cases, authorization and single sign-on services such as OAuth and Kerberos can provide an unmodifiable message stating who a user is and what operations they are allowed to perform.

The Unix (POSIX) access model

We will first look at how POSIX systems manage permissions. This includes the set of systems that have been derived from or inspired by the original Bell Labs version of UNIX, including Linux, Oracle Solaris, FreeBSD, NetBSD, OpenBSD, macOS, Android, iOS, and many other lesser-known systems.

Access and sharing files

In a shared environment, you would like to be able to set access rules so that multiple people, say members of a project, can share access to the same file. Each file (object) has an owner and a group associated with it. Each user (subject) has a unique user ID and may belong to one or more groups.

File permissions are expressed in the sequence

rwxrwxrwx

where:

- the first rwx group represents read, write, and execute permissions for the user (owner),

- the second rwx group represents read, write, and execute permissions for the group, and

- the last rwx group represents read, write, and execute permissions for everyone else.

For example, running the ls command with the “long” flag to inspect /bin/ls (the file containing the ls command) shows:

$ ls -l /bin/ls

-rwxr-xr-x 1 root wheel 38624 Dec 10 04:04 /bin/ls

This shows that the file is owned by the user root and belongs to the group wheel. Access permissions are {read, write, execute} for the owner and {read, execute} for the group and for everyone else. The leading - is not a permission flag but indicates the file type: a plain file (-), directory (d), block device (b), character device (c), named pipe (p), or symbolic link (l).
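The mapping from a file's mode bits to the string that ls prints can be sketched in C. This assumes the standard <sys/stat.h> macros; the function name mode_to_string is hypothetical, and the setuid, setgid, and sticky bits are ignored for simplicity.

```c
#define _XOPEN_SOURCE 700
#include <sys/stat.h>

/* Render the type and permission bits of st_mode the way `ls -l` does,
 * e.g. "-rwxr-xr-x". buf must hold at least 11 bytes.
 * Special bits (setuid, setgid, sticky) are ignored in this sketch. */
void mode_to_string(mode_t mode, char buf[11])
{
    /* File type: the leading character of ls -l output. */
    if (S_ISDIR(mode))       buf[0] = 'd';
    else if (S_ISBLK(mode))  buf[0] = 'b';
    else if (S_ISCHR(mode))  buf[0] = 'c';
    else if (S_ISFIFO(mode)) buf[0] = 'p';
    else if (S_ISLNK(mode))  buf[0] = 'l';
    else if (S_ISSOCK(mode)) buf[0] = 's';
    else                     buf[0] = '-';

    /* Owner, group, and other permissions: three bits each. */
    const mode_t bits[9] = { S_IRUSR, S_IWUSR, S_IXUSR,
                             S_IRGRP, S_IWGRP, S_IXGRP,
                             S_IROTH, S_IWOTH, S_IXOTH };
    const char *letters = "rwxrwxrwx";
    for (int i = 0; i < 9; i++)
        buf[i + 1] = (mode & bits[i]) ? letters[i] : '-';
    buf[10] = '\0';
}
```

Passing the st_mode field that stat() returns for /bin/ls would produce the -rwxr-xr-x string shown above.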

The kernel checks access permissions only when the file is first opened, and it checks them in the following order:

if you are the owner of the file
    only the owner permissions apply
else if you are in the group that the file belongs to
    only the group permissions apply
else
    the "other" permissions apply

Hence, if I have the following file:

----rw---- 1 paul localaccounts 6 Feb 4 19:15 testfile

I cannot read it even though I am a member of the localaccounts group and that group has read-write access to the file.
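A minimal C sketch of this decision, assuming the caller supplies the file's struct stat plus its own user ID and list of group IDs. The names posix_may_access and WANT_R/WANT_W/WANT_X are hypothetical, and root's override is deliberately left out. The key point is that exactly one class of permission bits is consulted.

```c
#define _XOPEN_SOURCE 700
#include <stdbool.h>
#include <stddef.h>
#include <sys/stat.h>
#include <sys/types.h>

/* Requested access, using the same bit values as each rwx triple. */
enum { WANT_R = 4, WANT_W = 2, WANT_X = 1 };

/* Pick exactly ONE class (owner, group, or other) and use only its bits.
 * groups[] holds the caller's primary and supplementary group IDs.
 * Root's ability to bypass these checks is not modeled here. */
bool posix_may_access(const struct stat *st, uid_t uid,
                      const gid_t *groups, size_t ngroups, int want)
{
    mode_t perm;

    if (st->st_uid == uid) {
        perm = (st->st_mode >> 6) & 7;          /* owner bits only */
    } else {
        bool in_group = false;
        for (size_t i = 0; i < ngroups; i++)
            if (groups[i] == st->st_gid)
                in_group = true;
        perm = in_group ? (st->st_mode >> 3) & 7  /* group bits only */
                        : st->st_mode & 7;        /* "other" bits */
    }
    return (perm & (mode_t)want) == (mode_t)want;
}
```

For the testfile above (mode ----rw----), the function denies read access to its owner even when the owner belongs to the file's group, while another member of that group is granted read-write access.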

Execute permission

Note that execute permission is distinct from read permission. For example, you may have execute-only access to a file

$ ls -l secretfile

-rwx--x--x 1 root staff 8492 Feb 4 19:22 secretfile

$ cat secretfile

cat: secretfile: Permission denied

and not be able to read it, but the operating system will load it and execute it if you run it. Without read access to the file, you cannot copy it or inspect its contents.

Microsoft Windows

Windows provides the same read, write, and execute permissions but also adds specific per-file permissions for delete, change permission, and change ownership.

In addition, users and resources can be partitioned into domains. Domains in Windows refer to administrative boundaries in Active Directory (AD). In a domain, users, groups, and computers are centrally managed, and domain controllers authenticate users and enforce security policies across the network. For instance, the human resources department may manage users while individual departments may manage connected devices, such as printers. Trust can be configured to be inherited in one or both directions. Department domains may trust the user domain but the user domain may choose not to trust the department resources domains.

Unix directories

Directories are implemented as files. They just happen to be files that contain a list of {name, inode number} pairs. Recall that an inode is the data structure in a file system that contains all the information about a file except for its name, and that the inode number identifies it[7].

However, directory permissions have slightly different meanings:

- write permission means that you have rights to create and delete files in the directory.

- read permission means that you have rights to list the contents of a directory

- execute permission means that you have rights to search through the directory. This differs from read permission: with execute-only access, you cannot see what files are in the directory, but the operating system is still willing to access the directory to resolve a pathname.

We can see that directory permissions can give you permission to search through the directory when opening files even if you cannot list its contents. If you have write (and search) access to a directory, you can delete any file within that directory, even if you do not have write access to the file itself, unless the directory has the sticky bit set (as /tmp typically does), in which case only the file's owner, the directory's owner, or root may delete it. Conversely, if you do not have write access to the directory, you cannot delete any file within it, even if you have write access to the file. Your only option would be to open the file and destroy the contents by overwriting them or truncating the file, but you cannot make the file itself go away.
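The following C sketch demonstrates the point about deletion: a file created with mode 0400 (read-only for its owner) can still be removed, because unlink() checks the permissions of the directory, not of the file. The scratch directory under /tmp and the function name are assumptions for illustration.

```c
#define _XOPEN_SOURCE 700
#include <fcntl.h>
#include <stdio.h>
#include <stdlib.h>
#include <sys/stat.h>
#include <unistd.h>

/* Create a file we cannot write (mode 0400) inside a fresh directory we
 * own and can write, then delete it. unlink() needs write and search
 * permission on the DIRECTORY, not on the file, so this succeeds.
 * Returns 0 if the file was deleted, -1 otherwise. */
int delete_readonly_file_demo(void)
{
    char dir[] = "/tmp/dirperm-XXXXXX";   /* hypothetical scratch location */
    char path[64];

    if (mkdtemp(dir) == NULL)
        return -1;
    snprintf(path, sizeof path, "%s/readonly.txt", dir);

    /* The mode applies to later opens; this creating open still succeeds. */
    int fd = open(path, O_CREAT | O_WRONLY | O_EXCL, 0400);   /* r-------- */
    if (fd < 0) {
        rmdir(dir);
        return -1;
    }
    close(fd);

    int rc = unlink(path);   /* allowed: we can write the directory */
    rmdir(dir);
    return rc == 0 ? 0 : -1;
}
```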

User and group IDs

On most POSIX systems[8], user ID information, except for the password, is stored in the password file, /etc/passwd. This file contains lines of text, one line per subject, with each line containing the following fields:

- User name

- Password placeholder (typically x or *; the password itself is stored elsewhere)

- User ID

- User’s default group ID

- User’s full name

- Home directory

- Login shell

For example:

root:*:0:0:System Administrator:/var/root:/bin/sh

paul:x:1000:1000:Paul Krzyzanowski:/home/paul:/bin/bash

Group IDs are stored in a file called /etc/group. Each line contains a group name, a password placeholder, a unique group ID number, and a comma-separated list of the user names that belong to that group:

wheel:x:0:root

certusers:x:29:root,_jabber,_postfix,_cyrus,_calendar,_dovecot
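Programs rarely parse these files directly; they use the C library lookups getpwnam() and getgrgid(), which read /etc/passwd and /etc/group or, depending on system configuration, a directory service. A small sketch, where show_user is a hypothetical helper:

```c
#include <grp.h>
#include <pwd.h>
#include <stdio.h>
#include <sys/types.h>

/* Print a user's passwd fields and default group, then return the
 * user ID, or -1 if the user is unknown. */
long show_user(const char *name)
{
    struct passwd *pw = getpwnam(name);
    if (pw == NULL)
        return -1;

    struct group *gr = getgrgid(pw->pw_gid);
    printf("user=%s uid=%ld gid=%ld (%s) home=%s shell=%s\n",
           pw->pw_name, (long)pw->pw_uid, (long)pw->pw_gid,
           gr ? gr->gr_name : "?", pw->pw_dir, pw->pw_shell);

    /* gr_mem is a NULL-terminated list of member user names,
     * i.e., the last field of the /etc/group line. */
    if (gr != NULL)
        for (char **m = gr->gr_mem; *m != NULL; m++)
            printf("  listed member of %s: %s\n", gr->gr_name, *m);

    return (long)pw->pw_uid;
}
```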

Changing permissions

The chmod command (or the chmod system call) allows you to change permissions for a file or directory. The command lets you explicitly specify permissions for the user (owner), group, and other. For example, you can set permissions:

$ chmod u=rwx,g=rx,o= testfile

$ ls -l testfile

-rwxr-x--- 1 paul localaccounts 6 Jan 30 10:37 testfile

Add permissions (in this case, we’re adding write access to group and other):

$ chmod go+w testfile

$ ls -l testfile

-rwxrwx-w- 1 paul localaccounts 6 Jan 30 10:37 testfile

or remove permissions (take away write access from other):

$ chmod o-w testfile

$ ls -l testfile

-rwxrwx--- 1 paul localaccounts 6 Jan 30 10:37 testfile

The earliest versions of the command only accepted a bitmap for the permissions you want to set: one octal digit (0–7) for each of the three three-bit groups. The system call requires that form, and the command still accepts it:

$ chmod 754 testfile

$ ls -l testfile

-rwxr-xr-- 1 paul localaccounts 6 Jan 30 10:37 testfile
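The same 754 bitmap can be passed to the chmod system call. This sketch spells it out with the <sys/stat.h> constants and reads the result back with stat(); the scratch file under /tmp and the function name are assumptions.

```c
#define _XOPEN_SOURCE 700
#include <stdlib.h>
#include <sys/stat.h>
#include <unistd.h>

/* Apply the equivalent of `chmod 754` to a scratch file and return the
 * permission bits read back with stat(), or -1 on error. */
int chmod_754_demo(void)
{
    char path[] = "/tmp/chmod-demo-XXXXXX";   /* hypothetical scratch file */
    int fd = mkstemp(path);
    if (fd < 0)
        return -1;
    close(fd);

    mode_t mode = S_IRWXU             /* 7 = rwx for the owner */
                | S_IRGRP | S_IXGRP   /* 5 = r-x for the group */
                | S_IROTH;            /* 4 = r-- for other     */

    struct stat st;
    int ok = chmod(path, mode) == 0 && stat(path, &st) == 0;
    unlink(path);
    return ok ? (int)(st.st_mode & 0777) : -1;   /* drop file-type bits */
}
```

Unlike file creation, chmod() is not filtered by the umask, so the bits read back match the request exactly.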

Full access control lists (ACLs)

Sometimes groups are not enough and we may want to enumerate access permissions explicitly over a set of users or groups. This list of access rights for a file is called an access control list. An access control list comprises a list of access control entries (ACEs).

Each ACE identifies a user or group along with the access permissions for that user or group. Typically, these permissions are read, write, execute, and append for files and list, search, read attributes, add file, add sub-directory, and delete contents for directories.

A directory may also have an inheritance attribute, which specifies that files created within that directory will inherit specific access permissions (and directories created underneath that directory may inherit another set of access permissions). This helps with the problem of having to create many identical access control lists for a collection of files. Access control entries often also allow the use of wildcards (e.g., “*”) to refer to all users or all groups. For example, consider the following ACL:

pxk.* rwx

419-ta.* rwx

*.faculty rx

*.* x

Access control entries are processed in sequential order. Users pxk and 419-ta have read, write, execute access to the file. Anyone in the faculty group has read, execute access and everyone else only has execute access.

If the order were changed:

419-ta.* rwx

*.faculty rx

pxk.* rwx

*.* x

and user pxk belonged to the faculty group, that user would no longer have write access to the file because *.faculty has precedence over pxk.*.
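The first-match rule can be modeled in a few lines of C. This is a toy model of the user.group notation used in the example, not any operating system's ACL API; struct ace, acl_lookup, and the helper functions are hypothetical names.

```c
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

/* One entry in the "user.group perms" notation, e.g. {"pxk", "*", "rwx"}.
 * "*" matches any user or any group. */
struct ace {
    const char *user;
    const char *group;
    const char *perms;   /* a subset of "rwx" */
};

static bool user_matches(const char *pattern, const char *user)
{
    return strcmp(pattern, "*") == 0 || strcmp(pattern, user) == 0;
}

static bool group_matches(const char *pattern,
                          const char *const *groups, size_t ngroups)
{
    if (strcmp(pattern, "*") == 0)
        return true;
    for (size_t i = 0; i < ngroups; i++)
        if (strcmp(pattern, groups[i]) == 0)
            return true;
    return false;
}

/* Walk the entries in order and return the permissions of the FIRST
 * entry matching the user and one of the user's groups ("" if none). */
const char *acl_lookup(const struct ace *acl, size_t n, const char *user,
                       const char *const *groups, size_t ngroups)
{
    for (size_t i = 0; i < n; i++)
        if (user_matches(acl[i].user, user) &&
            group_matches(acl[i].group, groups, ngroups))
            return acl[i].perms;
    return "";
}
```

With user pxk in group faculty, the lookup returns rwx for the original ordering and rx for the reordered list, because *.faculty is reached first.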

For an example of setting access controls, see the manual page for chmod on macOS or setfacl on Linux.

The reason Unix systems (including Linux) did not originally implement full access control lists is the space that access control lists would require. All file attribute data is stored in a fixed-length chunk of data in the file system called the inode. The inode contains information such as the file’s owner, group, last status-change time, last modification time, last access time, size of file, and the blocks in the file system that contain file data. The beauty of the user-group-other mechanism is that it takes a small, fixed length of data (two bytes for the user ID, two bytes for the group ID, and two bytes for the permissions bit mask).

To support a true access control list, we need to have a variable size list. Most operating systems and file systems have evolved to support extended attributes: file attributes that do not fit into the inode structure (e.g., file download URL). These attributes are stored in extra blocks in the file system and are also used to store an access control list.

Access control lists stored this way often support permissions beyond basic read, write, and execute. On macOS, for example, they include:

- Operations on all objects: delete, readattr, writeattr, readextattr, writeextattr, readsecurity, writesecurity, chown

- Operations on directories: list, search, add_file, add_subdirectory, delete_child

- Operations on files: read, write, append, execute

They also include attributes to control inheritance: to define default ACLs for files in subdirectories.

In systems such as Linux, which support both ACLs and the simple user-group-other permissions, the following search order applies:

- If you are the owner of the file, only the owner permissions apply.

- Otherwise, if an ACL entry names you as a user, that entry applies.

- Otherwise, if you belong to the file's group or to a group named in an ACL entry, access is granted if any of those matching group entries grants it and denied otherwise.

- Otherwise, the “other” permissions apply.

Named-user and group entries are further limited by the ACL's mask entry, if one is present.

Initial permissions

On POSIX systems, each process has a file mode creation mask associated with it. It is called a umask and is set to a bit pattern that reflects the rwxrwxrwx permissions.

In this mask, however, each 1 bit represents a permission that will be removed from the file. The umask is applied when the file is created, stripping away permissions that might have been requested by the user program. For example, we might have code to create a file with read-write permissions for everyone (rw-rw-rw-):

int f = creat("testfile", 0666);

If our umask is 022 (binary = 000 010 010 = --- -w- -w-), then the kernel’s application of the umask will strip away write permissions for the group and others, resulting in final file permissions of rw-r--r--. A process can change the value of the umask via the umask system call. A user can change the value via the shell umask command, which will apply that umask to all commands executed from the shell.
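A C sketch of the creat example, assuming a scratch directory under /tmp (umask_demo is a hypothetical name). It sets the umask to 022 around the creat call, restores the old mask, and reads back the permissions the kernel actually applied, which should be 0644 (rw-r--r--). Where a directory carries a default ACL, that ACL takes the umask's place, so the result assumes no default ACL is present.

```c
#define _XOPEN_SOURCE 700
#include <fcntl.h>
#include <stdio.h>
#include <stdlib.h>
#include <sys/stat.h>
#include <unistd.h>

/* Create a file requesting 0666 under a umask of 022 and return the
 * permission bits it actually received, or -1 on error. */
int umask_demo(void)
{
    char dir[] = "/tmp/umask-demo-XXXXXX";   /* hypothetical scratch directory */
    char path[64];
    if (mkdtemp(dir) == NULL)
        return -1;
    snprintf(path, sizeof path, "%s/testfile", dir);

    mode_t old = umask(022);      /* 000 010 010: strip group/other write */
    int fd = creat(path, 0666);   /* request rw-rw-rw- */
    umask(old);                   /* restore the previous mask */

    int result = -1;
    if (fd >= 0) {
        struct stat st;
        if (fstat(fd, &st) == 0)
            result = (int)(st.st_mode & 0777);
        close(fd);
        unlink(path);
    }
    rmdir(dir);
    return result;
}
```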

Why is the umask important?

It provides some safeguards: if a program is careless about setting access permissions, the umask ensures that a reasonable default is applied (e.g., no write access for anyone but the owner). More importantly, for scripting languages that do not allow us to set permissions when a file is created (e.g., perl, bash), the umask mechanism gives us a way to set defaults. Python programs are also subject to this since Python's built-in open function does not have the ability to specify group or other permissions when creating a file. Neither does Java. In the shell, when you redirect output to a file, you have no way of setting permissions on the resulting file except by defining a umask a priori.

One could argue that you can set permissions after the file is created. For example:

./program > output

chmod 600 output

Java and Perl programming encourages programmers to do the same thing: set permissions after creating a file by using OS-specific calls.

Unfortunately, this introduces a race condition that can open the file to attackers. If an attacker opens the file after it is created but before its permission bits are changed, she has access to the file even if access permissions change. Access rights are checked only when the file is opened.
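The race disappears if the permissions are requested in the same call that creates the file. A C sketch, with create_private_file and private_file_demo as hypothetical names: O_CREAT | O_EXCL makes open() fail if the name already exists, so an attacker cannot pre-create the file and hold it open, and mode 0600 is in place from the moment the file exists.

```c
#define _XOPEN_SOURCE 700
#include <fcntl.h>
#include <stdio.h>
#include <stdlib.h>
#include <sys/stat.h>
#include <unistd.h>

/* Create path with rw------- in one step; fail if it already exists.
 * Returns an open descriptor, or -1 on error. */
int create_private_file(const char *path)
{
    return open(path, O_CREAT | O_EXCL | O_WRONLY, S_IRUSR | S_IWUSR);
}

/* Demo: create "output" in a fresh scratch directory (hypothetical
 * location) and return its permission bits, or -1 on error. */
int private_file_demo(void)
{
    char dir[] = "/tmp/private-XXXXXX";
    char path[64];
    if (mkdtemp(dir) == NULL)
        return -1;
    snprintf(path, sizeof path, "%s/output", dir);

    int fd = create_private_file(path);
    int again = create_private_file(path);   /* must fail: name exists */

    struct stat st;
    int rc = (fd >= 0 && again < 0 && fstat(fd, &st) == 0)
             ? (int)(st.st_mode & 0777) : -1;

    if (fd >= 0)
        close(fd);
    if (again >= 0)
        close(again);
    unlink(path);
    rmdir(dir);
    return rc;
}
```

In the shell, the equivalent is to set a restrictive umask in a subshell before redirecting: (umask 077; ./program > output).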

Changing file ownership

User and group identities are not fixed. A file's owner can be changed via the chown command (or chown system call), although most modern systems, including Linux, allow only root to give a file away:

chown alice testfile

Similarly, the group of a file may be changed as well; a file's owner who is not root may only choose a group that the owner belongs to:

chgrp accounting testfile

The chown system call is also used for changing groups from within a program.
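From C, chown() can change just the group, as chgrp does, by passing (uid_t)-1 for the owner, which leaves the owner unchanged. The sketch below (hypothetical function names, scratch file under /tmp) switches a file to the caller's effective group, which the file's owner is always permitted to do.

```c
#define _XOPEN_SOURCE 700
#include <stdlib.h>
#include <sys/stat.h>
#include <unistd.h>

/* Change only the group of path to our effective group ID. */
int chgrp_to_my_group(const char *path)
{
    return chown(path, (uid_t)-1, getegid());   /* owner left unchanged */
}

/* Demo on a scratch file: returns 0 if the group now matches getegid(). */
int chgrp_demo(void)
{
    char path[] = "/tmp/chgrp-demo-XXXXXX";   /* hypothetical scratch file */
    int fd = mkstemp(path);
    if (fd < 0)
        return -1;
    close(fd);

    struct stat st;
    int ok = chgrp_to_my_group(path) == 0 && stat(path, &st) == 0 &&
             st.st_gid == getegid();
    unlink(path);
    return ok ? 0 : -1;
}
```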

Changing user and group IDs

When a POSIX (e.g., Linux) system boots up, the first process[9] runs with root privileges. Root is a special user (user ID = 0) that has administrator privileges. File access permissions do not apply to root, who can read, write, or delete files even if access permissions do not grant that privilege. Root users also have access to certain restricted system calls.

How do processes start with other IDs? For example, how does your shell run with your user ID and your group ID?

A process running as root can change its user ID or group ID to that of another user/group with the setuid and setgid system calls. A login process, for example, runs as root and:

- Requests your credentials: your user name and password

- Validates those credentials to authenticate you

- Once you’re authenticated, reads your user ID, group ID, home directory, and login program (login shell) from the /etc/passwd file

- Changes ownership and launches the shell:

    - Change to the user’s home directory:

      chdir(user_home_directory);

    - Change to the user’s group ID:

      setgid(user_group_id);

    - Change to the user’s user ID:

      setuid(user_id);

    - Execute the user’s login program (usually a shell). On POSIX systems, execution does not spawn a new process (fork does that) but overwrites the code in the current process:

      execve(user_shell, argv, envp);
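The identity-switching steps can be collected into one function. This is a sketch, not the code of any particular login program: become_user is a hypothetical name, setting supplementary groups and the final execve() are left out, and each call's return value is checked, since a failed setuid() that goes unnoticed leaves the process running as root. Note that setgid() comes before setuid(): once the user ID is no longer root, the process loses the right to change its group ID.

```c
#define _XOPEN_SOURCE 700
#include <sys/types.h>
#include <unistd.h>

/* Switch to the given home directory, group, and user.
 * A real login program would also set supplementary groups
 * (e.g., with initgroups()) before setuid() and then execve() the shell.
 * Returns 0 on success, -1 on any failure. */
int become_user(uid_t uid, gid_t gid, const char *home)
{
    if (chdir(home) != 0)
        return -1;
    if (setgid(gid) != 0)   /* group first, while we still have privilege */
        return -1;
    if (setuid(uid) != 0)   /* when running as root, this is permanent */
        return -1;

    /* Verify the drop: a non-root process must not be able to regain root. */
    if (uid != 0 && setuid(0) == 0)
        return -1;
    return 0;
}
```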

User ID change on program execution

When a user runs a program, it runs with that user’s user ID and, hence, with the privileges of that user.

Sometimes, however, a program may need special access to files, devices, or system calls that the user of the program should not be allowed to access. For instance, a database program reads and writes files that store the underlying data but it is not a good idea to allow users of the database to be able to access those files directly. On some systems, network programs such as ping may need to create raw sockets yet normal users are restricted from doing so.

To address these needs, executable files can have a special permission bit set called setuid. When set, the executable file will run with the user ID set to the owner of the file rather than the user that is invoking the program. This user ID becomes the effective user ID of the process.

On some systems (but not Linux, which ignores it), the setuid bit can also be used on directories. If a directory has its setuid bit set, all files and sub-directories created within it will be owned by the directory owner rather than the user who created them.

A related access permission bit, called the setgid bit, causes programs to run with the effective group ID set to the group ID of the file.
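A short C sketch of inspecting these bits with stat() and the S_ISUID and S_ISGID constants. The scratch file and function names are assumptions. Setting the setuid bit on a file you own is permitted, though the bit only takes effect when the file is executed from a file system that honors it.

```c
#define _XOPEN_SOURCE 700
#include <stdlib.h>
#include <sys/stat.h>
#include <unistd.h>

/* Report the special bits on a file: 1 = setuid, 2 = setgid (OR-ed),
 * or -1 if stat() fails. In `ls -l` output they appear as an "s" in
 * place of the owner's or group's "x", e.g. -rwsr-xr-x. */
int special_bits(const char *path)
{
    struct stat st;
    if (stat(path, &st) != 0)
        return -1;
    return ((st.st_mode & S_ISUID) ? 1 : 0) | ((st.st_mode & S_ISGID) ? 2 : 0);
}

/* Demo: give a scratch file mode 4755 (rwsr-xr-x) and read the bits back. */
int setuid_demo(void)
{
    char path[] = "/tmp/suid-demo-XXXXXX";   /* hypothetical scratch file */
    int fd = mkstemp(path);
    if (fd < 0)
        return -1;
    close(fd);

    int bits = (chmod(path, S_ISUID | 0755) == 0) ? special_bits(path) : -1;
    unlink(path);
    return bits;
}
```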

Setuid programs are particularly attractive targets because they allow an intruder to gain privileges of another user, usually the administrator. If a setuid program can be co-opted to execute some other code or access a file that the intruder wants, it may provide a way for the intruder to run arbitrary administrative commands on the system or access arbitrary data.

In Windows, rather than using setuid, similar behavior is achieved through a feature known as User Account Control (UAC) and the use of access tokens. When a program needs to perform an operation that requires elevated privileges, UAC prompts the user for permission or a password (for standard users) to run the program with elevated privileges. This is often used when an application needs administrative rights to perform a task.

Windows also uses a mechanism called “Run as” to execute a program with a different user’s credentials, similar in concept to the sudo command in Unix/Linux. Executable files can be marked to always run as an administrator, which triggers UAC prompts when executed by users without administrative privileges.

Does Windows have setuid?

While Windows does not have a direct equivalent to the Unix setuid mechanism, it provides two alternatives for running programs with elevated privileges or under a different user context.

First, Windows applications can request administrative privileges through User Account Control (UAC). By setting the requestedExecutionLevel in the application’s manifest to requireAdministrator, an application causes the system to prompt the user to approve elevation at launch. This interactive model ensures explicit user consent before privilege escalation.

Second, Windows allows processes to be launched under different user accounts using mechanisms like runas, the Task Scheduler, or programmatic APIs such as CreateProcessAsUser. These approaches require appropriate permissions and are typically used in managed or automated environments. Together, these tools provide controlled ways to achieve the kind of functionality setuid offers in Unix, but with a stronger emphasis on explicit privilege management and user awareness.

Principle of Least Privilege

An important design principle when building secure systems is the principle of least privilege, which states that:

At each abstraction layer, every element should have access only to the resources necessary to perform its task

An element refers to either a user, a process, or a function. The principle means that even if an element is compromised (and we must be paranoid and assume that is possible), the scope of damage will be limited. Some examples of the principle in action are:

You have the right to kill processes, but only your own. Even if intruders gain your identity, they will not be able to destroy processes throughout the system.

You can read the /etc/hosts file, which contains system overrides for the name-to-IP address mappings, but you cannot write it.

When you write a program, private member functions are not accessible outside the class. A poorly written program cannot casually invoke these functions.

Some examples that violate the principle are:

A ping program runs setuid root since it needs to access raw sockets. Because of this, if it is compromised, an attacker can do anything on the system. All the program needs is access to a raw socket; instead, it gets full administrative control of the entire system.

A program uses global variables even though only one function ever touches these variables. It creates the risk that other functions may improperly access those variables.

Default permissions on a file set to read-write for all introduce the risk that people who have no business touching the file will modify it.

A mail server runs with root privileges because it needs to listen on port 25[10], but that also gives it access to all other files and devices on the system as well as to privileged system calls.

Privilege separation

Least privilege can be tricky to implement since it requires a thorough understanding of how a program behaves. Moreover, running a process as root is sometimes necessary but imbues an application with incredible power that can be abused. To get around this issue, we can use privilege separation. The principle of privilege separation is to break the program into multiple parts. Each part runs with only the privileges it needs to perform its task. If one part becomes compromised, potential damage is limited to only that component. More importantly, there are usually only a small number of operations that need to be done with high privilege levels, so those components can have a small amount of code, can be audited more easily, and are likely to have fewer potential vulnerabilities.

Privilege separation can be achieved by starting distinct processes, each running under a distinct user ID, that communicate with each other over mechanisms such as named pipes or local sockets. One straightforward way to achieve privilege separation is to run a single process and then split it into two components, one running at an elevated privilege level. Each process is assigned a real and an effective user ID. The operating system determines privileges based on the effective user ID. Most of the time, they are equivalent. However, if an executable file is tagged with a setuid permission bit, it runs with the file owner’s user ID. In this case, the effective user ID (euid) is that of the file owner while the real user ID remains that of the user. The operating system remembers who the user is for a process even for setuid programs. POSIX systems provide a system call called seteuid that allows a process to change the effective user ID. Unprivileged processes may only set the effective user ID to the real user ID (or to the saved set-user-ID), while privileged processes can set it to any user ID.

We can achieve privilege separation between two components by installing a program setuid to the highest privilege level needed. When the program starts, it:

1. Creates a communication link to itself using pipes, named pipes, local sockets, shared memory, or whatever other mechanisms the operating system provides.

2. Uses the fork system call to create a child process. Fork simply replicates the process, so the parent and child are running the same program. The only difference is the return value from fork (the child gets back 0 while the parent gets the child’s process ID).

3. The child lowers its privilege to that of the user by setting the effective user ID to the real user ID:

seteuid(getuid());

Because seteuid leaves the saved set-user-ID unchanged, a compromised child could later switch back. To make the drop permanent, the child can call setuid(getuid()) instead: when the effective user ID is root, setuid sets the real, effective, and saved user IDs.

4. The parent now calls a function that only handles the high-privilege needs of the application. The child handles everything else.

5. The parent and child communicate via the inter-process link established in step 1.
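The steps above might be sketched in C as follows. This is an illustration under simplifying assumptions: a socketpair serves as the link, the child drops privilege permanently with setuid(getuid()), and privileged_service is a hypothetical stand-in for the small privileged task (here it just doubles a number). The child returns the parent's reply as its exit status only so that the round trip is easy to observe.

```c
#define _XOPEN_SOURCE 700
#include <sys/socket.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Hypothetical stand-in for the small, auditable privileged operation. */
static int privileged_service(int request)
{
    return request * 2;
}

/* Returns the child's exit status (the parent's reply), or -1 on error. */
int privsep_roundtrip(int request)
{
    int sv[2];
    if (socketpair(AF_UNIX, SOCK_STREAM, 0, sv) != 0)   /* step 1: the link */
        return -1;

    pid_t pid = fork();                                 /* step 2 */
    if (pid < 0) {
        close(sv[0]);
        close(sv[1]);
        return -1;
    }

    if (pid == 0) {
        /* Child: the unprivileged part of the application. */
        close(sv[0]);
        if (setuid(getuid()) != 0)                      /* step 3: drop for good */
            _exit(255);
        int reply = 0;
        if (write(sv[1], &request, sizeof request) != (ssize_t)sizeof request ||
            read(sv[1], &reply, sizeof reply) != (ssize_t)sizeof reply)
            _exit(255);
        _exit(reply & 0xff);
    }

    /* Parent: serves only the privileged request (steps 4 and 5). */
    close(sv[1]);
    int req;
    if (read(sv[0], &req, sizeof req) == (ssize_t)sizeof req) {
        int reply = privileged_service(req);
        if (write(sv[0], &reply, sizeof reply) != (ssize_t)sizeof reply) {
            /* The child sees a short read and exits with status 255. */
        }
    }
    close(sv[0]);

    int status;
    if (waitpid(pid, &status, 0) < 0 || !WIFEXITED(status))
        return -1;
    return WEXITSTATUS(status);
}
```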

There are variations of this. If you don’t want the risk of having even unused code resident in the high-privilege portion of the service, the parent can simply call execve to run a totally separate program to handle high-privilege tasks. Even more simply, if a process can do its privileged operations early on, such as opening a restricted file, it can then drop its privilege by changing to another user ID without having to create or communicate with another process.
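The simplest variation, doing the privileged work first and then dropping privilege, could look like the sketch below. open_then_drop is a hypothetical name; the descriptor stays usable after the drop because access rights are checked only when a file is opened. A production version would typically also clear supplementary groups (for example, with setgroups()) before calling setuid().

```c
#define _XOPEN_SOURCE 700
#include <fcntl.h>
#include <sys/types.h>
#include <unistd.h>

/* Open a (possibly restricted) file while still privileged, then switch
 * permanently to the given group and user. Returns the descriptor,
 * or -1 on error. */
int open_then_drop(const char *path, uid_t uid, gid_t gid)
{
    int fd = open(path, O_RDONLY);   /* the privileged step */
    if (fd < 0)
        return -1;

    if (setgid(gid) != 0 || setuid(uid) != 0) {   /* group first, then user */
        close(fd);
        return -1;
    }
    if (uid != 0 && setuid(0) == 0) {   /* the drop must be irreversible */
        close(fd);
        return -1;
    }
    return fd;
}
```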

Mandatory Access Control (MAC)

With access control lists, subjects are in control: they set access permissions on their objects. This is called Discretionary Access Control, or DAC. A user can set access rights to give any other subject access to a file. For instance, a user on a Linux system may run the command

chmod ugo+rw secret.docx

and give read-write access for the file to everyone (u=user, g=group, o=other, r=read, w=write).

Access control lists were designed to work this way. However, this model does not work in environments where management needs to enforce its policies and define access permissions. Also, when access rights are associated with objects, it becomes difficult to turn off access for a specific subject except by locking that user out (e.g., disabling their account). That user will always, at the least, have access to whatever “other” permissions are set for each object. The alternative is to go through each object in the system, check if the user is in the ACL for that object, remove the user from the ACL, and ensure there are no default access permissions for that object.

Mandatory Access Control (MAC) is a form of access control where policy is centrally enforced and users cannot override the policy. With MAC, administrators are in charge of access permissions.

MLS: Multilevel Security

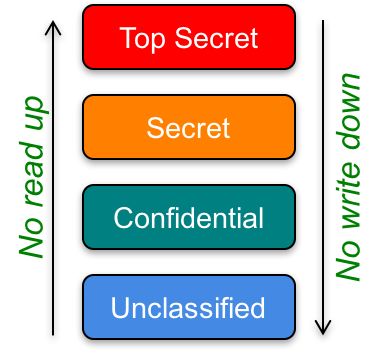

Multilevel security systems were designed to preserve confidentiality via multiple levels of classified data that coexist within one system. The Bell-LaPadula model is the best known of these models. It was originally designed for the U.S. Air Force to enable users with different classification levels to use a single, shared computer system. The military uses four classification levels: unclassified, confidential, secret, and top secret. You do not have access to information above your clearance and you cannot create information below your clearance.

With the Bell-LaPadula model, every object is assigned one of those four classification levels. Every subject (user) is also associated with a clearance at one of those levels.

If you have confidential clearance, you can read confidential and unclassified data but you cannot read anything of a higher clearance level: secret and top secret. You can create data at confidential, secret, and top-secret levels (yes, you can create files that you will not be allowed to read) but you cannot create unclassified data. The motivation for this model is confidentiality – the prevention of information leakage. If a piece of software is hacked or a user account is compromised, it will not be able to leak data from higher classification levels.

Bell-LaPadula is summarized as no read up; no write down. You cannot read from a higher clearance level or write to a lower clearance level. It was designed to prevent someone from declassifying information. More formally, the Bell-LaPadula model has three properties:

- The Simple Security Property: A subject cannot read from a higher security level (no read up, NRU).

- The *-Property (Star Property): A subject cannot write to a lower security level (no write down, NWD).

- The Discretionary Security Property: Discretionary access control (e.g., access control lists) can be used with the model only after mandatory access controls are enforced. This means that you can, for example, block read-write access to your files, and even users who have the clearance to access those files will be denied access.

The ability to write data to higher classification levels brings up the possibility that someone can overwrite a file at a higher classification level. Overwriting the contents of the file would be an attack on availability. To avoid this, systems usually allow overwriting a file only if the process’s (subject’s) and file’s security labels match exactly and discretionary access controls permit the write. For example, a user with confidential clearance can only overwrite a file at the confidential level.
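The Bell-LaPadula rules reduce to comparisons on ordered levels. A C sketch covering levels only, with hypothetical function names; the discretionary check would be applied after these mandatory checks.

```c
#include <stdbool.h>

/* Classification levels, ordered from lowest to highest. */
enum level { UNCLASSIFIED, CONFIDENTIAL, SECRET, TOP_SECRET };

/* Simple Security Property: no read up. */
bool blp_may_read(enum level subject, enum level object)
{
    return subject >= object;
}

/* *-Property: no write down (writing up is permitted). */
bool blp_may_write(enum level subject, enum level object)
{
    return subject <= object;
}

/* Overwriting existing data additionally requires an exact label match,
 * so a lower-level subject cannot destroy higher-level data. */
bool blp_may_overwrite(enum level subject, enum level object)
{
    return subject == object;
}
```

A confidential subject can therefore read unclassified data and write secret data, but cannot read secret data, write unclassified data, or overwrite a secret file.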

The Bell-LaPadula model has a tranquility principle that states that security labels never change during operation. This means objects and subjects always remain at the same classification levels. In practice, systems implement a weak tranquility principle, where security labels may change, but only in a way that does not violate security policy. This is done to implement the principle of least privilege. If a user has top secret clearance, a program will run at the lowest clearance level and get upgraded only when, and if, it needs to access data at a higher classification level.

The Bell-LaPadula model was designed to fit the way the government treats classified data. In practice, it became a complicated model to implement and enforce. For instance, the model does not provide a way to declassify files (lower their classification level). This can only be done via a special “trusted subject” who has such authority.

The model is also difficult to use in that it makes collaborative work among people with different classification levels essentially impossible.

Finally, the use of shared servers, databases, and networking makes the model challenging. Can a database store data of varying security levels? If so, that is outside of the visibility of the operating system and must be implemented within the database software. How about email or text messaging? A user should only be able to send messages to users at the same classification level or higher. But users at a higher classification level would not be permitted to respond. The very concept of classification levels works for the military but does not map well to civilian business practices.

Biba Integrity Model

The Bell-LaPadula model was designed strictly to address confidentiality. The Biba model is a similar multilevel security model that is designed to address data integrity. With confidentiality, we were primarily interested in who could read the data and ensuring that nobody at a lower classification level was able to access the data. With integrity, we are primarily concerned with imposing constraints on who can write data and ensuring that a lower-integrity subject cannot write or modify higher-integrity data.

The properties of the Biba model are:

- The Simple Integrity Property: A subject cannot read an object from a lower integrity level. This ensures that subjects will not be corrupted with information from objects at a lower integrity level. For example, a process will not read a system configuration file created by a lower-integrity-level process.

- The *-Property (Integrity Star Property): A subject cannot write to an object of a higher integrity level. This means that lower-integrity subjects will not corrupt objects at a higher integrity level. For example, a web browser may not write a system configuration file.

The Biba model feels like the opposite of the Bell-LaPadula model. The Bell-LaPadula model was summarized as no read up, no write down. The Biba model is no read down, no write up. However, note that Bell-LaPadula dealt with confidentiality and Biba deals with integrity. The definition of “what’s important” is different.

Imagine a healthcare system with three levels of integrity:

- High Integrity: Medical records, verified diagnoses, official treatment plans.

- Medium Integrity: Healthcare providers' working notes, draft treatment plans.

- Low Integrity: Patient-submitted forms, unverified data, external information.

With the Biba model:

- A doctor (high-integrity subject) is trusted to modify patient records (high-integrity objects). Under the “no read down” rule, however, the doctor’s processes cannot read low-integrity objects such as unverified patient-submitted forms. This prevents unverified information from flowing into, and lowering the integrity of, the high-integrity medical records.

- A nurse (medium-integrity subject) can update working notes (medium-integrity objects) but cannot directly modify the official medical records (high-integrity objects) due to the “no write up” rule. This ensures the official records are only modified by highly trusted subjects.

- A patient (low-integrity subject) can submit forms (low-integrity objects) but cannot update their own medical records (high-integrity objects) due to the “no write up” rule. This prevents the patient from corrupting the official medical records with unverified information.

In this example, the Biba model ensures that only trusted, high-integrity users can modify high-integrity data, preserving the integrity and trustworthiness of critical information like medical records.
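The two Biba properties mirror the Bell-LaPadula comparisons with the inequalities reversed. A C sketch using the three levels of the healthcare example; the enum and function names are hypothetical.

```c
#include <stdbool.h>

/* Integrity levels from the healthcare example, lowest to highest. */
enum integrity { LOW_INTEGRITY, MEDIUM_INTEGRITY, HIGH_INTEGRITY };

/* Simple Integrity Property: no read down. */
bool biba_may_read(enum integrity subject, enum integrity object)
{
    return subject <= object;
}

/* Integrity *-Property: no write up. */
bool biba_may_write(enum integrity subject, enum integrity object)
{
    return subject >= object;
}
```

With these rules, a doctor can update medical records, while a nurse or a patient cannot, and a doctor's process cannot read unverified patient-submitted forms.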

Microsoft implemented support for the Biba model in Windows with Mandatory Integrity Control. File objects are marked with an integrity level:

- Critical files: System

- Elevated users: High

- Regular users and objects: Medium

- Internet Explorer: Low

A new process gets the minimum of the user’s integrity level and the file’s integrity level. The default policy is the Biba model’s no-write-up rule.
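Following the description above, the Windows rule can be sketched as a minimum and a comparison. This models the text only, not the Windows API; the enum and function names are hypothetical.

```c
#include <stdbool.h>

/* Integrity levels as listed above, lowest to highest. */
enum mic_level { MIC_LOW, MIC_MEDIUM, MIC_HIGH, MIC_SYSTEM };

/* A new process runs at the lower of the user's level and the level
 * of the executable file it was started from. */
enum mic_level mic_process_level(enum mic_level user, enum mic_level file)
{
    return user < file ? user : file;
}

/* Default policy: no write up. Reads are not restricted in this sketch. */
bool mic_may_write(enum mic_level process, enum mic_level object)
{
    return process >= object;
}
```

A medium-level user launching a low-level program gets a low-level process, which cannot write the user's medium-level files.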

The goal of this policy is to limit potential damage from malware. Anything downloaded with Internet Explorer[11] (IE) would be able to read files but would not be able to write them since IE runs at the lowest integrity level and all objects it creates will be at that level … even if the user’s integrity level was a higher value.

As with the Bell-LaPadula model, Biba rarely fits the way things work in the real world. Even with Microsoft’s IE, there were far too many times when downloaded content needed to be brought to a higher integrity level (otherwise it was mostly useless). Trusted subjects were allowed to override the security model and do this. Since it happened so often, users got used to (and annoyed by) pop-up dialog boxes asking for permission. Microsoft dropped the NoReadDown restriction to make the system more usable but, in doing so, did not end up protecting the system from malicious content.

Type Enforcement (TE) Model

The Type Enforcement (TE) model is a mandatory access control mechanism that implements an access control matrix and gives mandatory access control priority over any existing discretionary access control mechanisms.

The model defines domains and types:

- Domains: These are the security contexts or labels assigned to subjects (usually processes). A domain specifies the set of permissions a subject has and determines how it can interact with other objects in the system. Essentially, a domain governs the behavior of a process and what actions it can perform. Domains apply to subjects (processes).

- Types: These are the labels assigned to objects (like files, directories, devices, etc.). A type defines how an object can be accessed and which domains (processes) are allowed to interact with it, specifying the access control rules. Types apply to objects (resources).

The Type Enforcement policy defines how domains (subjects) can interact with types (objects), controlling operations such as read, write, and execute. If the TE policy denies access, discretionary access control rules do not matter: the user may not access the file. If the TE policy permits access, the system then checks the object’s access control list to see whether the file’s owner granted access. Access is allowed only if both checks succeed.
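A minimal sketch of this two-stage check, assuming a TE matrix keyed by (domain, type) pairs and a per-file ACL. All domain names, type names, paths, and users here are hypothetical; real SELinux policies use their own syntax and many more permission classes.

```python
# Hypothetical TE matrix: (domain, type) -> operations the policy permits.
TE_POLICY: dict[tuple[str, str], set[str]] = {
    ("webserver_d", "web_content_t"): {"read"},
    ("editor_d", "web_content_t"): {"read", "write"},
}

# Hypothetical type labels for files.
FILE_TYPES: dict[str, str] = {"/srv/www/index.html": "web_content_t"}

# Hypothetical discretionary ACLs set by each file's owner: path -> user -> operations.
ACLS: dict[str, dict[str, set[str]]] = {
    "/srv/www/index.html": {"alice": {"read", "write"}, "www": {"read"}},
}


def access_allowed(user: str, domain: str, path: str, op: str) -> bool:
    obj_type = FILE_TYPES.get(path)
    if obj_type is None:
        return False  # unlabeled object: deny by default
    # Mandatory check first: if TE denies the operation, the ACL is never consulted.
    if op not in TE_POLICY.get((domain, obj_type), set()):
        return False
    # TE permits it, so the owner's discretionary ACL must also grant it.
    return op in ACLS.get(path, {}).get(user, set())


if __name__ == "__main__":
    # alice's ACL allows writing, but a process in webserver_d still cannot write.
    print(access_allowed("alice", "webserver_d", "/srv/www/index.html", "write"))
    print(access_allowed("alice", "editor_d", "/srv/www/index.html", "write"))
```

The owner can loosen the ACL as much as they like; the mandatory matrix still caps what any process in a given domain can do.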

Role-Based Access Control (RBAC) Model

The Bell-LaPadula model worked for managing information in the military but did not map well onto civilian life. The Role-Based Access Control (RBAC) model was created with business processes in mind and is designed to be more general than multilevel models such as Bell-LaPadula.

Access decisions are based on roles rather than user IDs. A role is a set of permissions that apply to a specific job function. Administrators define roles for various job functions, and users are then assigned to one or more roles.

A role can be thought of as a set of transactions or operations that a user or a group of users can perform within the context of an organization. Note that roles relate to job functions, not specific access permissions. For instance, “update customer information” is a role while “write to the database” is not. RBAC enables fine-grained access since roles can be defined in application-specific terms: “transfer funds” instead of “read/write account data.”

Unlike access control lists, RBAC assigns permissions to roles rather than users. This creates a level of indirection between the subject and the object.

Each user is granted membership into one or more roles based on their responsibilities in the organization. The operations that a user is permitted to perform are based on the user’s current role.

This makes it easy to revoke a user’s membership or add new users to a role, simplifying the management of privileges. Administrators can update roles without modifying permissions for every user who has those roles. Similarly, the mapping between roles and objects can change dynamically. If developers on a certain project no longer need access to a specific source code repository, that access can be removed from the role, affecting all developers on the project at once. Objects no longer need access rules that identify individual subjects.
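The indirection between users, roles, and permissions can be modeled with two mappings. This is a minimal in-memory sketch; the class, role names, and permission strings are hypothetical and stand in for whatever an administrator would define.

```python
from collections import defaultdict


class RoleRegistry:
    """Hypothetical RBAC store: users map to roles, roles map to permissions."""

    def __init__(self) -> None:
        self.role_perms: defaultdict[str, set[str]] = defaultdict(set)
        self.user_roles: defaultdict[str, set[str]] = defaultdict(set)

    def grant(self, role: str, perm: str) -> None:
        self.role_perms[role].add(perm)

    def revoke(self, role: str, perm: str) -> None:
        # One change to the role affects every user who holds it.
        self.role_perms[role].discard(perm)

    def assign(self, user: str, role: str) -> None:
        self.user_roles[user].add(role)

    def unassign(self, user: str, role: str) -> None:
        self.user_roles[user].discard(role)

    def permitted(self, user: str, perm: str) -> bool:
        # Users never hold permissions directly; they inherit them through roles.
        return any(perm in self.role_perms[role] for role in self.user_roles[user])


if __name__ == "__main__":
    rbac = RoleRegistry()
    rbac.grant("project_x_dev", "read:repo_x")
    for dev in ("alice", "bob"):
        rbac.assign(dev, "project_x_dev")
    rbac.revoke("project_x_dev", "read:repo_x")  # removes access for both at once
    print(rbac.permitted("alice", "read:repo_x"), rbac.permitted("bob", "read:repo_x"))
```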

Good role administration will enforce the principle of least privilege: each role should have the minimum set of privileges necessary to perform the function of that role and nothing more. A user may be assigned multiple roles but have one role active at a time.

RBAC requires the following conditions:

Role assignment. A subject can execute an operation only if the subject has been assigned a role.

Role authorization. It’s not enough for a subject to be assigned a role; the subject must be authorized for a specific role for it to apply to that subject. In some cases, this might require the subject to log into a role or explicitly request activation of that role. This ensures that users can only take on roles for which they have been authorized and that match their current task. It allows the organization to implement the principle of least privilege since a user will not have all of their roles activated at the same time. A role should be active only as long as the subject needs to perform the necessary tasks.

Transaction authorization. A subject can execute a transaction (operation) only if the transaction is authorized through the subject’s role membership. The role defines what operations a user can perform.
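The three conditions can be sketched as checks in a hypothetical session object. Following the earlier statement that a user may have one role active at a time, activating a role here replaces any previously active one. The class, role, and transaction names are illustrative assumptions.

```python
class RBACSession:
    """Hypothetical RBAC checker with one active role per user."""

    def __init__(self, role_ops: dict[str, set[str]], authorized: dict[str, set[str]]) -> None:
        self.role_ops = role_ops          # role -> transactions the role permits
        self.authorized = authorized      # user -> roles the user has been assigned
        self.active: dict[str, str] = {}  # user -> currently active role

    def activate(self, user: str, role: str) -> bool:
        # Role authorization: only a role the user is authorized for can be activated.
        if role not in self.authorized.get(user, set()):
            return False
        self.active[user] = role  # replaces any previously active role
        return True

    def deactivate(self, user: str) -> None:
        self.active.pop(user, None)

    def can_execute(self, user: str, transaction: str) -> bool:
        # Role assignment and activation: a user with no active role can execute nothing.
        role = self.active.get(user)
        if role is None:
            return False
        # Transaction authorization: the active role must permit the transaction.
        return transaction in self.role_ops.get(role, set())


if __name__ == "__main__":
    session = RBACSession(
        role_ops={"teller": {"transfer_funds"}, "auditor": {"view_ledger"}},
        authorized={"carol": {"teller", "auditor"}},
    )
    print(session.can_execute("carol", "transfer_funds"))  # no role active yet
    session.activate("carol", "teller")
    print(session.can_execute("carol", "transfer_funds"), session.can_execute("carol", "view_ledger"))
```

Keeping only one role active means that, at any moment, the user holds just the permissions of the task at hand.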

A role is conceptually similar to a group. However, a group is just a collection of users, whereas a role is a collection of permissions. Moreover, RBAC is mandatory: an administrator decides which objects can be accessed by which users and in what manner. With groups in access control lists, the owner of an object may give a group any access permissions they choose.

RBAC is the dominant MAC model in larger corporations, particularly those that need to conform to regulatory audits, such as HIPAA for healthcare and Sarbanes-Oxley for finance. It makes it easy to manage the movement and reassignment of employees. However, a full implementation will require application awareness. For example, databases may need to restrict specific operations based on roles:

- Role A: cannot add or delete users to/from the table

- Role B: can delete users but cannot change the salary of a user

- Role C: can change the salary of a user but not add or delete users

The principles of RBAC are essential to database security since the operating system is not involved in database transactions. Operating system access control sees the world at the level of system calls and file accesses. An operating system does not track functional operations such as deleting an employee, canceling an order, or changing a salary. RBAC is used in operating systems, but there it can only control access to files and other system resources rather than functions that are tied to specific business workflows.
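Because the operating system sees only reads and writes to the database files, checks like those for Roles A, B, and C have to live in the application or database layer. The sketch below assumes a hypothetical in-memory employee table and hypothetical operation names; it only illustrates where such checks go, not how any particular database implements them.

```python
class PermissionDenied(Exception):
    pass


# Operations each role may perform, following the Role A/B/C list above.
# Role A's set is empty here because the text only says what it cannot do.
ROLE_OPERATIONS: dict[str, set[str]] = {
    "A": set(),
    "B": {"delete_employee"},
    "C": {"change_salary"},
}


class EmployeeTable:
    """Hypothetical table: employee name -> salary."""

    def __init__(self, rows: dict[str, int]) -> None:
        self.rows = dict(rows)

    def _require(self, role: str, operation: str) -> None:
        # The check is about the business operation, not the underlying file write.
        if operation not in ROLE_OPERATIONS.get(role, set()):
            raise PermissionDenied(f"role {role} may not {operation}")

    def delete_employee(self, role: str, name: str) -> None:
        self._require(role, "delete_employee")
        del self.rows[name]

    def change_salary(self, role: str, name: str, salary: int) -> None:
        self._require(role, "change_salary")
        self.rows[name] = salary
```

Both methods would look like the same kind of file write to the operating system; only the application knows which business operation is being performed.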

Multilateral security

The Bell-LaPadula and Biba models are classic cases of multilevel security. Subjects and objects are assigned classification labels (or integrity labels in the case of Biba) and rules control what you can read or write.

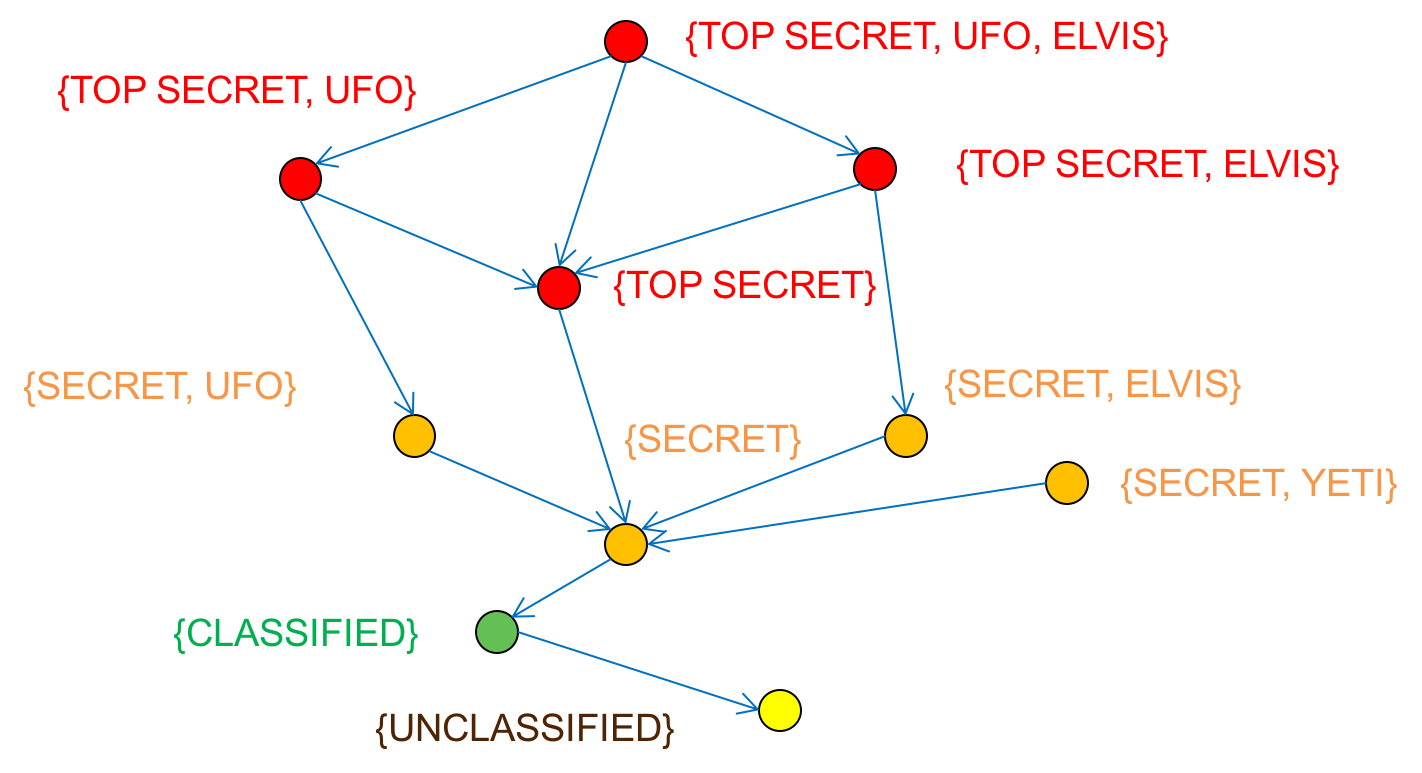

The Bell-LaPadula model, however, doesn’t fully implement how the military deals with classified data. In addition to the four classification tiers (unclassified, confidential, secret, top secret), each security level can also be divided into compartments. This is usually done at the top secret level but can be done at any level. In the government, this is referred to as TS/SCI: Top Secret/Sensitive Compartmented Information. Even if you have top-secret clearance, you must be explicitly granted access to each compartment and are not allowed access to data in other compartments. This is a formalized implementation of the “need to know” principle.

Objects in a multilateral security model are identified not only by their classification level but are further tagged with zero or more security labels (compartments). If you do not have clearance for a specific label, then you cannot access the data regardless of your clearance level. For example, {Top Secret, UFO} data cannot be read by someone with only {Top Secret} clearance. Someone with {Secret, UFO} clearance also cannot read the data: they have the right label (compartment) but a lower clearance level. Conversely, someone with {Top Secret} clearance cannot read {Secret, UFO} data. Even though they possess a higher clearance level, they lack the compartmental clearance: the UFO label.
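The read rule for multilateral labels is a dominance check: the subject’s level must be at least the object’s, and the subject must hold every compartment on the object. A minimal sketch, assuming the four classification tiers named above and representing compartments as sets of strings (the `Label` type and helper names are hypothetical):

```python
from dataclasses import dataclass

RANK = {"Unclassified": 0, "Confidential": 1, "Secret": 2, "Top Secret": 3}


@dataclass(frozen=True)
class Label:
    level: str
    compartments: frozenset[str] = frozenset()


def label(level: str, *compartments: str) -> Label:
    return Label(level, frozenset(compartments))


def dominates(a: Label, b: Label) -> bool:
    # a dominates b when a's level is at least b's and a has all of b's compartments.
    return RANK[a.level] >= RANK[b.level] and a.compartments >= b.compartments


def can_read(subject: Label, obj: Label) -> bool:
    return dominates(subject, obj)


if __name__ == "__main__":
    ts_ufo = label("Top Secret", "UFO")
    print(can_read(label("Top Secret"), ts_ufo))       # missing the UFO compartment
    print(can_read(label("Secret", "UFO"), ts_ufo))    # level too low
    print(can_read(label("Top Secret"), label("Secret", "UFO")))
```

Both conditions must hold, which is why neither a higher level alone nor the right compartment alone is enough.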

The lattice model is a way of representing access rights in a multilevel, multilateral security environment. It is used to enforce security policies in systems that require the classification and separation of data based on sensitivity levels and clearances. It is commonly employed in environments where multi-level security (MLS) is essential, such as in government or military settings.

Each node is a set containing a security classification level and zero or more compartment labels (see Figure 10). A directed edge between a pair of nodes identifies read access. For example:

- Someone with {Top Secret, UFO, Elvis} labels can read {Top Secret, UFO} objects.

- Someone with {Top Secret, UFO} labels cannot read {Top Secret, Elvis} objects.

- Someone with {Top Secret, UFO} labels can read {Top Secret} objects.

The relations are transitive: because {Top Secret, UFO} can read {Top Secret} and {Top Secret} can read {Secret}, that means {Top Secret, UFO} can read {Secret}.

A problem with compartmentalization is that it creates more data isolation. Combining data from two compartments, for example {Top Secret, Elvis} and {Top Secret, UFO}, creates a third compartment, {Top Secret, Elvis, UFO}. Real organizations often have thousands of compartment labels, which can create a combinatorial explosion of distinct compartments and hinders data sharing even when it might be useful. One option to limit compartmentalization is to change the rules and give a subject at a higher classification read access to all lower levels. Thus, someone with {Top Secret} clearance would be able to read {Secret, UFO} and {Secret, Elvis} data even without having been granted the UFO or Elvis compartments.
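Combining labels corresponds to the lattice’s join (least upper bound): take the higher level and the union of the compartments. The sketch below also counts how many distinct labels are possible and implements the relaxed read rule described above. Labels are plain (level, compartments) tuples here, an assumption made for brevity; the function names are hypothetical.

```python
RANK = {"Unclassified": 0, "Confidential": 1, "Secret": 2, "Top Secret": 3}

Label = tuple[str, frozenset[str]]  # (classification level, compartments)


def join(a: Label, b: Label) -> Label:
    # Least upper bound: data combined from a and b needs the higher level
    # and every compartment from both.
    level = a[0] if RANK[a[0]] >= RANK[b[0]] else b[0]
    return (level, a[1] | b[1])


def possible_labels(num_levels: int, num_compartments: int) -> int:
    # Each level can be paired with any subset of the compartments.
    return num_levels * 2 ** num_compartments


def relaxed_can_read(subject: Label, obj: Label) -> bool:
    # Relaxed rule: a strictly higher level may read everything below it;
    # at the same level, the subject still needs all of the object's compartments.
    if RANK[subject[0]] > RANK[obj[0]]:
        return True
    return RANK[subject[0]] == RANK[obj[0]] and subject[1] >= obj[1]


if __name__ == "__main__":
    elvis = ("Top Secret", frozenset({"Elvis"}))
    ufo = ("Top Secret", frozenset({"UFO"}))
    print(join(elvis, ufo))
    print(possible_labels(4, 20))
```

Even 20 compartments across four levels yield over four million possible labels, which illustrates the combinatorial explosion.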

Chinese Wall model

A Chinese Wall is a set of rules that are designed to prevent conflicts of interest. It is commonly used in the financial industry but is also common in law firms, consultancies, and advertising agencies. For example, banks have corporate advisory groups that work with companies on mergers and acquisitions, debt financing, and IPOs. Banks also have a brokerage group that handles investments. It would be a conflict of interest – and illegal – for an advisory group member to tell someone in the brokerage group about a pending acquisition that will likely affect the stock price of a company. Hence, a Chinese wall is erected between these divisions.

The Chinese Wall model can also implement a separation of duty. To avoid fraud, for example, a person may be permitted to perform any one of several necessary transactions but is explicitly disallowed from performing more than one.

There are three layers of abstraction in implementing a Chinese wall:

Objects. These are files that contain information about one company.

Company groups. These are the set of files that belong to one company.

Conflict classes. These identify groups of competing company groups. For example, {Coca-Cola, Pepsi} could be a conflict class. So could {American Airlines, United, Delta, Alaska Air} or {AT&T, Verizon, T-Mobile}.

The basic rule of the Chinese Wall is that a subject can access objects from a company as long as it has never accessed objects from a competing company.

More formally, the Chinese Wall model has two properties:

- The Simple Security Property: A subject s can be granted access to an object o only if o is in the same company group as an object already accessed by s, or o belongs to a conflict class different from those of all objects s has already accessed.

- The *-Property (Star Property): Write access is allowed only if access is permitted by the simple security property and no object containing unsanitized information can be read from a company dataset other than the one for which write access is requested.

The term unsanitized information refers to data where the company’s identity is known. In some cases, data may be sanitized, meaning that the company’s identity is disguised to allow generation of bulk analytics or comparisons. For example, you may have access to the average profit margins among all competitors but are not allowed to see the profit margin for any specific competitor.
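A minimal sketch of both properties, assuming each subject keeps a history of the objects it has read and that sanitized objects may be read by anyone. The conflict classes reuse the examples above; the class names, object names, and helper functions are hypothetical.

```python
from dataclasses import dataclass, field

# Hypothetical conflict classes from the examples above: company -> conflict class.
CONFLICT_CLASS = {
    "Coca-Cola": "beverages", "Pepsi": "beverages",
    "AT&T": "telecom", "Verizon": "telecom", "T-Mobile": "telecom",
}


@dataclass(frozen=True)
class Obj:
    name: str
    company: str             # company group (dataset) the object belongs to
    sanitized: bool = False  # True if the company's identity is disguised


@dataclass
class Subject:
    history: set[Obj] = field(default_factory=set)  # objects already read


def can_read(s: Subject, o: Obj) -> bool:
    # Simple security property. Sanitized data reveals no company, so it is always allowed.
    if o.sanitized:
        return True
    for prev in s.history:
        if prev.sanitized:
            continue
        same_class = CONFLICT_CLASS[prev.company] == CONFLICT_CLASS[o.company]
        if same_class and prev.company != o.company:
            return False  # o belongs to a competitor of a company s already accessed
    return True


def read(s: Subject, o: Obj) -> bool:
    if not can_read(s, o):
        return False
    s.history.add(o)  # the wall is built from the subject's own access history
    return True


def can_write(s: Subject, o: Obj) -> bool:
    # *-property: the access must be allowed, and s must not have read unsanitized
    # data from any company other than o's (that data could leak into o).
    if not can_read(s, o):
        return False
    return all(prev.sanitized or prev.company == o.company for prev in s.history)
```

The write check is stricter than the read check: after reading AT&T data, a subject may still read Coca-Cola files but may no longer write to them, since AT&T information could otherwise flow into a Coca-Cola dataset that someone else with Verizon access can read.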

SELinux (Security Enhanced Linux)

Linux can be augmented with various security modules. SELinux is a Linux security module originally developed by the NSA to introduce Mandatory Access Control (MAC) to Linux. It has evolved into a widely used security architecture that enforces strong access control mechanisms to secure Linux systems. SELinux ensures that even privileged users, such as root, are subject to strict security policies that cannot be altered or bypassed at their discretion, providing a much more robust security model compared to traditional Discretionary Access Control (DAC) systems.

Type Enforcement (TE)

The core of SELinux is the Type Enforcement (TE) model, which is designed to provide fine-grained access control over all processes and objects in the system. TE is a form of MAC where access control decisions are made based on the type labels assigned to both subjects (processes) and objects (files, devices, etc.).

- Domains: In SELinux, processes are assigned domain labels, which define what actions the process can perform and on what resources. For example, the domain associated with a web server process will define which files it can read, write, or execute, and it will prevent access to files outside of its permitted scope. Domains apply to subjects (processes).