Virtual Synchrony

Paul Krzyzanowski

October 3, 2022

Goal: Combine atomic multicast with failure detection and group membership. The software framework for virtual synchrony gives everyone the same view of live group members, ordered message delivery, and atomic multicasting of messages to all of these members.

Background

Fault tolerance

We often deploy distributed systems in an attempt to achieve both high availability and high scalability. High availability refers to the fraction of time that the system is up and running and capable of doing whatever it is supposed to do. High scalability refers to the ability to expand the system to handle higher amounts of traffic. Both of these goals are often achieved via redundancy: adding replicated components to the system. Redundant components can step in when some components break, thus helping with availability. Active-passive replication means that the redundant components are generally not servicing requests but are ready to take over for a failed component. They will get updates, possibly asynchronously, but will not handle client requests. Active-active replication means that the workload is distributed among all components, thus helping with scalability as well as availability. Each component only handles a fraction of the workload.

We examined fault tolerance previously but understanding the nature of certain faults is crucial to designing robust communication mechanisms. Faults may be either fail-silent or Byzantine. With fail-silent faults, the failed system is effectively dead: it takes no input and produces no output. With Byzantine faults, the system continues to run but runs in a faulty manner, producing incorrect output. With a fail-silent system, the system can remain silent. This is called fail-stop operation. A process that exited or a computer that was shut off are examples of a fail-stop system. Alternatively, we may have an environment where the system comes back online. For example, the failed computer may restarted or the dead process may be relaunched. This is called fail-restart (or fail-recover) operation. The consideration with fail-restart, as we’ll shortly see, is that the recovered process may be unaware of what transpired in the overall system during the time it was dead.

Communication systems may be synchronous or asynchronous. A synchronous system is one where there is a known time limit to delivering, receiving, and processing a message. A USB link is an example of a synchronous system. An asynchronous system, on the other hand, is one where there is no time bound on when a message arrives and gets processed. The IP network is an example of an asynchronous communication system. Arbitrary routes and queueing delays destroy any assurance of fixed time limits. Moreover, process and kernel thread scheduling provides another level of uncertainty for when the packet will make its way through the network protocol stack and to the application.

In designing distributed systems, we generally assume that processes are concurrent, asynchronous, and failure prone. In this environment, we assume we have “correct” processes. If the processes are working, they are working correctly and produce good data. If they fail, they exhibit fail-silent behavior. Mechanisms such as error-detecting codes can be used to detect possible network-based corruption. Communication is assumed to be fail-silent and asynchronous.

We have a fundamental problem in detecting a failure in this environment. The two armies problem (also known as the two generals' problem) provides an illustration.

The general of army A sends a messenger to B saying, “let’s attack at dawn.” The communication line is faulty as the messenger may be killed or captured along the route. Therefore, the A’s general requests that the messenger return to ensure that the message was delivered (this is a message acknowledgment). The messenger does indeed return. Can the divisions attack with confidence that both have the message? Not really.

The general of A knows that B received the message. However, B’s general does not know if the messenger returned successfully to A. If the messenger didn’t make it back to A, then A will not attack and B, fighting on its own, will lose. To fix this issue, B requests the messenger return back to B (acknowledging the acknowledgment). Now B knows that A received the acknowledgment. Are we good?

Unfortunately, not. Now A does not know if the messenger made the trip back to B. If B does not receive the message that A got the acknowledgment, it will not attack. We can continue this process indefinitely, sending more and more levels of acknowledgments. Although we may have a high level of confidence that the messages are being received, we will never know for sure.

The two-armies problem demonstrates that it is impossible to achieve consensus with asynchronous and possibly faulty communication lines. We have no foolproof way of determining whether a process has failed or whether it is alive but either not communicating or not communicating quickly enough. This is a fact of life in distributed systems.

State machine replication

It is usually not sufficient to replicate systems by simply having extra hardware with installed software. We need the state of the software to be synchronized among all the replicas. For example, if a database field, file, or setting is modified on one system, it should be modified on all the replicas. This will ensure that client requests can be processed identically regardless of which server the client contacts.

The set of modifications that take place on any system can be viewed as a state machine. A state machine refers to any deterministic program that takes inputs, produces outputs, and maintains internal state (which may affect the outputs it yields). A replicated state machine is a set of identical state machines (programs) that are currently running on several servers. If each one of them is fed the same set of inputs in the same sequence, each will update its internal data (e.g., file changes or variables) in the same way and produce the same outputs.

We refer to the set of replicated processes running on a set of servers as a process group. The process group enables load balancing as queries can be sent to any server. Modifications will, of course, have to be sent to all replicas but read operations far outnumber writes in many applications. The process group also enables fault tolerance since it is acceptable for some replicas to die as long as others are still running and can handle incoming requests.

For the process group to function correctly, it is important for all the replicas to keep the same state. To do this, each of the processes in the process group needs to receive inputs in the same order. More precisely, causally-related messages should be processed in the same order. Ordering of messages among concurrent client processes should not affect the state of outputs produced by the replicas.

We achieve fault tolerance because we have replicated systems – all processing the same inputs and making the same set of changes. However, if one of the replica processes is dead, it will not receive any updates. When it restarts (for example, the system reboots), its data (state) will not be up to date; the system will be in a state prior to the updates. It has stale state (meaning it has old data).

The two armies problem and our environment of asynchronous, faulty processes gives us an environment where we cannot reliably detect a failed process. For instance, the process may be alive but it might be slow to respond and we may time out waiting for a response and thus assume it is dead. Even if the process is dead, it may recover (i.e., be restarted) at a later time and have stale state.

We can deal with this problem by propagating to the entire group the knowledge that we think a process failed. If we suspect that a process is dead, we will simply take it out of the group. If it recovers (or was just slow to respond previously), it will have to rejoin the group.

Virtual synchrony

Virtual synchrony gives us a model for managing a group of replicated processes (state machines) and coordinating communication with that group. With virtual synchrony, a process can join or leave a group (one of the replicated servers) – or be evicted from the group.

A virtually synchronous system is one where:

- Even though messages are sent asynchronously, they appear to be processed in a synchronous manner.

- The system provides guarantees about the delivery order of messages.

- Nodes in the system have a consistent view of the group membership (i.e., which nodes are part of the system).

Basically, even though the underlying communication between nodes is asynchronous (messages can be delayed, reordered, or lost), the system provides an abstraction layer that gives the illusion of reliable, synchronous operation.

Any process can send a message to the group and the virtual synchrony software will implement an atomic multicast to the group. Recall that an atomic multicast is the strongest form of reliable delivery, ensuring that a message must either delivered to all processes in the group or else none of them can process the message, even if they received it. This all-or-nothing property is central to keeping all members of the group synchronized. Message ordering requirements can often be specified by the programmer – libraries provide different options – but we nominally expect causal ordering of any messages that effect changes so that we can ensure consistency among the replicas.

Virtually synchronous systems are important for several reasons:

Reliability: By ensuring that messages are seen in the same order by all nodes, the system can tolerate failures without causing inconsistencies. If one node fails, others can continue the operation, ensuring that the system is fault-tolerant.

Consistency: The guarantee about the delivery order of messages and a consistent view of group membership means that all nodes in the system have a shared view of the data, ensuring data consistency.

Abstraction for Developers: With the illusion of synchrony, it’s easier for developers to reason about the system’s behavior and design algorithms that work on top of it.

Views

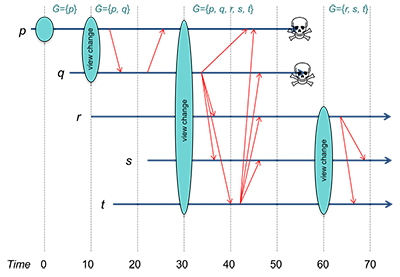

Virtual synchrony defines a group view as the set of processes that are currently in the group. These are live processes that are capable of communication. Each group multicast message is associated with a group view and every process in the system has the same group view. There will never be the case where one process will see a different set of group members than another process.

Whenever a process joins or leaves a group – or is forced out of a group, the group view changes. This change information will be propagated to all group members. After all, we need to ensure that everyone has the same group view. Group view information is shared with a view change message. This is a multicast message that announces a process joining or leaving a group.

Since we cannot reliably detect process failure, we rely on timeouts to assume that a process is dead. If any process times out waiting for a response from a process, a group membership change will take place and that non-responding process will be removed from the group. Since it is no longer in the group, it will not receive any messages sent to the group.

Group membership events

Group members may receive any of three types of events:

Regular message. This message is simply treated as an input to the program (the state machine), although its delivery to the application may be delayed based on message ordering policy and our ability to be sure that other group members have received the message.

View change. A view change is a special message that informs the process of a group membership change. This will affect any multicasts that are generated by the process from this point forward. We will discuss the view change in more detail shortly.

Checkpoint request. If a process joins a group, it needs to bring its state (internal state as well as stored data) up to date so that it contains the latest version and is synchronized with the other replicas. To do this, a process may send a checkpoint request message to any other process in the group. That process will send its current state to the requesting process.

View changes

A view change is a bit more complex than simply informing a process that there are more or fewer members in the group. Suppose we have a view change because a new process is joining the group. At the same time, we have some regular messages being multicast to the group. We cannot allow the condition where some messages may be delivered to the old group and some other messages may be delivered to the new group because the sender received the view change message.

We need to guarantee atomicity: all or nothing behavior. A message m must be delivered to all processes in a group view G before any of the processes in the group will apply the view change message or else it must be delivered to every process in the new group view G'. Reliable processes with this property are virtually synchronous. Any multicast must take place within a specific group view and cannot straddle two views. Hence, a view change acts as a barrier.

Isis toolkit

The Isis toolkit, created at Cornell University, is an example of a fault-tolerant distributed system that provides virtual synchrony. It is designed to provide high membership update rates and demonstrated an ability to handle hundreds of thousands of events per second on commodity hardware back in 2009.

Isis provides distributed consistency. Applications can create and join groups and send multicasts to group members. All applications see the same events in an equivalent order (“equivalent order” generally means a causal order, although that can be configured in the toolkit API). New group members can update their group state to that of an existing group member. View change operations are processed in a sequential and fault-tolerant manner. That is, a view change cannot take place if a previous view change is in progress.

Isis has been used by the New York Stock Exchange, the Swiss Exchange, the U.S. Navy Aegis Weapon System, and many other environments. Systems that are conceptually similar to Isis include Microsoft’s scalable cluster service, IBM’s Distribution and Consistency Services (DCS), CORBA (Common Object Request Broker Architecture; a feature-rich RPC framework), and Apache Zookeeper (a configuration, synchronization, and naming service).

Isis implementation

The Isis toolkit is designed to work with commodity hardware and commodity networks. Message transmission is assumed to be asynchronous (for example, IP). Messages may also be received in a different order than they were sent.

To achieve virtual synchrony, the toolkit must preserve the illusion that events take place in the same order at all replicas. A process in Isis sends multicast messages either via multiple point-to-point UDP messages or the use of IP multicast, if available. One reason that TCP is not used is that, although it implements reliable delivery, it does not send acknowledgments of delivery to the application, which is crucial to the implementation of the Isis toolkit. Also, even though TCP provides reliable delivery thanks to retransmitting lost or damaged packets, we still do not have assurance that all group members will receive a multicast message since there is a chance that the sender may fail before the multicast send has completed.

Isis supports causally and totally ordered multicast options for message ordering:

- Causal ordering (CBCAST protocol):

- This uses vector timestamps in the form of precedence vectors.

- Total ordering (ABCAST protocol):

- Isis implements total ordering without a central sequencer by using a two-phase protocol. Messages are transmitted without ordering. They are queued but not delivered to the application until they are ordered.

Total ordering implementation

Here’s how total ordering is implemented in Isis.

Every machine maintains its own logical clock (sequence counter) that is incremented each time a message is sent or received. This is a Lamport clock and timestamps must be unique, which can be done by appending a unique process ID (e.g., a combination of the machine’s address and process ID) to the value.

Phase 1:

a. The sending process i increments its local clock and multicasts the message m with the timestamp, Ti.

b. Every process j that receives the message updates its local timestamp to the greater of its current timestamp or the timestamp in the message: max(Ti, Tj).

c. The receiving process j increments its clock.

d. The message is queued at priority Tj at the receiver

e. The receiving process sends an acknowledgment for the message to process i with the timestamp Tj

Phase 2:

a. When the sender collects all the acknowledgments, it selects the maximum timestamp of all the acknowledgments: Tm

b. The sender multicasts a message identifying message m and timestamp Tm as being deliverable.

c. Upon receiving the deliverable message, the receiver then moves message m to the delivery queue where it can be delivered in timestamp order to the application.

Group Membership Service

A central component of the Isis toolkit is the Group Membership Service (GMS). This is a network service that maintains the systemwide view of group members. Whenever any process p suspects a failed process q, it reports it to the GMS. The GMS reports the view change to every process that had a connection with q and removes q from the process group. If q is alive (or restarts), it will need to rejoin the group. The GMS maintains the master view of group memberships and propagates that information to all the members so everyone has the same knowledge of group members.

State transfer and view change

When a new or restarted member joins a group, it will need to update itself to get the current state of the system for that group. It does this by sending a checkpoint request message to any existing member of the group. This initiates a state transfer where the group member sends a dump of its state to the new member.

A state transfer is part of a group-wide view change. Even though the state transfer may take some time to complete, it — as well as the overall view change — has to be treated as an instantaneous event by the system. We have to ensure that any messages not yet delivered to any non-faulty processes get delivered before the view change is complete since those earlier messages were sent to members in the previous group. More formally, we have to guarantee that all messages sent to view Gi are delivered to all non-faulty processes in Gi before the view change to Gi+1.

Message delivery

A process will loop through its list of group members and send a message to each member reliably using TCP. However, these received messages cannot be delivered to the application unless the process is certain that every group member has received the message.

In cases where the sending process does not fail (most of the time!), a subsequent message, in addition to carrying the message payload, also indicates that the previous message has been successfully transmitted to all recipients (this is a form of piggyback acknowledgments, where we avoid sending a separate acknowledgment message but rather include the acknowledgment in the next message that we transmit). At that time, a process can deliver the received message so it can be processed by the application. If a process has a received message and has been told that all other group members have also received the message, that message is considered to be stable. Until that time, the received message is considered to be unstable. Stable messages can be delivered to applications; unstable messages cannot be delivered and sit in a hold-back queue.

Message delivery and sender failure

If a process died either partway through multicasting a message (so that only some group members received it) or before it was able to inform the group members that the message was successfully received by every group member, we may have unstable messages sitting in the holdback queue at some processes in the group.

When the death of the sender is detected, the process is removed from the group and a view change will take place. However, we have the situation where messages were sent to some, but possibly not all, group members before the sender died. We need to handle this situation during a view change and figure out how to turn unstable messages into stable messages.

Upon receiving a view change message, a process receives will multicast all unstable messages to the entire group (the group prior to the new group defined by the view change). Effectively, each process takes over the delivery from the original, failed, sender. Note that we may have a flurry of activity with several processes sending identical messages to all group members. Each message must be uniquely identified (e.g., a unique sequence number, such as a totally-ordered Lamport timestamp) and each process must discard duplicate messages.

When each process is done transmitting its unstable messages, it sends a flush message to each group member and waits for an acknowledgment. An acknowledgment means that the receiver has delivered all messages to the application. The view change is complete when the flush messages from all group members have been acknowledged. At this time we know that there are no undelivered messages in any process' hold-back queue that were sent during the previous group view. Any messages sent from this point onward will be sent to the new group view.

Summary

Virtual synchrony provides a highly efficient way to send group messages with atomic delivery, ensuring that all group members are consistently replicated. It is not a transactional system, which would require resource locking and one-message-at-a-time processing. Message ordering policies can be configured in the framework and are generally causal within a view, thus ensuring that related events are consistently ordered at all replicas. A view change acts as a barrier so that all messages that were sent in an earlier view will be delivered within that view.

A group membership service (GMS) provides a consistent view of group membership. If any process suspects a failed process (e.g., because of a timeout to a message), it informs the GMS, which removes it from the group. If any process wants to join a group, it contacts the GMS service as well. Whenever the group membership changes, the GMS initiates a view change.

Every process sends messages to all group members via a reliable multicast (using TCP and looping through a list of group members). Receiving processes can acknowledge these messages asynchronously. When the sender received acknowledgments that every group member has received a specific message, that message is now considered stable and can be delivered to the application. The sender will send messages to the group members informing them which messages are stable. These messages are often piggybacked together with other data that is being sent to the group.

If a sending process dies partway through a multicast, any messages that it has sent and not acknowledged are unstable and may not have arrived at all processes in the group. The dead sender is removed from the group, forcing a view change to take place.

During a view change, each process sends all its unstable messages to all group members and waits for acknowledgments. Any messages that a process receives that are not duplicates are considered stable and are delivered to the application. Finally, each process sends a flush message to the group. A group member acknowledges every flush message it received after it has delivered all of its now-stable messages to the application. When all flushes are acknowledged, the view change is complete.

The biggest deficiency in the virtual synchrony model is its inability to deal with network partitions. Quite simply, the framework assumes that they do not occur. If one group of processes becomes segmented from another, each subgroup will assume that the other subgroup is dead. Each subgroup can then continue operating on its own but will get differing inputs and the state of both subgroups will diverge. We will later remedy the partition problem with consensus protocols.

The software for virtual synchrony must also be designed to support the failure of the group management service. Fortunately, it does not contain any information that group members do not have - group view membership is propagated to all live processes. Hence, any live process can function as a Group Management Service and, if it dies, surviving members can elect another process to take on that role (we will look at elections in the next lecture).

References

Kenneth Birman and Robert Cooper. The ISIS Project: Real Experience with a Fault Tolerant Programming System, TR 90–1138, July 1990, Department of Computer Science, Cornell University. _

Kenneth Birman, Andre Schiper, and Pat Stephenson. Fast Causal Multicast. Tech. rep. Contractor Report 19900012217. National Aeronautics and Space Administration, Apr. 1990.

Kenneth P. Birman and Thomas A. Joseph. Reliable Communication in the Presence of Failures. Vol. 5. 1. Feb. 1987, pp. 47–76. DOI: 10.1145/7351.7478.

Causal Ordering of Messages in Distributed System, geeksforgeeks.org.

Ethan Blanton, Ordered Multicast, Department of Computer Science and Engineering, University at Buffalo. 2021.

This is a 2023 update of an original document written in October 2016.