Group communication

Multicast

Paul Krzyzanowski

October 3, 2022 (updated September 30, 2023)

Goal: Control how we communicate with a group of processes

Introduction

Most of our communication between computers is point-to-point. This is known as unicast. We use unicast to send messages between a client and a server. It’s what we use for implementing higher-level protocols, such as remote procedure calls (RPC) and web services.

A variant of unicast communication is anycast. Anycast is a defined address type in IPv6 and is also point-to-point communication (as in unicast), but the receiver is the nearest one of possibly multiple receivers with the same address. For example, IPv6 uses this to allow a host to update the routing table of the nearest host. It is also sometimes used for reading data from content delivery networks, where replicated servers store identical copies of content. Anycast is a special-purpose form of unicast that we will not discuss here. IPv4 has no distinct anycast address type; there, anycast is achieved through routing, by advertising the same address from multiple locations.

An alternative to point-to-point communication is point-to-multipoint, or group communication. Group communication is called multicast and it gives programmers the abstraction of sending a single message that is delivered to all group members.

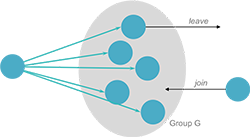

Groups are generally dynamic (Figure 1). They may be created and destroyed. Processes may join or leave groups and processes may belong to multiple groups. An analogy to group communication is the concept of a mailing list. A sender sends a message to one party (the mailing list) and multiple users (members of the list) receive the message. Groups allow processes to deal with collections of processes as one abstraction. Ideally, a process should only send a message to a group and need not know or care who its members are.

Implementing group communication

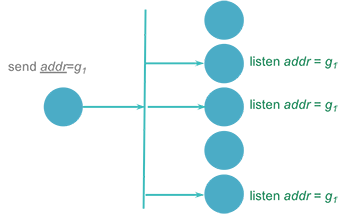

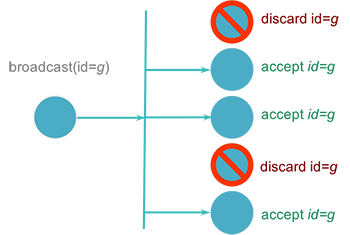

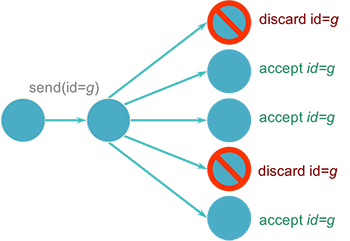

Group communication can be implemented in several ways. Hardware support for multicasting allows the software to request the hardware to join a multicast group. Messages are sent to the multicast address for the group and will be received by all network receivers listening on that group (Figure 2). If the hardware does not support multicasting, an alternative is to use hardware broadcast and add software filters at the receivers. Each message is tagged with a multicast address (some identifier). The software processing the incoming messages extracts this address and compares it with the list of multicast addresses that it accepts. If it is not on the list, the message is simply dropped (Figure 3). While this method generates overhead for machines that are not members of the group, it requires the sender to send out only a single message.

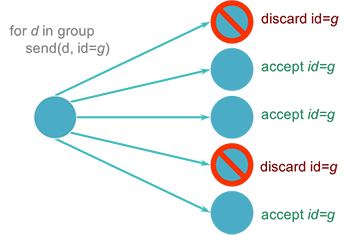

Another implementation option is to simulate multicasting completely in software. In this case, a separate message will be sent to each receiver. This can be implemented in two ways. The sending process can know all the members of the group and send the same message to each group member (Figure 4).

Alternatively, some process can be designated as a group coordinator: a central point for group membership information (Figure 5). The sender will send one message to the group coordinator, which then iterates over each group member and sends the message to each member.
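The two software-only options can be sketched as follows. This is a minimal illustration, not a real transport: `send` is a hypothetical unicast primitive supplied by the caller, and the names `multicast_from_sender` and `GroupCoordinator` are made up for this example.

```python
from typing import Callable, List

# Hypothetical unicast primitive: send(dest, message).
Send = Callable[[str, str], None]


def multicast_from_sender(members: List[str], message: str, send: Send) -> None:
    """Figure 4 style: the sender knows every member and unicasts to each."""
    for dest in members:
        send(dest, message)


class GroupCoordinator:
    """Figure 5 style: one process holds the membership list and relays."""

    def __init__(self, send: Send) -> None:
        self._send = send
        self._members: List[str] = []

    def join(self, member: str) -> None:
        if member not in self._members:
            self._members.append(member)

    def leave(self, member: str) -> None:
        if member in self._members:
            self._members.remove(member)

    def relay(self, message: str) -> None:
        # The original sender sent one message to the coordinator;
        # the coordinator iterates over the members on its behalf.
        for dest in self._members:
            self._send(dest, message)
```

With the coordinator, the sender does not need to know who the members are, at the cost of an extra hop and a central point that must stay alive.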

As with unicast communication, group communication also requires a transport-level protocol. Even with hardware support, there must be a mechanism for directing data to the interested process(es).

Remote procedure calls (RPC) were, for many applications, more convenient and intuitive than the send/receive (write/read) model provided by sockets. Remote procedure calls, however, do not lend themselves to group communication. RPC is based on a function call model wherein a procedure is called and a value returned as a result. If we try to apply this to group communication, one message is sent to the group to invoke the procedure. The return value is not clear now, since every member of the group may generate one. RPC does not expect this behavior. We will fall back to using send/receive primitives when working with a group.

Design Issues

A number of design alternatives for group communication are available. These will affect how the groups behave and send messages.

- Closed group vs. open group

  - With closed groups, only the group members may send a message to the group. This is useful when multiple processes need to communicate with others in solving a problem, such as in parallel processing applications.

  - The alternative is open groups, where non-members can send a message to a group. An example use of this type of group is an implementation of a replicated server (such as a redundant file system).

- Peer groups vs. hierarchical groups

  - With peer groups, every member communicates with each other. The benefits are that this is a decentralized, symmetric system with no single point of failure. However, decision-making may be complex since all decisions must be made collectively (a vote may have to be taken).

  - The alternative is hierarchical groups, in which one member plays the role of a group coordinator. The coordinator makes decisions on who carries out requests. This allows the workload of sending multicasts to be distributed among multiple sub-coordinators, each of which handles sending messages to only parts of a group. Decision-making is simplified since it is centralized. The downside is that this is a centralized, asymmetric system and therefore has a single point of failure.

- Centralized group membership vs. distributed membership

  - If control of group membership is centralized, we will have one group server that is responsible for getting all membership requests. It maintains a database of group members. This is easy to implement but suffers from the problem that centralized systems share – a single point of failure.

  - The alternative mechanism is to manage group membership in a distributed way where all group members receive messages announcing new members (or the departure of members).

Several problems can arise in managing group membership. Suppose a group member crashes. It effectively leaves the group without sending any form of message informing others that it left the group. Other members must somehow discover that it is missing.

Leaving and joining a group must be synchronous with message delivery. No messages should be received by a member after leaving a group. This is easier to achieve if a group coordinator/group server is used for message delivery and membership management.

A final design issue is fault tolerance. If the processes, the computers they run on, and/or the network die so the group cannot function, how are things restarted?

There are two considerations in implementing group communication (multicast): reliability and message ordering.

Message receipt versus message delivery

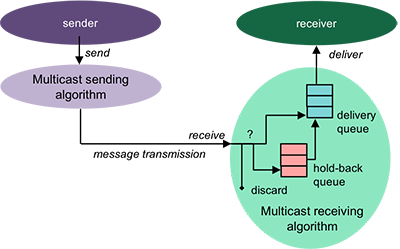

We talk about a process receiving a message, which means that it is received from the network and handled by a multicast receiving algorithm. This algorithm decides when to deliver the message to the application logic.

Since receivers cannot control the order in which messages are received over the network, a layer of software is responsible for transmitting a multicast message and, at the receiver, receiving it and deciding when and if to make it available to the application (i.e., deliver it). When a message is received, the multicast receiving algorithm may take one of three actions:

Discard the message. This may be done if the receiver is no longer a member of the group or if the message is a duplicate.

Deliver the message. The message is placed into a FIFO (first-in, first-out) queue from which the application reads incoming multicast messages.

Hold the message. The message is not ready to be delivered to the application. Most likely, this is because it has not arrived in the expected order and the algorithm must first receive an earlier message. Alternatively, it may need to be held to get feedback that all other members have received it before passing it to the application. In cases like this, the message is placed on a hold-back queue. When the next message is received, the algorithm may check the hold-back queue to determine whether any held messages can now be delivered or discarded.
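The three actions can be sketched as a small receiver class. This is an illustration under simplifying assumptions: a single sender numbers its messages 1, 2, 3, ..., and "ready" simply means "next in sequence". The class and attribute names are hypothetical.

```python
from collections import deque


class MulticastReceiver:
    """Decides whether to discard, deliver, or hold each received message."""

    def __init__(self):
        self.next_expected = 1          # sequence number we can deliver next
        self.holdback = {}              # seq -> payload, waiting for earlier messages
        self.delivery_queue = deque()   # FIFO queue the application reads from

    def receive(self, seq, payload):
        # Discard: already delivered, or already sitting in the hold-back queue.
        if seq < self.next_expected or seq in self.holdback:
            return "discard"
        # Hold: an earlier message has not arrived yet.
        if seq > self.next_expected:
            self.holdback[seq] = payload
            return "hold"
        # Deliver: this is the message we were waiting for.
        self.delivery_queue.append(payload)
        self.next_expected += 1
        # A delivery may unblock messages held earlier.
        while self.next_expected in self.holdback:
            self.delivery_queue.append(self.holdback.pop(self.next_expected))
            self.next_expected += 1
        return "deliver"
```

For example, receiving message 2 before message 1 returns "hold"; once message 1 arrives, both are placed on the delivery queue in order.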

Message Reliability

Atomic multicast

One desirable property for certain types of group communication is ensuring that all group members get a message. More specifically, if a message is sent to a group and one member receives it, that member can be sure that all members will get the message. This is an all-or-nothing property: the message either arrives correctly at all members or else it is not delivered to any member. There will never be a situation where some members receive the message and others do not. This property is known as atomicity and this type of multicast is called an atomic multicast. An atomic multicast is appealing because it makes application design easier in that there is one less thing to worry about – missing or partially delivered messages.

While this property is desirable, it is not easy to achieve. To be certain that a destination received a message, it will have to send back an acknowledgment (ACK) message upon receiving the message. This is prone to problems since some replies can be lost, the sender may have crashed after sending the message and cannot process the replies, or the receiver crashed before it could send a reply. What we need to do to achieve an atomic multicast is to ensure that we can deliver messages even with process failures.

Implementing atomic multicasts

There are several ways that we can achieve an atomic multicast. One way is to use a persistent log. This is an approach adopted in the two-phase commit protocol used by many distributed databases and transaction processing systems. The persistent log is simply a series of messages written onto a disk or some non-volatile memory so that it can be recovered even if the process or entire system dies and later restarts. Should a process die, it is responsible for reading the log when it comes up again.

In this system, the sender writes the message to the log. This way, even if it dies it will be able to recover and retrieve the message it needs to send. It sends messages to all members of the group and waits for an acknowledgment from each member. The sender saves a copy of the message in the log and also logs acknowledgments from receivers. This way, even if it dies, it can resume where it left off once the process is restarted. If an acknowledgment has not been received from a member, the sender will retransmit periodically until the member acknowledges the message. On the receiving side, a group member logs the received message into its persistent log upon receiving the message and prior to sending the acknowledgment. If the group member dies now, it will have the message in its log when it restarts. When all members have acknowledged receipt of the message, the sender can then send a “deliver” message, instructing each member to deliver the message to the higher layers of the software that will process the message.

This solution is somewhat troublesome to implement in terms of logging and recovering from failed processes. It is also time-consuming since messages have to be written to a permanent log instead of sitting in memory. The essential point is that the protocol must account for the sender crashing after it sent some or all of the messages and for receivers that may be dead at any point during the multicast.

Another approach to multicast is the one adopted in virtual synchrony (which we will examine later). Here, we accept the fact that receivers can die and do not wait for them to recover. Instead, we redefine the group to exclude them and make sure that a multicast message reaches all members of the new group. If a receiver recovers, it will have to rejoin the group and update its state as necessary. The thing we have to deal with now is the fact that the sender might die partway through the multicast.

To account for the sender’s death, it can tell all group members that every member received a message when it is done. Until they get this message, each group member must hold on to a copy of the message. If the sender dies partway through the multicast, all receivers that have these incompletely delivered messages will take on the task of transmitting them to all group members and then telling the members that the entire group received the message.

Reliable multicast

A compromise to atomic multicast is to assume that the sending process will remain alive to ensure that a message was sent out to all members of the group. This is called a reliable multicast. It is a best-effort attempt at reliability but makes no guarantees in the case where the sender is unable to send messages to, or receive messages from, other group members. One implementation can be:

- Set a long timer, TL. This will be used to detect a non-responding process.

- Set a shorter timer, TS. This will be used to detect lost messages or lost acknowledgments.

- Send a message to each group member.

- Wait for an acknowledgment message from each group member.

- If timer TS goes off, then retransmit the message to members that have not responded, reset the timer, and wait.

- If timer TL goes off, then label the non-responding processes as “failed” and (optionally) remove them from the group.

In the best case, if multicast or broadcast facilities are available, the sender needs to only send one message. If these facilities are not available, they can be simulated:

    for dest in group:
        send(dest, message)

Each recipient sends one message as an acknowledgment.
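The timer-driven procedure above can be sketched as a small simulation. To keep it runnable without a network, time is modeled as discrete ticks rather than real timers: TS and TL become `ts_ticks` and `tl_ticks`. The callables `send` and `poll_acks` are hypothetical stand-ins for the transport.

```python
from typing import Callable, Iterable, Set


def reliable_multicast(
    members: Iterable[str],
    send: Callable[[str], None],
    poll_acks: Callable[[int], Set[str]],
    ts_ticks: int = 2,
    tl_ticks: int = 6,
) -> Set[str]:
    """Send to every member, retransmit on TS, give up on TL.

    Returns the set of members labeled "failed" (empty if all acknowledged).
    """
    pending = set(members)
    for dest in sorted(pending):
        send(dest)                       # initial transmission

    for tick in range(1, tl_ticks + 1):
        pending -= poll_acks(tick)       # acknowledgments that arrived this tick
        if not pending:
            return set()                 # everyone acknowledged
        # Short timer TS: retransmit to members that have not responded.
        if tick < tl_ticks and tick % ts_ticks == 0:
            for dest in sorted(pending):
                send(dest)

    # Long timer TL expired: remaining members are considered failed.
    return pending
```

The caller decides what to do with the returned set, such as removing those members from the group.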

A problem with acknowledgments, even in unicast communication, is that they introduce extra traffic on the network since each message has a corresponding acknowledgment. They also introduce delays since a sender will wait for an acknowledgment before sending the next message. With multicast, the problem gets worse because we expect an acknowledgment from each group member. Even if we use hardware mechanisms that allow us to send a single message, we still expect multiple responses. This problem is called feedback implosion.

The overhead of acknowledgments can be optimized in several ways:

Pipelining is a technique where a sending process can send a sequence of messages without waiting for an acknowledgment of the receipt of one message before sending the next one. Acknowledgments (ACKs) are received asynchronously. The sender must store the message until it is acknowledged by each group member - or until it decides the group member is unreachable.

Cumulative ACKs are a technique where the receiver does not send an acknowledgment immediately but instead waits to see if other messages come from that sender. If they do, the receiver can send one message to acknowledge the receipt of several packets.

Piggybacked ACKs take advantage of the fact that communications are often bidirectional: a process sends a message and the receiving process sends a response. The acknowledgment can be placed within the response packet instead of sending it as a separate packet.

TCP uses all three of these techniques.

We can also increase performance by having the receiver contact the sender only if it missed a message. This is a technique of feedback suppression. The sender maintains a count of the number of messages sent. This count is appended to each message sent and acts as a message sequence number. Recipients send no acknowledgment message unless the sequence number indicates that a message was missed. This is a negative acknowledgment (NACK).
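A receiver-side sketch of this idea follows. It assumes a single sender whose sequence numbers start at 1; the class name `NackReceiver` and its method are hypothetical.

```python
from typing import List, Set


class NackReceiver:
    """Stays silent unless a sequence number reveals a missed message."""

    def __init__(self) -> None:
        self.highest_seen = 0
        self.missing: Set[int] = set()

    def on_message(self, seq: int) -> List[int]:
        """Return the sequence numbers to NACK (empty list means stay silent)."""
        if seq in self.missing:
            self.missing.discard(seq)    # a retransmission filled a gap
            return []
        if seq <= self.highest_seen:
            return []                    # duplicate, nothing to report
        # Everything between the last message seen and this one was missed.
        gap = list(range(self.highest_seen + 1, seq))
        self.missing.update(gap)
        self.highest_seen = seq
        return gap
```

Note that a receiver only learns about a lost message when a later one arrives, so the loss of the last message in a stream goes unnoticed until the sender transmits again.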

Optimization: feedback suppression

An optimization in the case of multicast transmission is for a receiver to multicast the NACK. That way, other receivers will not have to send a duplicate NACK for the same message. To avoid the situation where a lot of receivers discover they missed a message and simultaneously send a NACK, the algorithm can add a small random delay before multicasting a NACK response. That makes it highly probable that one (or a very small number) process will send its NACK first and others will receive it and thus stay silent.
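The effect of the random delay can be illustrated with a small model. It is a simplification that assumes a multicast NACK reaches every other receiver after a fixed `propagation_ms`; the function names and the millisecond units are choices made for this example.

```python
import random
from typing import Dict, Iterable, List


def pick_delays(receivers: Iterable[str], max_delay_ms: int,
                rng: random.Random) -> Dict[str, int]:
    """Each receiver that missed the message picks its own random back-off."""
    return {r: rng.randrange(max_delay_ms) for r in receivers}


def nack_senders(delays_ms: Dict[str, int], propagation_ms: int) -> List[str]:
    """Return the receivers that actually multicast a NACK.

    A receiver stays silent if the earliest NACK reaches it before its
    own timer expires.
    """
    first = min(delays_ms.values())
    return sorted(
        r for r, d in delays_ms.items()
        if d == first or d < first + propagation_ms
    )
```

With delays of 10, 12, and 50 ms and a 5 ms propagation time, the first two receivers both send (the second fires before hearing the first), while the third hears a NACK in time and stays silent.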

For the protocol to work, the sender is responsible for keeping copies of old messages for retransmission. The problem with this protocol is that the sender has no way to detect that a process is no longer responding and therefore hasn’t received messages. Also, since delivery times are unpredictable with asynchronous networks, the sender has to, in theory, store all messages forever on the chance that some process sends a negative acknowledgment for a specific message.

Hierarchical feedback control

Another technique to avoid feedback implosion is to partition a group of computers into multiple subgroups and organize them into a tree structure. The sender is at the root of the tree or relays messages to the root. The root of each subgroup serves as a local coordinator for the systems below it and is responsible for receiving acknowledgments (ACKs) and performing retransmissions only within the subgroup. This technique brings in the complexity of organizing subgroups (which can be complicated when group members are added and removed), encapsulating messages so they can be addressed to a coordinator, and software to actually perform the forwarding.

Unreliable multicast

If the reliable multicast is deemed too costly, the next step down is the unreliable multicast. This is the basic multicast in which a message is sent and the process just hopes that it arrives at all destinations. It is useful for services that don’t require reliability (e.g., multicast video and audio). It is also useful in cases when the sender does not know the identity of the group members.

If multicast or broadcast facilities are available, the sender needs to only send one message. The recipients need to send nothing. If these facilities are not available, they can be simulated as mentioned above.

Message ordering

To make group communication easy to use and understand, two properties are desirable:

- atomicity: message arrives everywhere

- first-in-first-out (FIFO) message ordering: consistent message ordering.

Suppose we have a group of four processes {P0, P1, P2, P3}. Processes A and B, outside the group, send messages to the group at approximately the same time via multiple unicasts. A sends message MA and B sends message MB.

Process A: MA → P0, MA → P1, MA → P2, MA → P3

Process B: MB → P0, MB → P1, MB → P2, MB → P3

If each process receives the sequence of messages {MA, MB} then we have consistent, or good, ordering. On the other hand, if some processes receive MA followed by MB while others receive MB first and then MA, we have inconsistent, or bad, ordering.

Total ordering

To avoid confusion and potential problems, it is desirable to have all messages arrive in the exact order sent. This is known as global time ordering. It is not feasible to implement global time ordering. Messages can originate from multiple computers at the same time. Also, messages from closer computers can arrive earlier than earlier messages sent from more distant computers. We cannot count on timestamps being so precise and unique that each receiving system can make the same comparisons.

A compromise is to say that if two messages are relatively close together, all receiving systems will choose one of them as being “first.” All messages will arrive at all group members in the same order (which may or may not be the exact order sent). This compromise is called consistent time ordering or total ordering.

One algorithm for achieving total ordering is:

Assign a unique totally sequenced message ID to each message.

Each message is regarded as stable at an element if no message with a lower ID is expected to arrive. When messages can arrive out of order, the system will accept such messages but not forward them to the application. A message is stable at an element when the system has received all earlier messages and passed them on to the receiving process. Any message that is stable at an element can be immediately passed on to the receiving process. This ensures in-order delivery. Any other messages are buffered until the out-of-order messages are received.

The communications driver passes only messages that are stable at an element to the application, passing the message with the lowest ID first.

Each member saves all messages in a queue for delivery to applications.

One problem that arises in implementing this protocol is that of generating a message identifier since we need a shared sequence of identifiers. A few solutions can be adopted:

Use a sequencer, a common process to which all multicast messages are sent. The sequencer receives a message, attaches a sequence number, and then resends the message to the group members.

Use a sequence number server. A process will first contact the sequence number server to request a sequence number. The process will then attach the sequence number to the message and multicast it.

Alternatively, one can come up with a distributed protocol for generating unique, monotonically increasing message identifiers.
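The sequencer approach can be sketched as follows. This is an in-process illustration with hypothetical class names: the `Sequencer` stamps each message with the next ID, and each `TotalOrderMember` delivers messages strictly in ID order regardless of arrival order.

```python
import itertools


class Sequencer:
    """Common process that attaches a shared sequence number to each message."""

    def __init__(self):
        self._counter = itertools.count(1)

    def stamp(self, payload):
        return (next(self._counter), payload)


class TotalOrderMember:
    """Buffers out-of-order messages and delivers them in ID order."""

    def __init__(self):
        self.next_id = 1
        self.holdback = {}     # message ID -> payload
        self.delivered = []    # what the application has seen, in order

    def receive(self, stamped):
        msg_id, payload = stamped
        self.holdback[msg_id] = payload
        # Deliver every message that is now stable (no lower ID outstanding).
        while self.next_id in self.holdback:
            self.delivered.append(self.holdback.pop(self.next_id))
            self.next_id += 1
```

If the sequencer stamps MA as 1 and MB as 2, a member that receives MB first holds it until MA arrives, so every member delivers MA and then MB.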

Partial (causal) ordering

We can relax ordering rules further and dictate that ordering will be preserved only amongst related messages. Causal ordering means that messages that are causally related (according to Lamport’s happened before definition) will be delivered in order to all group members. Concurrent messages may be delivered in any order.

One way of implementing causal ordering is by having each process keep a precedence vector and sending it along with every message that is sent to the group. A precedence vector is similar to a vector timestamp, with two exceptions:

- The event counter (process' position in the vector) is not updated for received messages.

- Other elements are not set to the maximum value of each element when comparing the two vectors.

The vector applies only to events that relate to sending messages to a specific group. Each entry in the precedence vector represents the latest message sequence number that the corresponding process (group member) knows about.

When a process Psender sends a message, it increments the element of the vector that corresponds to its own entry:

Vsender[sender] = Vsender[sender] + 1

This vector is sent along with the message.

When a process Preceiver receives a message from Psender, it checks two conditions:

(1) The message must be the very next message from Psender. That is, the value of the sequence number in the received vector that corresponds to Psender must be exactly one greater than the one we have for that process in our vector:

(Vsender[sender] == Vreceiver[sender] + 1) ?

(2) The message should not be causally dependent on another message that the receiver has not yet seen. This means that every other element of the vector has to be less than or equal to the corresponding element of the vector at the receiver.

∀i, i ≠ sender: (Vsender[i] ≤ Vreceiver[i]) ?

If the vector from the sender contains some sequence number that is greater than a corresponding sequence number in the receiver’s vector, that means that the sending process has seen a message from some other process that the receiver has not yet processed. Since the precedence vector is used for group communication and the receiver is part of the group, that means the receiver needs to wait for that message to come.

If both conditions are satisfied, then the received message can be delivered to the application immediately. Otherwise, it is placed in the hold-back queue until the conditions can be satisfied. Causal ordering has the advantage that there is no need for a global sequencer as in total ordering. Note that the precedence vector technique requires reliable message delivery. Otherwise, the receiver will not know if a message was lost or if a message has just not yet arrived.
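The precedence-vector checks can be sketched as a small class. The class and method names are hypothetical. One detail is an assumption that the text only implies: on delivery, the receiver records the sender's sequence number in its own vector. The sketch also does not deliver a process's own messages back to itself.

```python
class CausalMember:
    """Group member that delivers messages in causal order."""

    def __init__(self, index, group_size):
        self.index = index
        self.vector = [0] * group_size   # latest sequence number known per member
        self.holdback = []               # messages that cannot be delivered yet
        self.delivered = []

    def send(self, payload):
        # Increment our own entry and ship a copy of the vector with the message.
        self.vector[self.index] += 1
        return (self.index, list(self.vector), payload)

    def _deliverable(self, sender, vec):
        # (1) Must be the very next message from the sender.
        if vec[sender] != self.vector[sender] + 1:
            return False
        # (2) Must not depend on a message we have not yet seen.
        return all(vec[i] <= self.vector[i]
                   for i in range(len(vec)) if i != sender)

    def receive(self, message):
        self.holdback.append(message)
        progress = True
        while progress:                  # one delivery may unblock held messages
            progress = False
            for held in list(self.holdback):
                sender, vec, payload = held
                if self._deliverable(sender, vec):
                    self.holdback.remove(held)
                    self.vector[sender] = vec[sender]   # assumed update on delivery
                    self.delivered.append(payload)
                    progress = True
```

As an example, suppose P0 sends m1, P1 delivers it and then sends m2. If P2 receives m2 first, condition (2) fails because m2's vector shows a message from P0 that P2 has not seen, so m2 is held until m1 arrives.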

Sync ordering

Relaxing the requirements more, we can decide that ordering does not matter at all and messages can be received in a different order at different machines. However, we can provide a special message type: a synchronization (sync) primitive that can be sent to ensure that any pending messages are processed before any additional (post-sync) messages will be accepted. This means that if a message is sent, it will be processed by all members before the synchronization operation. Any message sent after a member sends a sync message will be processed by all members after the sync. Message delivery is not split on either side of the sync. A sync is also known as a barrier. This type of message ordering is known as sync ordering.

Single-source FIFO

FIFO (first in, first out) ordering on messages from each sender ensures that messages from each source are delivered in order but messages from multiple sources may be interleaved in any order at the receiver. For example, if host A sends messages m1, m2, m3 and host B sends messages n1, n2, n3, it is valid for host C to receive the sequence m1, m2, m3, n1, n2, n3 and for host D to receive the sequence m1, n1, n2, m2, n3, m3.

Unordered multicast

Finally, the most relaxed form of message delivery is the unordered multicast. Messages can be delivered in a different order to different members. We may impose sequential ordering per source, which means that all messages sent from one member will be received in the order sent by all members, although members may receive different interleaved messages from others.

IP multicasting

IP multicasting is designed, like IP, to span multiple physical networks. Membership is dynamic: a machine can join or leave a multicast group at any time. Moreover, there is no central coordinator and no restriction on the number of hosts that can be in a group. Multicasting provides network efficiency. Packets in a multicast stream only need to be replicated when a router needs to send them to multiple network links. Only one stream of packets is needed on any network segment regardless of the number of receivers.

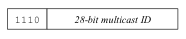

An IP multicast address (also known as a class D address) is an IP address that starts with 1110 and contains a 28-bit multicast address.

This spans the IP addresses from 224.0.0.0 through 239.255.255.255 (Figure 6). The set of all machines listening to a particular multicast address make up a host group.

These machines can span multiple physical networks. Membership is dynamic – a machine can leave or join a group at any time and there is no restriction on the number of hosts in a group. A machine does not have to be a member of the group to send messages to the group.

A host may join this address and receive messages addressed to that multicast ID.

A multicast address may be chosen arbitrarily, but some well-known host group addresses are assigned by the IANA (Internet Assigned Numbers Authority). IANA information can be found in RFC 1340. This is similar to port numbers: arbitrary ports may be chosen but certain numbers are reserved for known applications. For example, some well-known ports are 21 for FTP, 25 for SMTP, 80 for HTTP. Some well-known multicast addresses are 224.0.0.1 for all systems on this subnet, 224.0.1.2 for SGI’s Dogfight, and 224.0.1.7 for the Audionews service.

LAN multicasting

Since IP is a logical network built on top of physical networks, let’s examine how multicasting works on LAN cards (e.g., an ethernet card). LAN cards that support multicast support it in one of two ways:

Packets are filtered based on a hash value of the multicast hardware address (some unwanted packets may pass through because of hash collisions).

The LAN card supports a small, fixed number of multicast addresses on which to listen. If the host needs to receive more, the LAN card is put in a multicast promiscuous mode to receive all hardware multicast packets.

In either case, the device driver must check that the received packet is really the one that is needed. Even if the LAN card performed perfect filtering, there is still a need to translate a 28-bit IP multicast ID to the hardware address (e.g., a 48-bit ethernet address). The translation of IP multicast ID numbers to ethernet addresses is defined by the IANA (Internet Assigned Numbers Authority), which decrees that the least significant 23 bits of the IP address are copied into an ethernet MAC address of the form 01:00:5e:xx:xx:xx.
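The class D test and the 23-bit mapping can be worked through in a few lines. The function names are made up for this sketch; the bit layout follows the description above.

```python
import ipaddress


def is_class_d(addr: str) -> bool:
    """True if the top four bits of the IPv4 address are 1110."""
    return (int(ipaddress.IPv4Address(addr)) >> 28) == 0b1110


def multicast_mac(addr: str) -> str:
    """Map an IPv4 multicast address to its ethernet multicast address."""
    ip = int(ipaddress.IPv4Address(addr))
    if ip >> 28 != 0b1110:
        raise ValueError(f"{addr} is not a class D address")
    low23 = ip & 0x7FFFFF                 # least significant 23 bits
    mac = 0x01005E000000 | low23          # 01:00:5e prefix plus those bits
    return ":".join(f"{(mac >> shift) & 0xFF:02x}"
                    for shift in range(40, -8, -8))
```

Because only 23 of the 28 multicast bits fit, different IP groups can share one ethernet address: both 224.0.0.1 and 224.128.0.1 map to 01:00:5e:00:00:01. This is one reason the driver or IP layer must still check the destination of each received packet.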

IP multicasting on a single network

On a single physical network, the sender specifies a destination IP address that is a multicast address (class D). The device driver then converts this address to a corresponding ethernet address and uses this address in its hardware header (which envelopes the IP header). Now it sends out this multicast ethernet packet which contains a multicast IP packet within it.

An IPv4 multicast address is mapped onto an Ethernet multicast address by copying the least-significant 23 bits of the address onto an Ethernet multicast address. Within a LAN, an ethernet chip is programmed to perform an exact match on a small set of addresses or to accept addresses that hash to particular values. The ethernet driver will need to remove any unneeded addresses that pass through. The ethernet chip can also be set to multicast promiscuous mode, where it will accept all multicast ethernet packets.

When a process wishes to receive multicast packets, it notifies the IP layer that it wants to receive datagrams destined for a certain IP address. The device driver has to enable reception of ethernet packets that contain that IP multicast address. This action is known as joining a multicast group.

Upon receiving such packets, the device driver sends the IP packet to the IP layer, which must deliver a copy of the packet to all processes that belong to the group.
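From an application's point of view, joining a group is a socket option. The sketch below uses the standard sockets interface as exposed by Python; the helper names are hypothetical, and any group address and port passed in are the caller's choice.

```python
import socket
import struct


def membership_request(group: str, interface: str = "0.0.0.0") -> bytes:
    """Build the 8-byte request: group address followed by local interface.

    An interface of 0.0.0.0 lets the system choose the interface.
    """
    return struct.pack("4s4s",
                       socket.inet_aton(group),
                       socket.inet_aton(interface))


def join_group(group: str, port: int) -> socket.socket:
    """Return a UDP socket that receives datagrams sent to the group."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM, socket.IPPROTO_UDP)
    # Allow several processes on the same host to listen on the same port.
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    sock.bind(("", port))
    # Ask the IP layer to join the group.
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_ADD_MEMBERSHIP,
                    membership_request(group))
    return sock
```

A receiver would then call something like `join_group("239.1.2.3", 5000)` followed by `recvfrom` on the returned socket; the address and port here are arbitrary examples.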

IP multicasting beyond the physical network

When IP packets flow through multiple physical networks, they go through routers, which bridge one network to another. In the case of multicasting, a multicast-aware router needs to know whether there are any hosts on a physical network that belong to a multicast group.

The Internet Group Management Protocol (IGMP, RFC 1112) is designed to accomplish this task. It is a simple datagram-based protocol that is similar in principle to ICMP. Packets are fixed-size messages containing a 20-byte IP header, and 8 bytes of IGMP data. This data includes:

- 4-bit version number

- 4-bit operation type (1=query sent by router, 2=response)

- 16-bit checksum

- 32-bit IP class D address
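The 8-byte layout can be sketched with `struct`. The fields listed above account for 56 bits; this sketch assumes the remaining 8 bits are an unused byte placed after the type field, following the RFC 1112 message format, and uses the standard Internet checksum (one's complement of the one's-complement sum of 16-bit words). The function names are hypothetical.

```python
import socket
import struct


def internet_checksum(data: bytes) -> int:
    """One's complement of the one's-complement sum of 16-bit words."""
    if len(data) % 2:
        data += b"\x00"
    total = sum(struct.unpack(f"!{len(data) // 2}H", data))
    while total >> 16:                       # fold carries back in
        total = (total & 0xFFFF) + (total >> 16)
    return ~total & 0xFFFF


def igmp_v1_message(op_type: int, group: str, version: int = 1) -> bytes:
    """Build the 8 bytes of IGMP data (1 = query, 2 = response)."""
    first_byte = (version << 4) | op_type    # 4-bit version, 4-bit type
    address = socket.inet_aton(group)        # 32-bit class D address
    # Compute the checksum over the message with the checksum field zeroed.
    unchecked = struct.pack("!BBH4s", first_byte, 0, 0, address)
    checksum = internet_checksum(unchecked)
    return struct.pack("!BBH4s", first_byte, 0, checksum, address)
```

A receiver can validate a message by running the same checksum over all 8 bytes; a correct message yields 0.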

The IGMP protocol works as follows.

To join a multicast group (that is, to start receiving messages that are sent to that address), the host will use IGMP to send a multicast join message (also known as a membership report) to join a specific group. A multicast-aware router will get this message and now know that the link on which the message arrived needs to receive any packets addressed to that multicast group.

Periodically, a router will send a membership query message to all hosts on the LAN. If any node is still interested in the group, it must re-send a join message. When a machine receives an IGMP query, it sends one IGMP response packet for each group for which it is still interested in receiving packets.

In version 1 of the protocol, if no join messages are received in response to its queries, the router stops forwarding traffic for that group and the LAN will no longer receive packets for that group. With IGMP v2, a leave message was added to avoid having to wait for the timeout to realize that nobody is interested in a group. That avoids needlessly sending multicast traffic on a LAN where no hosts are interested in receiving the messages anymore.

A lingering problem was that multicast IP uses no centralized coordinator and anyone can send multicast messages to any multicast addresses. IGMP v3 added the ability for a host that joins a multicast group to specify the source address (originator) of the multicast. The router will then not forward packets originating from unwanted source addresses onto the LAN.

IGMP allows edge routers (those routers connected to LANs) to be told which multicast groups the nodes on their connected LANs are interested in receiving. PIM, Protocol Independent Multicast, is responsible for conveying multicast membership information among routers within the wide-area Internet. It assumes the presence of other protocols to know the network topology and how routers are connected together. There are two basic approaches to multicasting on the WAN (wide-area network): dense mode (flooding) and sparse mode multicast.

Dense Mode multicast, also known as flooding, originates from the multicast sender. The message is duplicated and sent to all connected routers. Each of those routers, in turn, duplicates and sends the message to all of its connected routers, and so on. To avoid routing loops, each router uses reverse path forwarding (RPF). A received packet is forwarded only if it was received via the link that the router knows is the shortest path back to the sender (it finds this by checking its forwarding table, which is what it would use if it were sending a packet to that address). PIM Dense Mode floods the entire network of connected multicast-aware routers.
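The effect of the reverse path forwarding check can be seen in a small simulation. The sketch below assumes a hypothetical topology given as an adjacency list, uses hop count as the measure of the shortest path, and stands in for each router's forwarding table with a breadth-first search from the sender.

```python
from collections import deque


def next_hop_toward(source, links):
    """For each router, the neighbor on a shortest path back to the source."""
    toward = {source: None}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        for neighbor in links[node]:
            if neighbor not in toward:
                toward[neighbor] = node
                queue.append(neighbor)
    return toward


def flood(source, links):
    """Flood one packet; return per-router counts of accepted and dropped copies."""
    toward = next_hop_toward(source, links)
    accepted = {router: 0 for router in links}
    dropped = {router: 0 for router in links}
    queue = deque((source, n) for n in links[source])   # (sender, receiver)
    while queue:
        sender, receiver = queue.popleft()
        if toward[receiver] != sender:
            dropped[receiver] += 1      # fails the RPF check: not forwarded
            continue
        accepted[receiver] += 1
        for neighbor in links[receiver]:
            if neighbor != sender:      # send out every other link
                queue.append((receiver, neighbor))
    return accepted, dropped


# Hypothetical topology with loops: S-A, S-B, A-B, A-C, B-C.
links = {
    "S": ["A", "B"],
    "A": ["S", "B", "C"],
    "B": ["S", "A", "C"],
    "C": ["A", "B"],
}
accepted, dropped = flood("S", links)
```

In this topology every router other than the sender accepts exactly one copy of the packet. The extra copies that arrive over non-shortest-path links are dropped rather than forwarded, which is what keeps the flood from looping.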

If an edge router receives this multicast packet and is not interested in the data stream (i.e., it has not received IGMP join messages), it will send a prune message to the router that delivered that packet. If that router receives prune messages from all interfaces, it will in turn send a prune message to the router that is sending it the multicast messages. A router sends prune messages if it is getting redundant traffic from another link or if its downstream router or LAN connections are not interested in the stream. If a node on a LAN joins a multicast group at a later time, sending an IGMP message to a router, that router would then send a PIM graft message to its connected routers to state interest in the stream. Dense mode only makes sense when there are receivers spread through most locations covered by multicast-aware routers. It is rarely used.
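Pruning can be viewed as trimming the flooded distribution tree from the leaves upward: a router stays on the tree only if its own LAN has members or some downstream router still wants the stream. The sketch below computes the end result rather than exchanging individual messages; the function name, router names, and tree are assumptions for illustration.

```python
def routers_after_prune(children, has_members, root):
    """Return the routers that keep receiving the stream once prunes settle.

    children:    router -> list of downstream routers on the distribution tree
    has_members: routers whose LANs reported group members via IGMP
    root:        the router nearest the sender
    """
    keep = set()

    def visit(router):
        # Evaluate every downstream branch before deciding about this router.
        needed = [visit(child) for child in children.get(router, [])]
        stays = router in has_members or any(needed)
        if stays:
            keep.add(router)
        return stays        # False corresponds to sending a prune upstream

    visit(root)
    return keep


# Hypothetical tree: R1 feeds R2 and R3; R2 feeds R4 and R5; R3 feeds R6.
children = {"R1": ["R2", "R3"], "R2": ["R4", "R5"], "R3": ["R6"]}
pruned = routers_after_prune(children, {"R4"}, "R1")
grafted = routers_after_prune(children, {"R4", "R6"}, "R1")
```

With members only behind R4, the branch through R3 and R6 is pruned, as is R5. When a host behind R6 later joins, recomputing with R6 included models the graft: R3 and R6 return to the tree.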

In contrast to Dense Mode, PIM Sparse Mode starts with requests from multicast receivers rather than flooding the network with traffic from the sender. Each router must send a join message to its connected routers in order to request multicast traffic (and a prune message when it no longer wants that traffic). This causes multicast packets to go only to the routers where they are needed. The trick to getting this to work is that a multicast group must be associated with a router known as a rendezvous point (RP). The RP acts as a central point that senders know how to contact to register that they are transmitting multicast streams and that receivers contact to join multicast streams. Join messages initially are routed to the RP to avoid flooding the network. From there, multicast packets are routed to participating routers, that is, routers that expressed an interest in that multicast group.

A sender that transmits multicast packets will simply have those packets routed only to the rendezvous point (this is effectively a unicast stream). The RP then multicasts the packet to any routers from which it has received Join messages.
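The way joins build a delivery tree rooted at the rendezvous point can be sketched as follows. This is a simplified model under stated assumptions: each router's unicast next hop toward the RP is given in a hypothetical table, one group is tracked, and a join stops at the first router that is already on the tree.

```python
def send_join(edge_router, toward_rp, tree):
    """Propagate a join hop by hop toward the RP, recording downstream state.

    toward_rp: router -> next hop on the unicast path to the RP (RP maps to None)
    tree:      router -> set of downstream routers that requested the group
    """
    router = edge_router
    while toward_rp[router] is not None:
        upstream = toward_rp[router]
        already_on_tree = bool(tree.get(upstream))
        tree.setdefault(upstream, set()).add(router)
        if already_on_tree:
            break           # upstream already receives the stream
        router = upstream


def routers_reached(rp, tree):
    """Routers that receive a packet when the RP forwards it down the tree."""
    reached, stack = set(), [rp]
    while stack:
        for downstream in tree.get(stack.pop(), ()):
            reached.add(downstream)
            stack.append(downstream)
    return reached


# Hypothetical topology: R2 and R3 reach the RP through R1; R4 connects directly.
toward_rp = {"RP": None, "R1": "RP", "R2": "R1", "R3": "R1", "R4": "RP"}
tree = {}
send_join("R2", toward_rp, tree)
send_join("R3", toward_rp, tree)
reached = routers_reached("RP", tree)
```

Here R4 never sent a join, so packets arriving at the RP are forwarded to R1 and on to R2 and R3, but never toward R4.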

To ensure that the RP does not become a bottleneck, after the aggregate bandwidth exceeds a defined threshold, routers closer to the receiver will try to join routers that are more directly connected to the source since they have seen the multicast traffic and know the source address.

Sparse mode is ideal when the receivers are concentrated among a few network segments.

References

George Coulouris, Jean Dollimore, Tim Kindberg, and Gordon Blair, Distributed Systems: Concepts and Design. Addison-Wesley; 5th edition, May 7, 2011.

W. Richard Stevens, TCP/IP Illustrated Volume 1. Addison-Wesley Professional, December 1993.

Andrew S. Tanenbaum and Maarten Van Steen, Distributed Systems: Principles and Paradigms, Second Edition. Prentice Hall, October 2006.

Andrew S. Tanenbaum, Distributed Operating Systems. Dorling Kindersley Pvt Ltd, January 30, 2009.

Web References

Darrin Woods, Tutorial: The Wizardry of Multicast. Network Computing, February 19, 2001.

Mark Handley and Jon Crowcroft, Internet Multicast Today. The Internet Protocol Journal, Volume 2, No. 4., Cisco Systems, 1999.

Internet Protocol Multicast, Cisco Wiki.

RFC 1112: Host Extensions for IP Multicasting (IGMP, version 1)

IPv4 Multicast Address Space Registry, Internet Assigned Numbers Authority.

John Kristoff, Anycast Addressing on the Internet. Kuro5hin.org, Jan 2004.

This is an update of a document authored on October 25, 2012.

-

By a totally sequenced message ID we mean that all members of the group get unique, chronologically increasing sequence numbers for their messages.